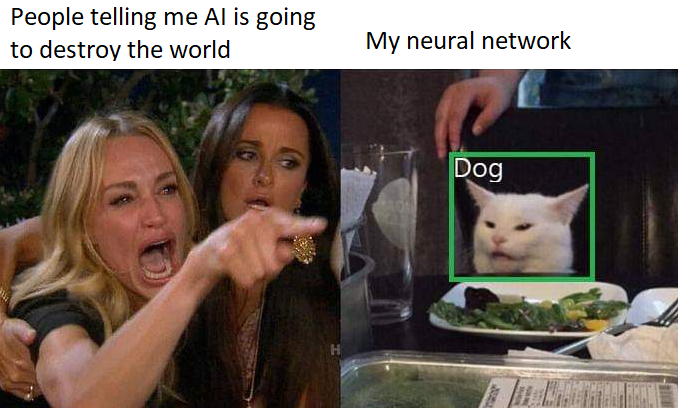

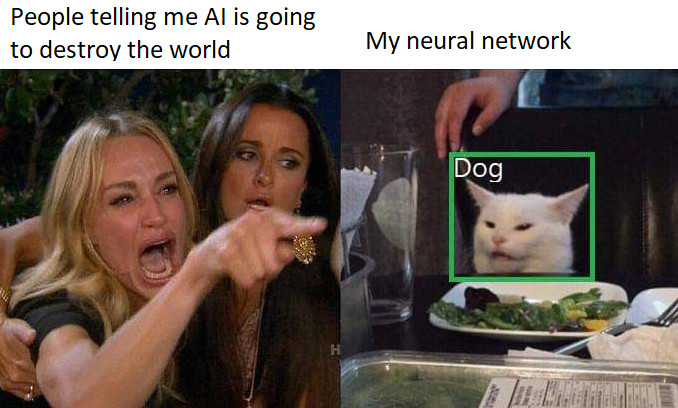

It happened again. Last week, while I was explaining my job to someone, they interrupted me: “So you’re building Skynet.” I felt I had to show them this meme, which I think describes my current situation pretty well.

Artificial General Intelligence and Pragmatic Thinking

Needless to say, superhuman AI is nowhere near happening. Nonetheless, I think the public is fascinated by the idea of superintelligent computers taking over the world. This fascination has a name: the myth of the singularity.

The singularity refers to the hypothetical point in time when an artificial intelligence would enter a process of exponential improvement: software so intelligent that it could improve itself faster and faster. From then on, technical progress would be driven exclusively by AIs, with unforeseeable consequences for the fate of the human species.

The singularity is closely tied to the concept of Artificial General Intelligence. An Artificial General Intelligence can be defined as an AI that can perform any task a human can perform. I find this concept far more interesting than the singularity, because its definition is at least somewhat concrete.

As a result, we have criteria to decide whether an algorithm is an Artificial General Intelligence or not. I, a human, can design pragmatic and innovative solutions to increase the value of your data. Current AI software can’t. Therefore, we haven’t reached Artificial General Intelligence.

Even more useful: if we can identify the features of human intelligence, we can tell what our algorithms are missing, and then improve them.

Let’s do that.

How can we characterize human intelligence?

We defined an Artificial General Intelligence (AGI) as an AI that can at least match the capabilities of human intelligence. To go further, we need some idea of what human intelligence actually consists of.

We have two options here: we can focus either on the nature of human intelligence or on its characterization. Its nature is where it comes from; its characterization is how we recognize it.

Every field of study, from psychology, biology, and genetics to sociology, cognitive science, mathematics, and theology, has produced countless theories about the nature of human intelligence. I know close to nothing about any of them. The good news is that we only need to focus on the characterization of human intelligence.

If we want to get closer to Artificial General Intelligence, our best shot is not to try to reproduce the human brain. The definition of AGI is functional: an AI that can do anything humans can do. What counts is what the system can do, not how it does it. So, what can human intelligence do?

Of course, we can’t draw up an exhaustive list here, but many features come to mind:

- abstract reasoning,

- learning from past experience,

- composition of elements,

- adaptability to new environments,

- creativity,

- empathy,

- perception,

- problem solving,

- communication,

- and many more (feel free to add your own in the comments).

I promised you three reasons why we are far from achieving Artificial General Intelligence, so I’m going to arbitrarily pick three features of human intelligence that our algorithms do not possess yet:

- Out-of-distribution generalization

- Compositionality

- Conscious reasoning

To be fair, the choice is not that arbitrary. We are going to focus on these three characteristics because we have ideas on how to achieve them. Isn’t that exciting?
