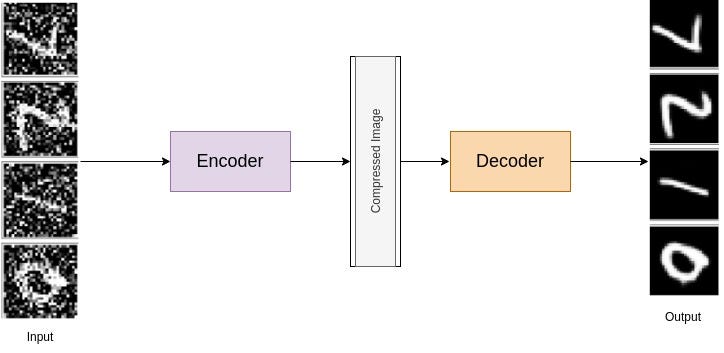

Plain autoencoders are of limited use in practice, but they can denoise images quite successfully simply by being trained on noisy images. We can generate noisy images by adding Gaussian noise to the training images and then clipping the values to lie between 0 and 1.
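The noise-and-clip step can be sketched in a few lines of PyTorch. This is a minimal sketch, not the article's original code: the helper name `add_gaussian_noise` and the default `noise_factor=0.5` are assumptions chosen for illustration, and the random batch only stands in for real MNIST images.

```python
import torch


def add_gaussian_noise(images, noise_factor=0.5):
    """Return a noisy copy of `images`, clipped back into [0, 1].

    `noise_factor` (an assumed default) scales the standard deviation
    of the zero-mean Gaussian noise that is added to every pixel.
    """
    noise = noise_factor * torch.randn_like(images)
    return torch.clamp(images + noise, 0.0, 1.0)


# Stand-in batch shaped like MNIST: 4 grayscale 28x28 images in [0, 1].
clean = torch.rand(4, 1, 28, 28)
noisy = add_gaussian_noise(clean)
```

Clipping matters because the clean pixels already lie in [0, 1]; without it the noisy inputs would fall outside the range the network is trained to reproduce.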

“Denoising auto-encoder forces the hidden layer to extract more robust features and restrict it from merely learning the identity. Autoencoder reconstructs the input from a corrupted version of it.”

A denoising auto-encoder does two things:

- Encode the input (preserve the information about the data)

- Undo the effect of a corruption process stochastically applied to the input of the auto-encoder.

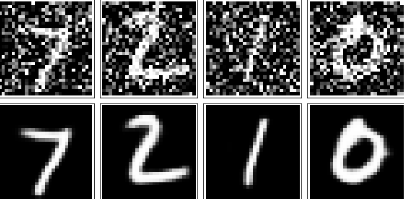

To demonstrate the denoising capabilities of autoencoders, we’ll use noisy images as input and the original, clean images as targets.

Example: Top image is input, and the bottom image is the target.

Problem Statement:

Build the model for a denoising autoencoder, adding more and deeper layers to the network. Using the MNIST dataset, add noise to the data, then define and train an autoencoder to denoise the images.

Solution:

**Import Libraries and Load Dataset:** Start with the standard procedure: import the libraries and load the MNIST dataset.

**Visualize the Data:** You can use the standard matplotlib library to check whether the dataset has loaded correctly.
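One way to do this check is a small plotting helper. The function name `show_images` and the random stand-in batch are assumptions for illustration; with the real data you would pass a batch taken from the `train_loader` instead.

```python
import matplotlib.pyplot as plt
import torch


def show_images(images, n=5):
    """Plot the first `n` images of a (N, 1, H, W) batch in one row."""
    n = min(n, images.shape[0])
    fig, axes = plt.subplots(1, n, figsize=(2 * n, 2), squeeze=False)
    for ax, img in zip(axes[0], images[:n]):
        # Drop the channel dimension and convert to a NumPy array for imshow.
        ax.imshow(img.squeeze().detach().cpu().numpy(), cmap="gray")
        ax.axis("off")
    return fig


# Stand-in batch; with real data use: images, _ = next(iter(train_loader))
fig = show_images(torch.rand(8, 1, 28, 28))
# plt.show()  # uncomment when running interactively
```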

The output should show a handful of grayscale handwritten digits.

**Network Architecture:** The most crucial part is defining the network. Denoising is a hard problem for the network, so we’ll need deeper convolutional layers here. It is recommended to start with a depth of 32 for the convolutional layers in the encoder, and to use the same depths in reverse order through the decoder.
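The architecture described above could look roughly like the following. Only the starting depth of 32 comes from the text; the class name `ConvDenoiser`, the 32/16/8 depth schedule, the kernel sizes, and the use of transposed convolutions for upsampling are assumptions made for this sketch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ConvDenoiser(nn.Module):
    """Convolutional denoising autoencoder for 1x28x28 images (a sketch)."""

    def __init__(self):
        super().__init__()
        # Encoder: depth starts at 32 and shrinks (assumed schedule 32/16/8).
        self.conv1 = nn.Conv2d(1, 32, 3, padding=1)
        self.conv2 = nn.Conv2d(32, 16, 3, padding=1)
        self.conv3 = nn.Conv2d(16, 8, 3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)
        # Decoder: same depths going backwards, upsampling by a factor of 2.
        self.t_conv1 = nn.ConvTranspose2d(8, 8, 3, stride=2)
        self.t_conv2 = nn.ConvTranspose2d(8, 16, 2, stride=2)
        self.t_conv3 = nn.ConvTranspose2d(16, 32, 2, stride=2)
        # Final convolution maps the 32 channels back to one image channel.
        self.conv_out = nn.Conv2d(32, 1, 3, padding=1)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # 28x28 -> 14x14
        x = self.pool(F.relu(self.conv2(x)))  # 14x14 -> 7x7
        x = self.pool(F.relu(self.conv3(x)))  # 7x7  -> 3x3
        x = F.relu(self.t_conv1(x))           # 3x3  -> 7x7
        x = F.relu(self.t_conv2(x))           # 7x7  -> 14x14
        x = F.relu(self.t_conv3(x))           # 14x14 -> 28x28
        # Sigmoid keeps the reconstructed pixels in [0, 1].
        return torch.sigmoid(self.conv_out(x))


model = ConvDenoiser()
out = model(torch.rand(2, 1, 28, 28))  # stand-in batch of two images
```

The first transposed convolution uses a kernel of 3 rather than 2 so that the 3x3 bottleneck grows back to 7x7, which lets the remaining two layers reach exactly 28x28.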

**Training:** Training the network takes significantly less time on a GPU, so I recommend using one. Here we are only concerned with the training images, which we can get from the train_loader; the labels are not needed.

In this case, we add some noise to these images and feed these _noisy_imgs_ to our model. The model produces reconstructed images based on the noisy input. But we want it to produce clean, noise-free images, so when we calculate the loss, we still compare the reconstructed outputs to the original images!

Because we’re comparing pixel values in the input and output images, it is best to use a loss meant for a regression task. Regression is about comparing quantities rather than probabilistic values, so in this case I’ll use MSELoss.
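Put together, the training procedure might look like the sketch below. The function name `train_denoiser`, the Adam optimizer, the learning rate, and `noise_factor=0.5` are assumptions; only the use of MSELoss and the comparison against the clean images come from the text. A tiny stand-in model and random data keep the example runnable without downloading MNIST.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train_denoiser(model, train_loader, n_epochs=1, noise_factor=0.5,
                   lr=1e-3, device="cpu"):
    """Train `model` to map noisy images back to the clean originals.

    Returns the average training loss per epoch. The optimizer, `lr`
    and `noise_factor` are assumed values, not the article's settings.
    """
    model.to(device)
    criterion = nn.MSELoss()  # regression loss on pixel values
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    for _ in range(n_epochs):
        running, n_seen = 0.0, 0
        for images, _ in train_loader:  # labels are ignored
            images = images.to(device)
            # Corrupt the inputs, then clip back into [0, 1].
            noisy_imgs = torch.clamp(
                images + noise_factor * torch.randn_like(images), 0.0, 1.0)
            optimizer.zero_grad()
            outputs = model(noisy_imgs)
            # The target is the ORIGINAL image, not the noisy one.
            loss = criterion(outputs, images)
            loss.backward()
            optimizer.step()
            running += loss.item() * images.size(0)
            n_seen += images.size(0)
        history.append(running / n_seen)
    return history


# Stand-in model and data so the sketch runs on its own.
device = "cuda" if torch.cuda.is_available() else "cpu"
toy_model = nn.Sequential(
    nn.Conv2d(1, 4, 3, padding=1), nn.ReLU(),
    nn.Conv2d(4, 1, 3, padding=1), nn.Sigmoid())
toy_data = TensorDataset(torch.rand(32, 1, 28, 28),
                         torch.zeros(32, dtype=torch.long))
losses = train_denoiser(toy_model, DataLoader(toy_data, batch_size=8),
                        n_epochs=2, device=device)
```

For the real task, pass the convolutional autoencoder and the MNIST `train_loader` in place of the stand-ins and train for more epochs.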
