In this blog, we’ll cover another interesting Machine Learning algorithm called Support Vector Regression (SVR). But before studying SVR, let’s look at the Support Vector Machine (SVM), since SVR is based on it.

SVM is a supervised learning algorithm that can be used for both Classification and Regression: it learns patterns from labelled training data and uses them to predict values for new data. The variant used for Classification is called SVC (Support Vector Classifier) and the variant used for Regression is called SVR (Support Vector Regression).

Let’s understand some basic concepts:

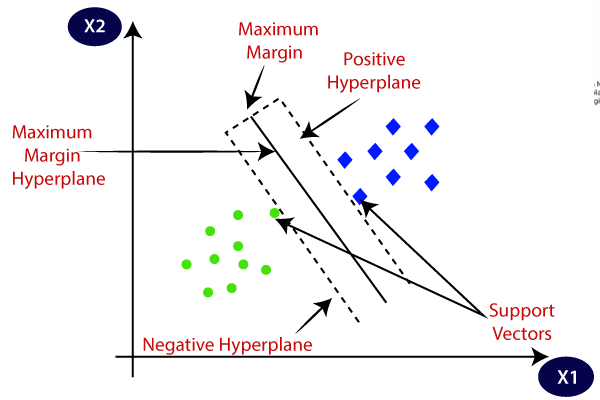

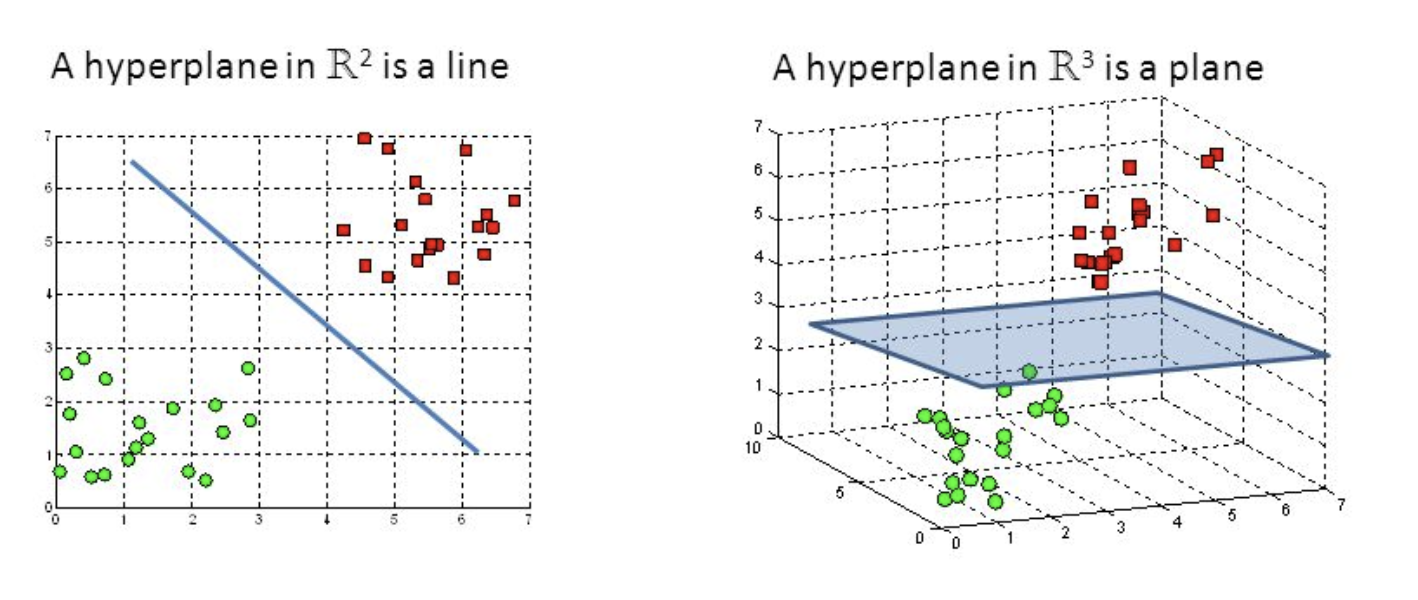

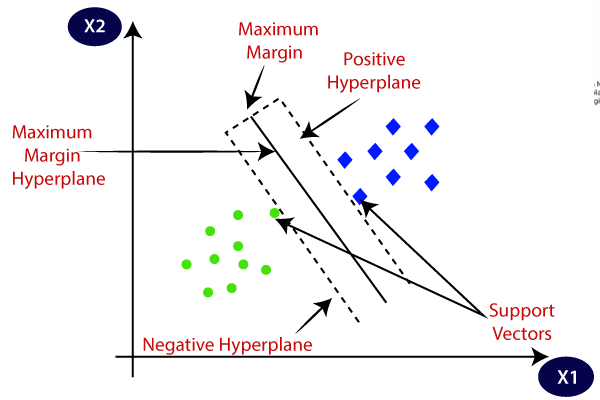

- Hyperplane: A hyperplane is a boundary used to divide data points into categories. A hyperplane always has 1 dimension less than the space in which the data is plotted. For example, in Linear Regression with 1 feature and 1 outcome, we plot the relationship in a 2-D plane, and the regression line fitted to it is 1-dimensional. That line is the hyperplane. Similarly, for a 3-D relationship, we get a 2-D hyperplane.

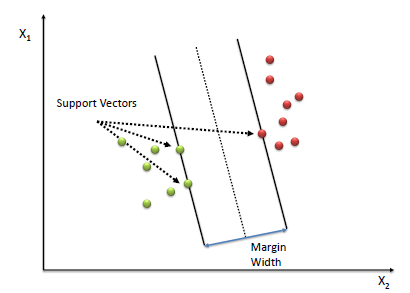

- Support Vectors: Support Vectors are the data points closest to the hyperplane; they determine its position and orientation. The lines or planes drawn through them, parallel to the hyperplane, are called Support Vector Lines or Support Vector Planes.

- Margin Width: The perpendicular distance between the 2 support vector lines or planes is called Margin Width.
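To make these three concepts concrete, here is a minimal sketch in plain Python. The hyperplane `w`, `b` and the sample points are hand-picked assumptions for illustration, not the result of training an SVM. The sketch measures each point's perpendicular distance to the hyperplane, picks the closest points as support vectors, and reads off the margin width.

```python
import math

# Hypothetical hyperplane w.x + b = 0 in 2-D (here the line x + y = 3),
# chosen by hand for illustration rather than fitted by an SVM.
w = (1.0, 1.0)
b = -3.0

# Hypothetical data points: the first three lie on one side, the rest on the other.
points = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0), (1.0, 1.0), (0.0, 0.0), (0.0, 1.0)]


def signed_distance(x):
    """Perpendicular distance from x to the hyperplane; the sign gives the side."""
    norm_w = math.hypot(w[0], w[1])
    return (w[0] * x[0] + w[1] * x[1] + b) / norm_w


distances = {p: signed_distance(p) for p in points}

# Support vectors: the points closest to the hyperplane.
closest = min(abs(d) for d in distances.values())
support_vectors = [p for p, d in distances.items() if math.isclose(abs(d), closest)]

# Margin width: the distance between the two support vector lines, which sit
# at the same distance on either side of the hyperplane.
margin_width = 2 * closest

print("support vectors:", support_vectors)
print("margin width:", round(margin_width, 4))
```

In this toy setup, the points (2, 2) and (1, 1) end up as the support vectors, one on each side of the line.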

KERNEL TRICK

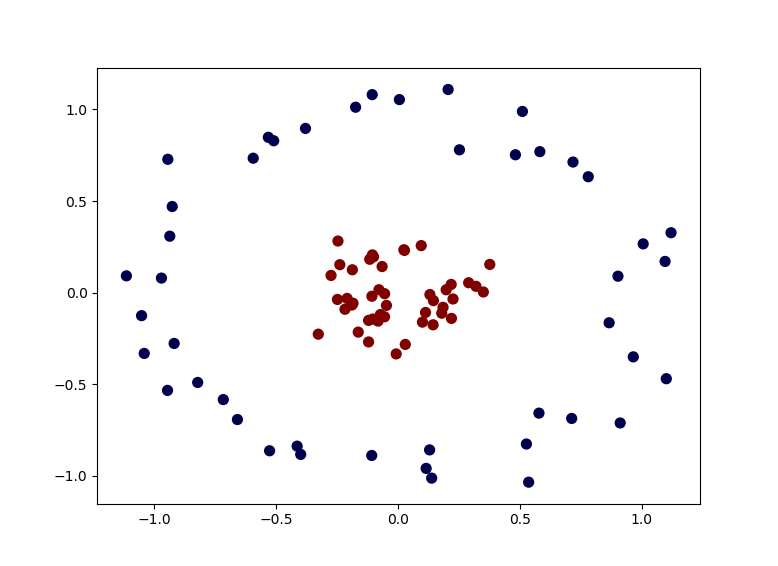

In the diagrams above, we saw that our data was linearly separable. But consider the case below.

In this case, a simple linear division is not possible. So, the SVM kernel maps the data into a space with one more dimension. After adding this dimension, the data becomes separable using a plane. The following intuition can be drawn.
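Here is a small sketch of that idea in plain Python. The data and the mapping `lift` are assumptions made up for illustration. Note also that a real SVM kernel gets the same effect without ever computing the extra dimension explicitly; the explicit mapping here is only meant to build intuition.

```python
# Hypothetical 1-D data: class 0 sits in the middle, class 1 on both ends,
# so no single cut-off on x can split the two classes.
xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
labels = [1, 1, 0, 0, 0, 1, 1]


def separable_by_threshold(values, labels):
    """True if a single cut-off on `values` puts each class on its own side."""
    ordered = [lab for _, lab in sorted(zip(values, labels))]
    # Separable only if the sorted labels switch class at most once.
    changes = sum(1 for a, b in zip(ordered, ordered[1:]) if a != b)
    return changes <= 1


def lift(x):
    """Illustrative mapping that adds one more dimension: x -> (x, x^2)."""
    return (x, x * x)


lifted = [lift(x) for x in xs]
squared = [z for _, z in lifted]

separable_1d = separable_by_threshold(xs, labels)
separable_lifted = separable_by_threshold(squared, labels)

# In the lifted 2-D space, the horizontal line z = 2.5 acts as the hyperplane.
predictions = [1 if z > 2.5 else 0 for _, z in lifted]

print("separable in 1-D:", separable_1d)
print("separable after adding x^2:", separable_lifted)
print("predictions match labels:", predictions == labels)
```

The original points cannot be split by one cut-off on `x`, but once each point also carries `x * x`, a straight line in the new 2-D space separates the classes.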
