_Detailed notes for the Machine Learning Foundation Course by Microsoft Azure & Udacity (2020), Lesson 6: Managed Services for Machine Learning_

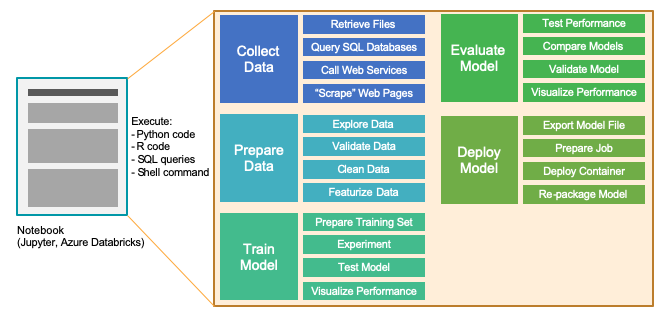

In this lesson, you will learn how to enhance your ML processes with managed services. We’ll discuss computing resources, the modeling process, automation via pipelines, and more.

Intro to Managed Services Approach

Conventional Machine Learning:

- **Lengthy installation and setup process:** setup usually involves installing several applications and libraries on the machine, configuring environment settings, and loading all the resources before you can even begin working in a notebook or an integrated development environment (IDE).

- **Expertise to configure hardware:** more specialized cases, such as deep learning, also require expertise to configure hardware-related aspects such as GPUs.

- **A fair amount of troubleshooting:** all this setup takes time, and there is sometimes a fair amount of troubleshooting involved in making sure the software versions you have installed are compatible with one another.
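To give a feel for the version troubleshooting described above, here is a minimal sketch that compares installed package versions against a set of pins. The package names and version numbers in `REQUIRED` are hypothetical placeholders, and the exact-match comparison is a simplifying assumption; real projects often accept version ranges.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(required: dict, installed: dict) -> dict:
    """Map each package whose installed version differs from its pin
    to a (required, installed) pair."""
    return {
        name: (pin, installed.get(name))
        for name, pin in required.items()
        if installed.get(name) != pin
    }


# Hypothetical pins; substitute whatever your project actually needs.
REQUIRED = {"numpy": "1.19.5", "scikit-learn": "0.24.2"}

if __name__ == "__main__":
    current = {name: installed_version(name) for name in REQUIRED}
    for name, (want, have) in find_mismatches(REQUIRED, current).items():
        print(f"{name}: required {want}, installed {have or 'not installed'}")
```

With a managed service, this kind of check is largely unnecessary because the environment comes preconfigured.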

Managed Services Approach:

- **Very little setup:** the service is fully managed, i.e., it provides a ready-made environment that is pre-optimized for machine learning development.

- **Easy configuration for any needed hardware:** you only need to specify a compute target, which is a compute resource where experiments are run and service deployments are hosted. The managed service also supports datastore and dataset management, a model registry, deployed services, endpoint management, and more.

- Examples of Compute Resources: Training clusters, inferencing clusters, compute instances, attached compute, local compute.

- Examples of Other Services: Notebooks gallery, Automated Machine Learning configurator, Pipeline designer, datasets and datastore managers, experiments manager, pipelines manager, model registry, endpoints manager.

Compute Resources

A compute target is a designated compute resource or environment where you run training scripts or host your service deployment. There are two different variations of compute targets: training compute targets and inferencing compute targets.
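The split between training and inferencing targets can be sketched as a small lookup. This is a plain-Python illustration, not the Azure SDK: the `ComputeTarget` class, the `Purpose` enum, and the purpose assigned to each catalog entry are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Purpose(Enum):
    TRAINING = "training"
    INFERENCING = "inferencing"


@dataclass(frozen=True)
class ComputeTarget:
    """Hypothetical description of a compute target (not an Azure SDK class)."""
    kind: str
    purpose: Purpose


# Illustrative catalog built from the compute resources named in these notes.
CATALOG = [
    ComputeTarget("training cluster", Purpose.TRAINING),
    ComputeTarget("compute instance", Purpose.TRAINING),
    ComputeTarget("local compute", Purpose.TRAINING),
    ComputeTarget("inferencing cluster", Purpose.INFERENCING),
]


def targets_for(purpose: Purpose) -> list:
    """Return the kinds of compute target in the catalog that serve the given purpose."""
    return [target.kind for target in CATALOG if target.purpose is purpose]


if __name__ == "__main__":
    print(targets_for(Purpose.TRAINING))
    print(targets_for(Purpose.INFERENCING))
```

The point of the sketch is only that you pick a target by what you want to do (train or serve), rather than by configuring hardware yourself.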

Training Compute:

Compute resources that can be used for model training.

For example:

- Training Clusters: the primary choice for model training and batch inferencing. They can also be used for general purposes such as running machine learning Python code. A cluster can have a single node or multiple nodes. It is fully managed: it scales automatically each time a run is submitted and handles cluster management and job scheduling for you. Both CPU and GPU resources are supported, so it can handle various types of workloads.

- Compute Instances: primarily intended to be used as notebook environments but can also be used for model training.

- Local Compute: the compute resources of your own machine, used to train models.
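The automatic scaling mentioned for training clusters can be pictured with a toy rule: grow the cluster to meet the demand from submitted runs, but stay within configured bounds. This is only a sketch under assumed names (`queued_runs`, `nodes_per_run`, `min_nodes`, `max_nodes` are hypothetical); it does not describe how the managed service's scheduler actually decides.

```python
def nodes_needed(queued_runs: int, nodes_per_run: int,
                 min_nodes: int, max_nodes: int) -> int:
    """Return a node count that covers the queued demand, clamped to the
    range [min_nodes, max_nodes]."""
    if min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("require 0 <= min_nodes <= max_nodes")
    if queued_runs < 0 or nodes_per_run < 1:
        raise ValueError("require queued_runs >= 0 and nodes_per_run >= 1")
    demand = queued_runs * nodes_per_run
    return max(min_nodes, min(demand, max_nodes))


if __name__ == "__main__":
    print(nodes_needed(0, 1, 0, 4))  # no runs queued: scale down to the minimum, 0
    print(nodes_needed(3, 2, 0, 4))  # demand of 6 nodes is capped at the maximum, 4
    print(nodes_needed(1, 1, 2, 4))  # demand of 1 node is raised to the minimum, 2
```

Setting the minimum to zero in this toy model means an idle cluster holds no nodes, which is the intuition behind letting a managed cluster scale with submitted runs.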
