This article discusses Bayes' theorem and how the Naive Bayes classifier is used in text classification.

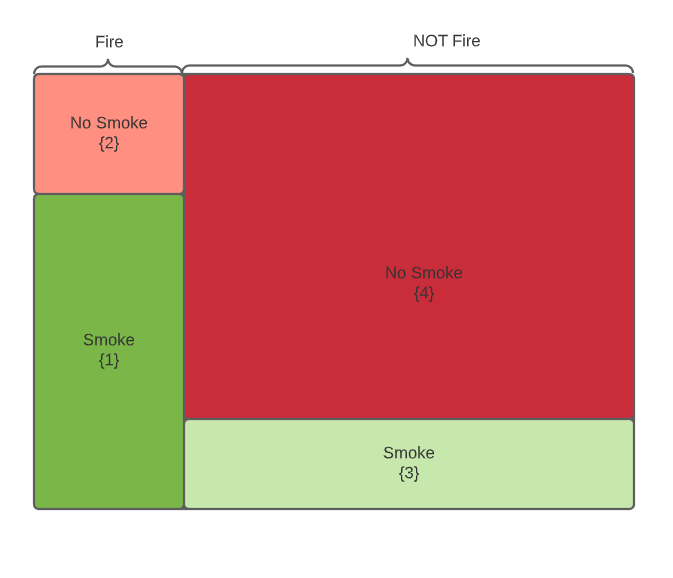

First, we will consider the problem of finding the probability of fire given smoke, assuming we are given certain information. To get to p(Fire | Smoke), read as "p(Fire given Smoke)", let us draw a sample space that shows all the possibilities:

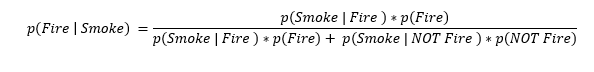

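To find p(Fire | Smoke), we only need the areas where smoke (the evidence) is present, {1} and {3}, because we are restricted to our evidence: GIVEN smoke. Within those areas, fire happens only in {1}, so p(Fire | Smoke) is

$$p(\text{Fire} \mid \text{Smoke}) = \frac{\{1\}}{\{1\} + \{3\}}$$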

or, equivalently, we can write it as

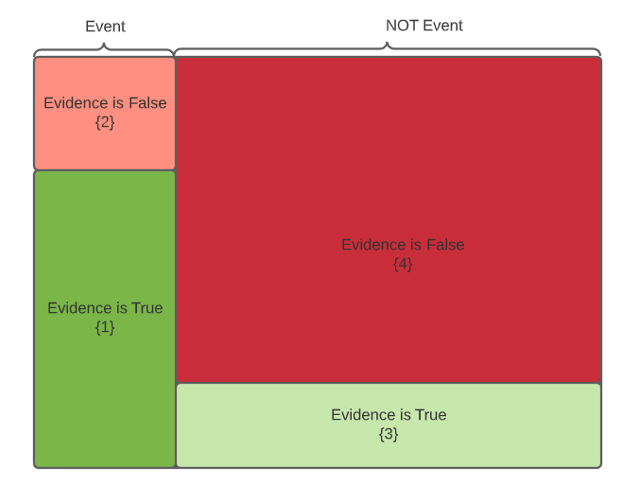
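A small numeric sketch may make the area argument concrete. The counts below are hypothetical (made up for illustration, not taken from the article): each one is the number of days, out of 100, that fall in a region of the sample space. Only areas {1} and {3} (the smoke-present regions) enter the calculation, and dividing the joint probability of fire and smoke by the probability of smoke gives the same answer.

```python
# Hypothetical counts of days in each region of the sample space
# (illustrative numbers only, not from the article).
counts = {
    ("fire", "smoke"): 9,         # area {1}: smoke present, fire happening
    ("fire", "no smoke"): 1,      # fire without smoke
    ("no fire", "smoke"): 21,     # area {3}: smoke present, no fire
    ("no fire", "no smoke"): 69,  # neither fire nor smoke
}
total = sum(counts.values())

# Restrict attention to the areas where the evidence (smoke) is present.
area_1 = counts[("fire", "smoke")]
area_3 = counts[("no fire", "smoke")]
p_fire_given_smoke = area_1 / (area_1 + area_3)

# Same result expressed with probabilities: p(Fire and Smoke) / p(Smoke).
p_fire_and_smoke = area_1 / total
p_smoke = (area_1 + area_3) / total
p_via_joint = p_fire_and_smoke / p_smoke

print(f"{p_fire_given_smoke:.2f}")  # 0.30
print(f"{p_via_joint:.2f}")         # 0.30
```

The regions without smoke (here 1 + 69 days) never appear in the result: conditioning on smoke throws them away.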

Generalizing this to any event given some evidence:

As discussed, there are two areas to consider:

1. Evidence true, event happening: area {1}.
2. Evidence true, event not happening: area {3}.

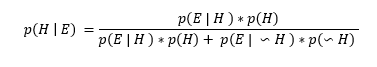

(or)

The same formula can be rewritten as below; the denominator simply adds up all the areas where the evidence is true.

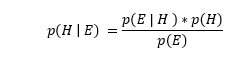

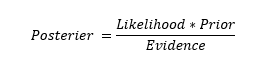
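As a minimal sketch, assuming each area is written as a likelihood times a prior (area {1} = p(Evidence | Event) p(Event), area {3} = p(Evidence | not Event) p(not Event)), the expanded formula can be computed directly. The function name `posterior` and the example probabilities are hypothetical, chosen only for illustration.

```python
def posterior(p_event: float, p_evidence_given_event: float,
              p_evidence_given_not_event: float) -> float:
    """Return p(Event | Evidence), with the denominator summing both
    evidence-true areas: {1} (event happens) and {3} (event does not)."""
    area_1 = p_evidence_given_event * p_event               # p(Evidence and Event)
    area_3 = p_evidence_given_not_event * (1.0 - p_event)   # p(Evidence and not Event)
    return area_1 / (area_1 + area_3)


# Hypothetical numbers: p(Fire) = 0.10, p(Smoke | Fire) = 0.90,
# p(Smoke | no Fire) = 0.20.
print(f"{posterior(0.10, 0.90, 0.20):.3f}")  # 0.333
```

Even though smoke is very likely when there is a fire, the posterior is only about one third, because the "smoke without fire" area {3} (0.18) is twice the size of area {1} (0.09).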

We have arrived at Bayes' theorem. Bayes' rule describes the probability of an event in light of evidence. More generally, the probability of an event based on prior knowledge can be written as:

$$p(\text{Event} \mid \text{Evidence}) = \frac{p(\text{Evidence} \mid \text{Event})\, p(\text{Event})}{p(\text{Evidence})}$$
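Bayes' rule, p(Event | Evidence) = p(Evidence | Event) p(Event) / p(Evidence), can also be checked by simulation. The sketch below is illustrative, not the article's method: it assumes a hypothetical fire/smoke model (the parameter values and the helper names `bayes` and `simulate` are made up), samples many days, keeps only the smoky ones, and compares the observed fraction of fires with the value from the formula.

```python
import random

# Hypothetical model parameters (illustrative only, not from the article).
P_FIRE = 0.10                 # prior p(Fire)
P_SMOKE_GIVEN_FIRE = 0.90     # likelihood p(Smoke | Fire)
P_SMOKE_GIVEN_NO_FIRE = 0.20  # p(Smoke | no Fire)


def bayes(p_evidence_given_event: float, p_event: float, p_evidence: float) -> float:
    """Bayes' rule: p(Event | Evidence) = p(Evidence | Event) p(Event) / p(Evidence)."""
    return p_evidence_given_event * p_event / p_evidence


def simulate(n: int, seed: int = 0) -> float:
    """Estimate p(Fire | Smoke) by sampling n days and keeping only smoky ones."""
    rng = random.Random(seed)
    smoke_days = 0
    fire_and_smoke_days = 0
    for _ in range(n):
        fire = rng.random() < P_FIRE
        p_smoke_today = P_SMOKE_GIVEN_FIRE if fire else P_SMOKE_GIVEN_NO_FIRE
        if rng.random() < p_smoke_today:  # the evidence (smoke) is observed
            smoke_days += 1
            if fire:
                fire_and_smoke_days += 1
    return fire_and_smoke_days / smoke_days


# p(Smoke) implied by the model: 0.9 * 0.1 + 0.2 * 0.9 = 0.27.
p_smoke = P_SMOKE_GIVEN_FIRE * P_FIRE + P_SMOKE_GIVEN_NO_FIRE * (1 - P_FIRE)
exact = bayes(P_SMOKE_GIVEN_FIRE, P_FIRE, p_smoke)
estimate = simulate(200_000)
print(f"Bayes' rule: {exact:.3f}")  # Bayes' rule: 0.333
print(f"Simulation:  {estimate:.3f}")
```

The simulated fraction varies slightly with the seed, but with this many samples it should land close to the exact value, which is the point: Bayes' rule is just a compact way of counting within the evidence-true part of the sample space.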

#text-classification #naive-bayes #machine-learning #ebay