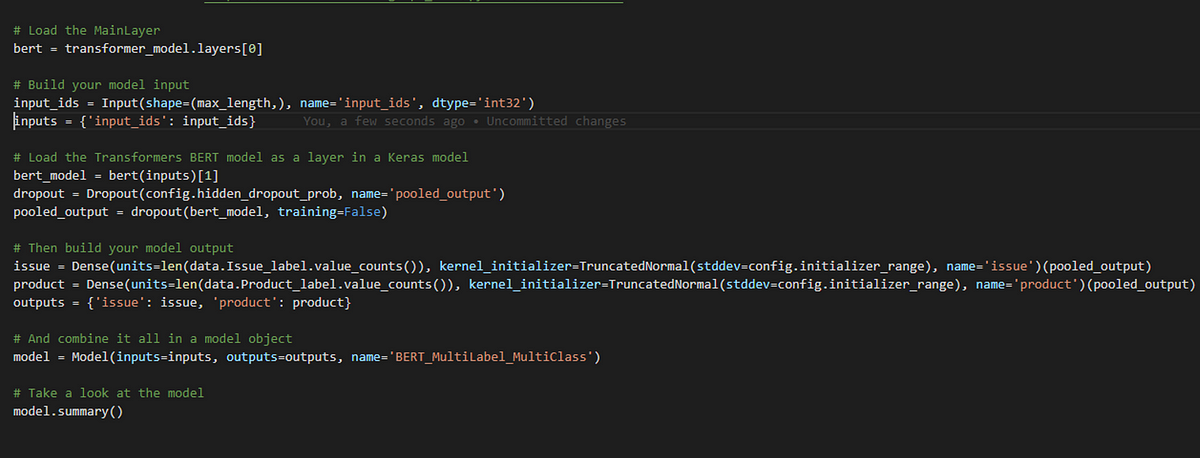

In this article, I’ll show how to do a multi-label, multi-class text classification task using the Hugging Face Transformers library and the TensorFlow Keras API. In doing so, you’ll learn how to use a BERT model from Transformers as a layer in a TensorFlow model built using the Keras API.

The internet is full of text classification articles, most of which combine bag-of-words (BoW) models with some kind of ML model, typically to solve a binary text classification problem. With the rise of NLP, and in particular BERT and other multilingual transformer-based models, more and more text classification problems can now be solved.

However, when it comes to solving a multi-label, multi-class text classification problem using Hugging Face Transformers, BERT, and TensorFlow Keras, the number of articles is indeed very limited, and I, for one, haven’t found any… Yet!

Therefore, with the help and inspiration of a great many blog posts, tutorials, and GitHub code snippets, all relating to BERT, multi-label classification in Keras, or other useful information, I will show you how to build a working model that solves exactly that problem.

And why use Hugging Face Transformers instead of Google’s own BERT solution? Because with Transformers it is extremely easy to switch between different models, be it BERT, ALBERT, XLNet, GPT-2, etc. This means that you more or less ‘just’ replace one model with another in your code.
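To make the ‘just replace one model’ point concrete, here is a minimal sketch of what such a swap could look like. It is my own illustration, not necessarily how the rest of the article loads its model: it assumes the `AutoTokenizer` and `TFAutoModel` classes from Transformers, a Transformers version that still ships TensorFlow support, and the public checkpoint names listed in the dictionary. The `CHECKPOINTS` table and the `load_encoder` helper are hypothetical names introduced only for this example.

```python
# Hypothetical lookup table: short model name -> checkpoint name on the Hugging Face Hub.
CHECKPOINTS = {
    "bert": "bert-base-uncased",
    "albert": "albert-base-v2",
    "xlnet": "xlnet-base-cased",
    "gpt2": "gpt2",
}


def load_encoder(name):
    """Return (tokenizer, model) for one of the names in CHECKPOINTS.

    Raises KeyError for an unknown name before any heavy library is loaded.
    """
    checkpoint = CHECKPOINTS[name]

    # Imported here so the lookup above fails fast on a typo.
    # Assumes a Transformers install with TensorFlow support.
    from transformers import AutoTokenizer, TFAutoModel

    # The Auto* classes pick the matching architecture from the checkpoint,
    # so the calling code stays the same whichever model is chosen.
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = TFAutoModel.from_pretrained(checkpoint)
    return tokenizer, model
```

With this in place, calling `load_encoder("albert")` instead of `load_encoder("bert")` is the whole swap, at least for loading (the first call downloads the weights). The ‘more or less’ still applies: GPT-2’s tokenizer, for instance, ships without a padding token, so some model-specific glue can remain.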

#tensorflow #transformers #nlp #keras #bert