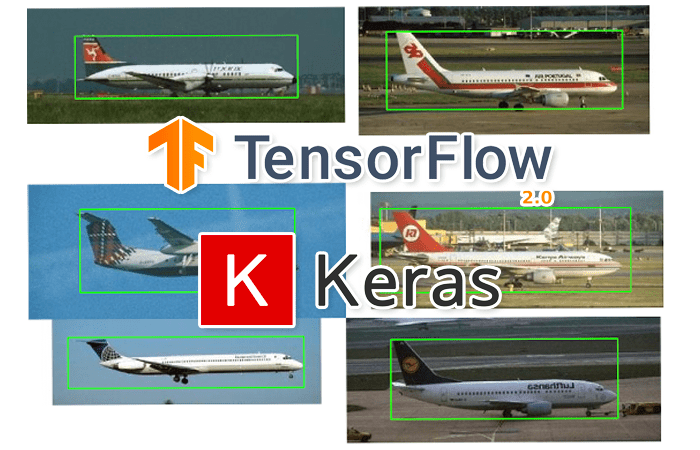

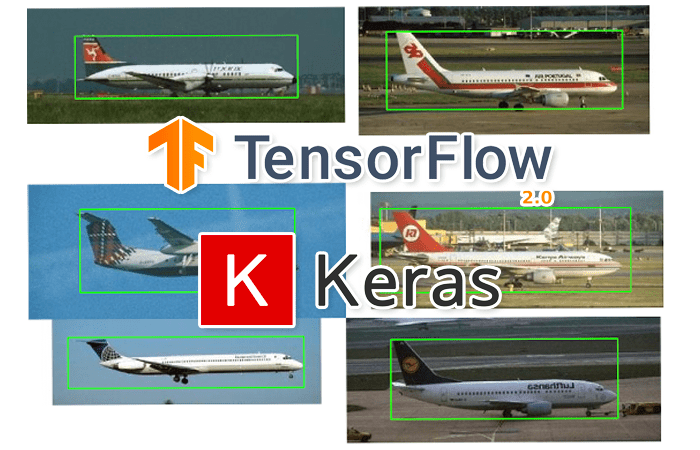

In this tutorial you will learn how to train a custom deep learning model to perform object detection via bounding box regression with Keras and TensorFlow.

Region proposal algorithms (e.g., Selective Search) examine an input image and identify where a potential object could be. Keep in mind that they have no idea whether an object actually exists at a given location, only that the area of the image looks interesting and warrants further inspection.

In the classic implementation of Girshick et al.’s R-CNN, each region proposal was passed through a pre-trained CNN (minus the fully-connected layer head) to extract features, which were then fed into an SVM for final classification. In this implementation, the location of the region proposal was treated as the bounding box, while the SVM produced the class label for that region.

Essentially, the original R-CNN architecture didn’t “learn” how to detect bounding boxes: it was not end-to-end trainable (later iterations, such as Faster R-CNN, were end-to-end trainable).

But that raises the questions:

- What if we wanted to train an end-to-end object detector?

- Is it possible to construct a CNN architecture that outputs bounding box coordinates, so that we can train the model to make better object detection predictions?

- And if so, how do we go about training such a model?

The key to all those questions lies in the concept of bounding box regression, which is exactly what we’ll be covering today. By the end of this tutorial, you’ll have an end-to-end trainable object detector capable of producing both bounding box predictions and class label predictions for objects in an image.
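To make the idea concrete before diving in, here is a minimal sketch of what such a network could look like in Keras. It is not the model built in this tutorial: the tiny convolutional backbone, the 64x64 input size, the three-class setup, the `build_detector` helper, and the layer names are all assumptions chosen for illustration. It also assumes the box coordinates are scaled to the range [0, 1], which is why the regression head uses a sigmoid activation.

```python
import numpy as np
from tensorflow.keras import Model
from tensorflow.keras.layers import Conv2D, Dense, Flatten, Input, MaxPooling2D


def build_detector(input_shape=(64, 64, 3), num_classes=3):
    """Hypothetical two-headed CNN: one head regresses a box, one classifies."""
    inputs = Input(shape=input_shape)

    # Small illustrative backbone (an assumption, not a pre-trained network)
    x = Conv2D(16, (3, 3), activation="relu", padding="same")(inputs)
    x = MaxPooling2D((2, 2))(x)
    x = Conv2D(32, (3, 3), activation="relu", padding="same")(x)
    x = MaxPooling2D((2, 2))(x)
    x = Flatten()(x)
    x = Dense(64, activation="relu")(x)

    # Regression head: four values for the box corners, assumed to be
    # (startX, startY, endX, endY) scaled to [0, 1], hence the sigmoid
    bbox = Dense(4, activation="sigmoid", name="bounding_box")(x)

    # Classification head: one probability per class
    label = Dense(num_classes, activation="softmax", name="class_label")(x)

    model = Model(inputs=inputs, outputs=[bbox, label])

    # One loss per output, in the same order as the outputs list:
    # mean squared error for the box, cross-entropy for the class label
    model.compile(optimizer="adam", loss=["mse", "categorical_crossentropy"])
    return model


model = build_detector()

# Run two random "images" through the untrained network to inspect the outputs
images = np.random.rand(2, 64, 64, 3).astype("float32")
pred_boxes, pred_labels = model.predict(images, verbose=0)
print(pred_boxes.shape, pred_labels.shape)
```

Because both heads share the same backbone and each has its own loss, a single call to `model.fit` would update the whole network from box and label targets together, which is what makes this kind of detector end-to-end trainable.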

#keras #tensorflow #deep learning