Hello everyone! Before starting part 2 of implementing logic gates using neural networks, you may want to go through part 1 first.

In part 1, we figured out that we have two input neurons, i.e., an x vector with values x1 and x2, plus a constant 1 for the bias. The inputs x1, x2, and 1 are multiplied by their respective weights W1, W2, and W0. The products are then fed to the summation neuron, which gives the summed value Z = W0 + W1x1 + W2x2.

Now, this value Z is fed to a neuron with a non-linear function (sigmoid in our case) that scales the output to a desirable range. The sigmoid output is then thresholded: the prediction ŷ is 0 if the sigmoid output is less than 0.5 and 1 if it is greater than 0.5. Since the sigmoid of 0 is exactly 0.5, this is the same as asking whether Z is below or above 0. Our main aim is to find the weights, or the weight vector, that make the system act as a particular gate.
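The forward pass described above can be sketched in a few lines of Python. This is only a minimal illustration: the function names `sigmoid` and `neuron` are my own, and returning 0 when the sigmoid output is exactly 0.5 is an assumption, since that boundary case is not defined here.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x1, x2, w0, w1, w2):
    """Weighted sum with bias, then sigmoid, then a 0.5 threshold."""
    z = w0 + w1 * x1 + w2 * x2   # summation neuron: Z = W0 + W1x1 + W2x2
    activation = sigmoid(z)      # scaled to (0, 1)
    # Assumption: an activation of exactly 0.5 is classified as 0.
    return 1 if activation > 0.5 else 0

print(sigmoid(0))  # 0.5, so thresholding at 0.5 is the same as checking the sign of Z
```

Because the sigmoid is increasing and passes through 0.5 at Z = 0, every condition on the output in the rest of the article can be written as a simple inequality on Z.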

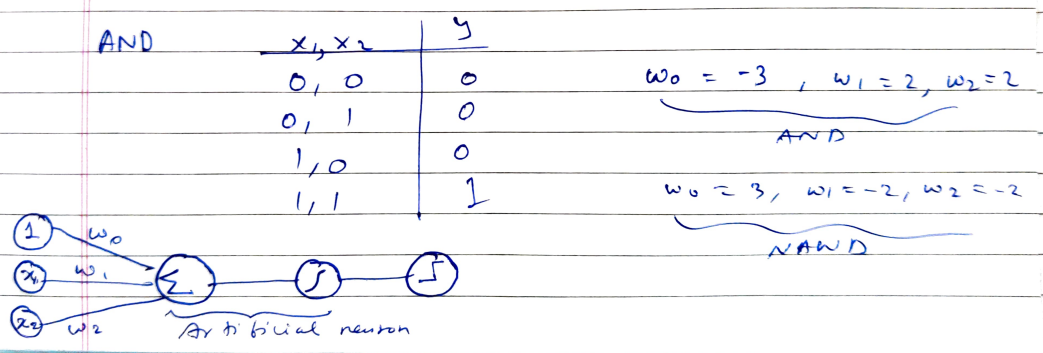

Implementing AND gate

The AND gate operation is a simple multiplication of the inputs. If any of the inputs is 0, the output is 0. To get 1 as the output, both inputs must be 1. The truth table below conveys the same information.

Truth Table of AND gate and the values of weights that make the system act as AND and NAND gate, Image by Author

As we have 4 choices of input, the weights must be such that the condition of AND gate is satisfied for all the input points.

(0,0) case

Consider a situation in which the input or the x vector is (0,0). The value of Z, in that case, will be nothing but W0. Now, W0 has to be less than 0 so that the sigmoid of Z is less than 0.5, the output ŷ is 0, and the definition of the AND gate is satisfied. If W0 is above 0, the output after Z has passed through the sigmoid function and the threshold will be 1, which violates the AND gate condition. Hence, we can say with certainty that W0 has to be a negative value. But what value of W0? Keep reading…

(0,1) case

Now, consider a situation in which the input or the x vector is (0,1). Here the value of Z will be W0+0+W2*1. As the input to the sigmoid function, Z should be less than 0 so that the sigmoid output is less than 0.5 and is classified as 0. Hence, W0+W2<0. If we take W0 as -3 (remember, W0 has to be negative) and W2 as +2, the result is -3+2 = -1, which satisfies the above inequality and is consistent with the condition of the AND gate.

(1,0) case

Similarly, for the (1,0) case, Z will be W0+W1*1+0, so we need W0+W1<0. With W0 as -3, W1 can be +2, which again gives -1. Remember, you can take any values for the weights W0, W1, and W2 as long as the inequalities are preserved.

(1,1) case

In this case, the input or the x vector is (1,1). The value of Z, in that case, will be nothing but W0+W1+W2. Now, Z has to be greater than 0 so that the sigmoid output is above 0.5, the output is 1, and the definition of the AND gate is satisfied. From the previous scenarios, we found the values of W0, W1, and W2 to be -3, 2, and 2 respectively. Placing these values in the Z equation yields -3+2+2, which is 1 and greater than 0. This will, therefore, be classified as 1 after passing through the sigmoid function.
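To double-check the four cases together, here is a small sketch that runs the weights -3, 2, 2 over the whole truth table. The helper name `and_gate` is hypothetical, and treating a sigmoid output above 0.5 as 1 (and anything else as 0) is the same thresholding assumption used throughout.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Weights found in the four cases above: W0 (bias), W1, W2
W0, W1, W2 = -3, 2, 2

def and_gate(x1, x2):
    z = W0 + W1 * x1 + W2 * x2          # Z for this input pair
    return 1 if sigmoid(z) > 0.5 else 0  # threshold the sigmoid output

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    z = W0 + W1 * x1 + W2 * x2
    print(f"x=({x1},{x2})  Z={z:+d}  y_hat={and_gate(x1, x2)}")
```

The Z values come out as -3, -1, -1, and +1, so only the (1,1) input lands on the positive side and is classified as 1.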

A final note on AND and NAND implementation

The line separating the above four points is, therefore, given by the equation W0+W1x1+W2x2=0, where W0 is -3 and both W1 and W2 are +2. Substituting these values gives -3+2x1+2x2=0, so the line of separation is x1+x2=3/2. The implementation of the NAND gate is similar, with just the signs of the weights flipped: W0 equal to 3, and W1 and W2 equal to -2. Negating every weight negates Z, which inverts the output for each input.
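As a rough check of this final note, the sketch below evaluates both weight sets on all four inputs and also tests which side of the line x1+x2=3/2 each point falls on. The names `gate`, `AND_WEIGHTS`, and `NAND_WEIGHTS` are illustrative, not from part 1.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gate(weights, x1, x2):
    """Single neuron: weights = (W0, W1, W2), output thresholded at 0.5."""
    w0, w1, w2 = weights
    z = w0 + w1 * x1 + w2 * x2
    return 1 if sigmoid(z) > 0.5 else 0

AND_WEIGHTS = (-3, 2, 2)
NAND_WEIGHTS = (3, -2, -2)   # every AND weight negated

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

and_outputs = [gate(AND_WEIGHTS, a, b) for a, b in INPUTS]
nand_outputs = [gate(NAND_WEIGHTS, a, b) for a, b in INPUTS]
above_line = [a + b > 1.5 for a, b in INPUTS]  # side of x1 + x2 = 3/2

print(and_outputs)   # [0, 0, 0, 1]
print(nand_outputs)  # [1, 1, 1, 0]
print(above_line)    # [False, False, False, True]
```

Only (1,1) lies above the line, which matches the single 1 in the AND column, and the NAND column is its exact complement.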
