Table of Contents

1. Overview

2. Background

3. Dataset and Data Preparation

4.1 Biased Model: A Model with Gender and Name Origin Biases

4.2 Replacing Names in Training Data and at Inference Time

4.3 Augmenting Training Data with Additional Gender Information

5. Analysis

6. Conclusion

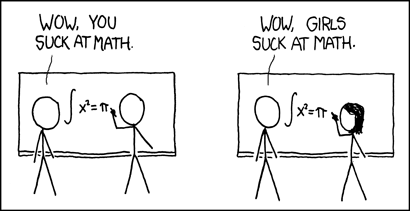

7. References_This article took a long time to prepare. Thank you to Professor Miodrag Bolic from the University of Ottawa for reviewing this article and providing valuable feedback._1. OverviewSmall business classification means looking at a business name and putting a label onto it. This is an important task within many applications where you want to treat similar clients similarly, and it is something humans do all the time. For example, take the text of a company name as your input (e.g., “Daniel’s Roofing Inc”) and predict the type of the business (e.g., “Roofer”). There are business applications for this technology. For example, take a list of invoices and group them into holiday emails. What customers are similar to each other? You could use invoice amounts as an indicator of business type, but the name of the business is probably very helpful for you to understand what the business does with you, and therefore how they are related to what you do, and how you want to message them. In this article, small business classification using only the business name is the goal.In this article, I developed a FastText model for predicting which one of 66 business types a company falls into, based only upon the business name. We will see later on that training a machine learning model on a dataset of small business names and their business type will introduce gender and geographic origin biases.
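To make the classification setup concrete, here is a minimal sketch of how labelled business names could be written in the text format that fastText's supervised mode reads, where each line starts with a `__label__`-prefixed label followed by the text. The function name, the preprocessing choices (lowercasing, splitting off punctuation), and the “Restaurant” label are assumptions for illustration and are not taken from the pipeline used in this article.

```python
import re


def to_fasttext_line(business_name: str, business_type: str) -> str:
    """Format one labelled example as '__label__<type> <text>'."""
    # A label must be a single token, so spaces inside it become underscores.
    label = "__label__" + re.sub(r"\s+", "_", business_type.strip())
    # Assumed preprocessing: lowercase, and pad punctuation (except
    # apostrophes) with spaces so "inc." and "inc" share a token.
    text = re.sub(r"([^\w\s'’])", r" \1 ", business_name.lower())
    text = re.sub(r"\s+", " ", text).strip()
    return f"{label} {text}"


# "Roofer" comes from the example above; "Restaurant" is a hypothetical label.
examples = [("Daniel's Roofing Inc.", "Roofer"), ("Bob's Diner", "Restaurant")]
training_lines = [to_fasttext_line(name, kind) for name, kind in examples]
```

A file containing such lines could then be passed to `fasttext.train_supervised(input=...)` from the official Python package, although the hyperparameters used for the model in this article are not shown here.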

Two approaches to removing the observed bias are explored in this article:

- Replacing given names with a placeholder token. The bias in the model was reduced by hiding given names from the model, but the bias reduction caused classification performance to drop.
- Augmenting the training data with gender-swapped examples. For example, Bob’s Diner becomes Lisa’s Diner. Augmentation of the training data with gender-swapped samples proved less effective at bias reduction than the name hiding approach on the evaluated dataset.
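The two approaches above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tiny name dictionary, the `_name_` placeholder token, and the function names are hypothetical, and a real system would need a far larger name lexicon than the one shown.

```python
import re

# Hypothetical name pairs for illustration only.
MALE_TO_FEMALE = {"bob": "lisa", "daniel": "maria"}
SWAP = {**MALE_TO_FEMALE, **{f: m for m, f in MALE_TO_FEMALE.items()}}
PLACEHOLDER = "_name_"  # hypothetical placeholder token

# Split text into alternating runs of letters and non-letters so that
# joining the pieces reproduces the (lowercased) input exactly.
PIECES = re.compile(r"[a-z]+|[^a-z]+")


def hide_names(business_name: str) -> str:
    """Approach 1: replace every known given name with a placeholder."""
    pieces = PIECES.findall(business_name.lower())
    return "".join(PLACEHOLDER if p in SWAP else p for p in pieces)


def gender_swap(business_name: str) -> str:
    """Approach 2 helper: swap each known given name for its counterpart."""
    pieces = PIECES.findall(business_name.lower())
    return "".join(SWAP.get(p, p) for p in pieces)


def augment(examples):
    """Approach 2: keep every example and add a gender-swapped copy
    whenever swapping actually changes the name."""
    augmented = [(name.lower(), label) for name, label in examples]
    for name, label in examples:
        swapped = gender_swap(name)
        if swapped != name.lower():
            augmented.append((swapped, label))
    return augmented
```

Name hiding would be applied both to the training data and to inputs at inference time, whereas augmentation only changes the training data.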

2. Background

Our initial goal is to observe prediction biases related to given name gender and geographic origin. Next, we work to remove the bias.

It is common for a business to know the name of a small business counterparty but not the archetype of that business. Small businesses present a unique challenge for customer segmentation, because they may not be listed in the databases or classification systems for large companies that include metadata such as company type or classification code. Beyond the sales and marketing functions, customer segmentation can also be useful for customizing service delivery to clients. The type of a company can also be useful in forecasting sales, and in many other applications. The mix of company types in a client list can also reveal trends for client segments. Finally, classifying the type of a business may expose useful equity trading signals [1] [2]. The analysis of equity trading models often includes industry classification. For example, the Global Industry Classification Standard (GICS) [3] was applied to assess trading model performance across various industries in [4] and [5].

Existing industry classification systems tend not to cover small businesses well. The criteria for inclusion in widely used industry classification systems are biased toward big businesses, and this bias seeps into the training data (i.e., the names of the companies) as a bias toward large entities. I imagine the bias is toward companies with high revenue and headcount. For example, a model trained on corporation names from the Russell 3000 index [6] will not be properly prepared at inference time to predict the type of business conducted at “Daniel’s Barber Shoppe”. Neither the business type (e.g., barber) nor the naming conventions (e.g., Shoppe) of small businesses are reflected in the names of larger corporations such as the members of the Russell 3000.

There are several human-curated industry classification systems, including the Standard Industrial Classification (SIC) [9], the North American Industry Classification System (NAICS) [10], the Global Industry Classification Standard (GICS) [3], the Industry Classification Benchmark (ICB) [11], and others. One comparison of these classification schemes revealed that “the GICS advantage is consistent from year to year and is most pronounced among large firms” [12]. This reinforces the point that these classification systems are better at categorizing large firms than small businesses.

The labels within industry classification systems provide an opportunity for supervised learning. A complementary approach is to apply unsupervised learning to model the data. For example, clustering has been applied to industry classification in [13].

Measuring **bias** in a text classifier requires a validation dataset that is not a subset or split of the original dataset. The second dataset is required because, without it, the testing and training data will likely share the same data distribution, hiding bias from the assessment [14]. The purpose of the second dataset is to evaluate the classifier in out-of-distribution conditions it was not specifically trained for.

Gender bias in word embedding models can be reduced by augmenting the training data with gender-swapped samples [15], by fine-tuning with a larger, less biased dataset [16] or other weight adjustment techniques [17], or by removing gender-specific factors from the model [18]. Applying several of these techniques in combination may yield the best results [16]. In this work, two techniques were evaluated: augmenting the training data with gender-swapped samples, and hiding gender-specific factors from the model.
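One simple way to probe a classifier outside its training distribution is to build synthetic business names that differ only in the given name and count how often the prediction changes. The sketch below is an assumption about how such a probe could look, not the evaluation procedure or metric used in this article; `name_sensitivity`, the templates, and the deliberately biased `toy_predict` stand-in are all hypothetical.

```python
from typing import Callable, Iterable, Tuple


def name_sensitivity(
    predict: Callable[[str], str],
    templates: Iterable[str],
    name_pairs: Iterable[Tuple[str, str]],
) -> float:
    """Fraction of probes whose predicted label changes when only the
    given name in the business name is swapped."""
    pairs = list(name_pairs)
    changed = total = 0
    for template in templates:
        for name_a, name_b in pairs:
            total += 1
            label_a = predict(template.format(name=name_a))
            label_b = predict(template.format(name=name_b))
            if label_a != label_b:
                changed += 1
    return changed / total if total else 0.0


def toy_predict(text: str) -> str:
    # Deliberately biased stand-in for a trained model: the label
    # depends on the given name rather than on the business words.
    return "Hair Salon" if "lisa" in text.lower() else "Roofer"


score = name_sensitivity(
    toy_predict,
    templates=["{name}'s Roofing Inc", "{name}'s Diner"],
    name_pairs=[("Bob", "Lisa")],
)
```

A score near zero would suggest the predictions do not depend on the given name for these probes, while a score near one would indicate the name alone is driving the label.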
