Copying files using Azure Data Factory is straightforward; however, it gets tricky if the files are being hosted on a third-party web server, and the only way to copy them is by using their URL. In this article, we look at an innovative use of Data factory activities to generate the URLs on the fly to fetch the content over HTTP and store it in our storage account for further processing.

Disclaimer and Terms of use

Please read our terms of use before proceeding with this article.

Caution

Microsoft Azure is a paid service and following this article can cause financial liability to you or your organization.

Prerequisites

1. An active Microsoft Azure subscription.

2. Azure Data Factory instance

3. Azure Data Lake Storage Gen2 storage

If you don’t have prerequisites set up yet, refer to our previous article for instructions on how to create them

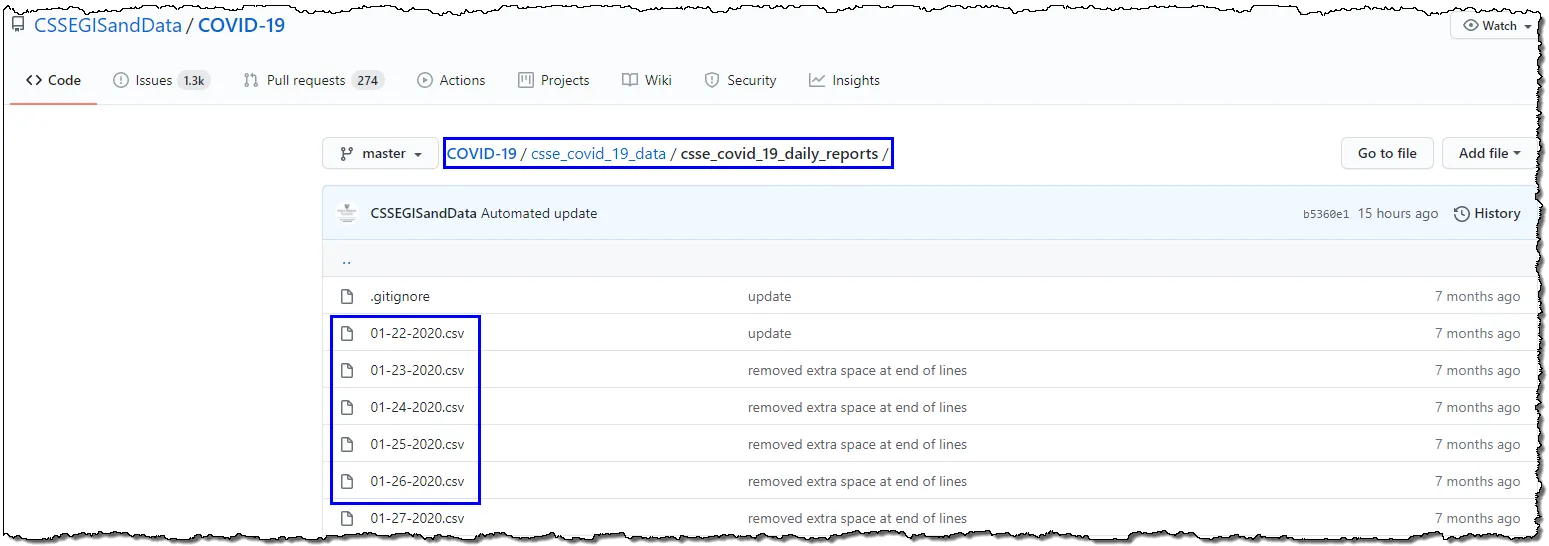

In this article, we will set up our Data factory to fetch publicly available CSV files from the COVID-19 repository at GitHub operated by Johns Hopkins University Center for Systems Science and Engineering (JHU CSSE). We’re interested in the data residing within csse_covid_19_data/csse_covid_19_daily_reports. This folder contains daily case reports with new reports added once daily since January 22, 2020. Files follow a consistent naming convention of MM-DD-YYYY.csv

A typical way of ingesting these files for our project is to download the repository as a zip from GitHub, extracting the files on your client machine, and upload the files to our storage account manually. On the other hand, we will have to upload a new file daily if we want to keep our Power BI report up to date with COVID-19 data. We want to find a solution to automate the ingesting task to keep our data up to date without additional manual efforts. We can achieve this using the Azure Data Factory. Our thought process should be:

- Create a pipeline to fetch files from GitHub and store it in our storage account

- We can fetch only one file at a time using its **Raw **URL (open a file at the GitHub URL and click on Raw to open the file without GitHub’s UI): https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_daily_reports/01-22-2020.csv

#pipeline #csv-file #url-pattern #azure-data-factory #data-science