A very special thanks to _Dr. Stephanie Shen_ for sharing insightful thoughts for this article.

Data quality (DQ) is a generic term that means different things to different people. Academics and practitioners define it in many ways, but in essence it is the initiative to make an organization's data fit for purpose. The bare term ‘Data Quality’, however, often confuses executives who are launching a data quality program. They start from the assumption that it will be the primary responsibility of a single DQ IT team (DQIT, also known as centralized DQ), with some involvement from the business in the capacity of ‘Data Stewards’, to make the organization's data consumable for users. This team is accountable to the Data Governance Committee (DGC), which drives the entire DQ program horizontally, cutting across the business units.

For all the good intentions of the DGC, this notion sows the seed of an unscalable architecture for the data quality program, one that eventually hits bottlenecks: limited resources and funding, technical roadblocks, compliance burden, and loss of sponsors' interest. As the quote by Andrew Smith goes, “People fear what they don’t understand and hate what they can’t conquer.” The ‘Data quality’ program therefore needs the correct terminology in order to start right, win the trust of stakeholders, and grow into an organization-wide implementation.

Challenges of a Data Quality Program

1. Management challenge

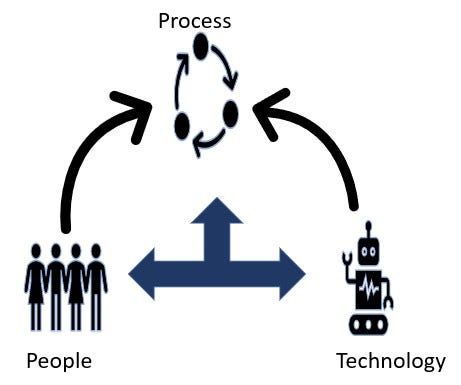

No matter how big or small the organization is, budget and funding are always limited, and executives need to invest judiciously in acquiring technology, setting up processes, and hiring and building a team of DQ developers. A centralized DQ approach can be effective in a small or mid-size organization. In a multinational organization, however, implementing a data quality initiative through one centralized DQ team is an attempt to ‘boil the ocean’. It eventually ends with stressed-out DQ IT engineers who are expected to develop data quality rules with little understanding of the business application's data. The number of people allocated to any project is always finite, and fixing an entire organization's data with a handful of developers and a limited budget is over-ambitious.

2. Technology challenge

Every source application applies several layers of transformation logic while processing its data, which is finally stored in highly normalized form at the database level. Bringing the data out of these data models is not straightforward. In legacy systems such as mainframes the situation is worse, and DQIT is sometimes burdened with the unrealistic expectation of interpreting COBOL programs to understand the data flow before it can even attempt to develop a DQ rule.

In the banking and financial industry, banks deal mostly with data that typically contains large numbers of business transactions and records, which must be accessible instantly for a variety of banking functions from multiple business applications across the banking network [3]. In these scenarios, implementing data quality with a centralized data quality process is certainly challenging.

DQIT spends the majority of its development time with the source IT developers, trying to understand the intricacies of business application data that has little meaning to them, before any technical development of a DQ rule can begin in one of the data quality tools such as Informatica Data Quality (IDQ), Talend, SAP, IBM, and others. On the other side, the source IT developers' primary skill is a programming language such as Java, .NET, or PHP; with no background in modern DQ tools, they often struggle to prepare and provide the data in a format the DQ tool can consume.

This technical challenge increases rule development time significantly and puts more pressure on the DQIT group to deliver. Additionally, senior executives find it highly confusing that a simple DQ rule takes 5 days to develop when they have bought the best DQ tool on the market. The confusion is amplified when IT is unable to explain the real challenge, with the result that a process failure starts to give the impression of a tool or technology failure.
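
To make the point about a "simple DQ rule" concrete, here is a minimal sketch in plain Python rather than in any of the DQ tools named above. The record layout, the field name `email`, and the helpers `is_complete`, `is_valid_email`, and `run_rule` are all hypothetical and chosen only for illustration. The rule logic itself is a few lines; what it cannot show is the time spent finding out which field to check and what counts as valid in the source application.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, Mapping, Optional

# Hypothetical, deliberately loose pattern; a real rule would come from the business.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


@dataclass
class RuleResult:
    rule_name: str
    checked: int
    failed: int

    @property
    def pass_rate(self) -> float:
        # An empty data set trivially passes.
        if self.checked == 0:
            return 1.0
        return (self.checked - self.failed) / self.checked


def is_complete(value: Optional[str]) -> bool:
    """Completeness: the value is present and not blank."""
    return value is not None and value.strip() != ""


def is_valid_email(value: Optional[str]) -> bool:
    """Validity: the value is present and matches the assumed pattern."""
    return is_complete(value) and EMAIL_PATTERN.fullmatch(value.strip()) is not None


def run_rule(
    rule_name: str,
    records: Iterable[Mapping[str, Optional[str]]],
    field: str,
    check: Callable[[Optional[str]], bool],
) -> RuleResult:
    """Apply one check to one field of every record and count failures."""
    checked = 0
    failed = 0
    for record in records:
        checked += 1
        if not check(record.get(field)):
            failed += 1
    return RuleResult(rule_name, checked, failed)


# Hypothetical sample data standing in for an extract from a source application.
customers = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C2", "email": ""},
    {"customer_id": "C3", "email": None},
    {"customer_id": "C4", "email": "not-an-email"},
]

completeness = run_rule("email_completeness", customers, "email", is_complete)
validity = run_rule("email_validity", customers, "email", is_valid_email)

for result in (completeness, validity):
    print(f"{result.rule_name}: {result.failed}/{result.checked} failed, "
          f"pass rate {result.pass_rate:.0%}")
```

In this sketch the code is the small part of the work. Deciding that `email` is the right field, where it lives in a normalized schema, and what the business considers valid is the part that consumes the days described above.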
