Sometimes, you should

Pandas is one of the most useful tools a data scientist can use. It provides several handy functionalities to extract information. Unfortunately, using Pandas requires data to be loaded into DataFrames, which aren’t great for handling massive quantities of data — quantities of data common at a company where you would need such data manipulation skills.

If you were to download a complete, massive dataset — perhaps store it as a .csv file — you would need to spend at least several minutes waiting for the file to complete download and be converted into a DataFrame by Pandas. On top of this, any operations you perform will be slow because Pandas has trouble dealing with these massive amounts of data and needs to run through every row.

A better solution? First query the database with SQL — the native, efficient processing language it likely runs on — then download a reduced dataset, if necessary, and use Pandas to operate on the smaller-scale tables it is designed to work on. While SQL is very efficient with large databases, it cannot replace the value of Panda’s plotting integration with other libraries and with the Python language in general.

In the case where we only need to find numerical answers, however, like the average cost of an item or how many employees receive commission but not an hourly wage above $40, there is (usually) no need to touch Pandas and a Python environment at all.

While most people’s knowledge of SQL stops at SELECT * FROM table WHERE id = ‘Bob’, one would be surprised by the functionalities that SQL offers.

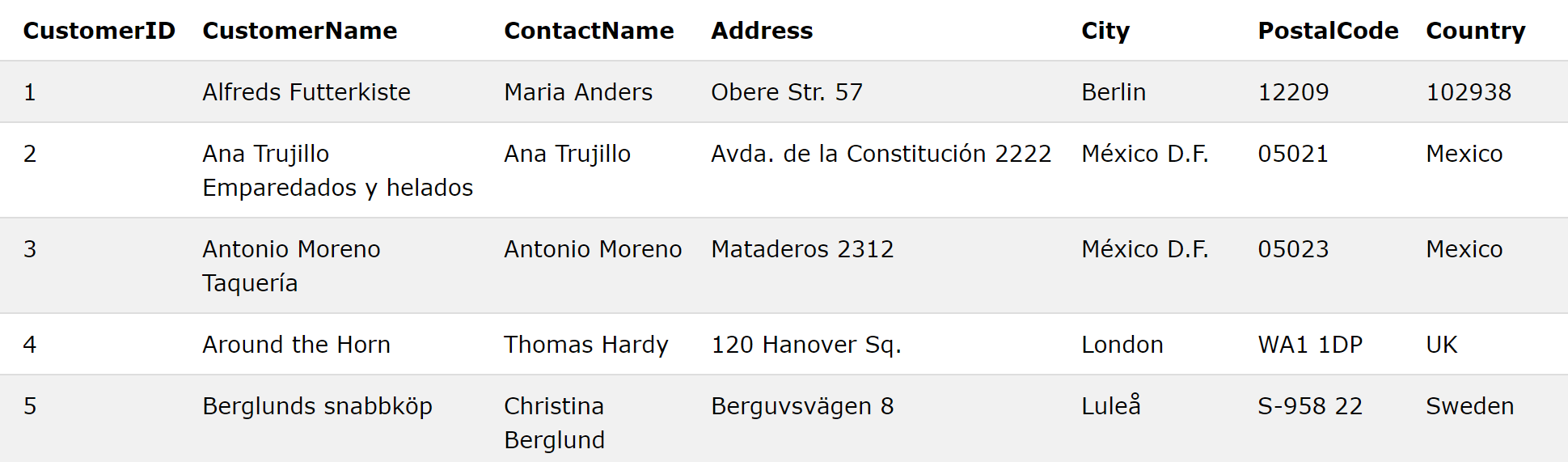

As an example, we will work on the SQL Tryit Editor database provided by w3schools. This site allows you to run SQL queries on a fictional database. The table Customers (you can see the full list of tables on the right panel) has 7 columns with numerical and text data:

#database #data-analysis #data-science #machine-learning #python