This guide will help you get up and running with containers that use a single (local) GPU directly on a Linux workstation.

_Note: It does not cover the steps needed to connect to multiple GPUs over a network, nor will it help users of Windows or macOS (the toolkit mentioned is incompatible with those operating systems)._

What you will need

- The proprietary graphics driver installed on your host - This would already have been needed with or without containers. However, installing the additional CUDA libraries on the host won't be necessary, as they will be built into your images.

- The NVIDIA Container Toolkit - The Linux packages here are compatible with both Docker and Podman.

- A CUDA-enabled image - Properly integrating Docker/Podman with your GPU requires some tweaks to the environment inside the container, along with the correct packages. Start with an official build to ensure that the first two items are working properly.
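The first two items can be sanity-checked from the host before pulling any image. This is a minimal sketch, assuming that the toolkit's `nvidia-ctk` CLI is a reasonable way to detect the toolkit and that your container runtime is on your PATH; `check_tool` is a hypothetical helper name, not part of any package.

```shell
# check_tool is a hypothetical helper: report whether a command is on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    printf 'ok: %s\n' "$1"
  else
    printf 'missing: %s\n' "$1"
  fi
}

# nvidia-smi ships with the proprietary driver; nvidia-ctk ships with the
# NVIDIA Container Toolkit. Only one of docker/podman needs to be present.
for tool in nvidia-smi nvidia-ctk docker podman; do
  check_tool "$tool"
done
```

If `nvidia-smi` is reported missing on the host, sort out the driver install first, since the container relies on the host's driver.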

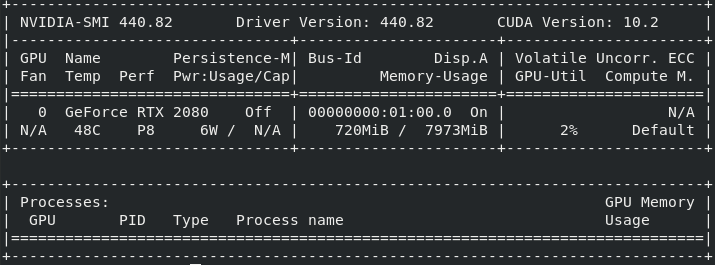

Assuming that everything is set up correctly, calling nvidia-smi from within your container will show output similar to the image above in your terminal window.

If the image you are testing has a default command that prevents you from getting to bash immediately when you run it, you can override that command with an additional argument placed after the image name. To call nvidia-smi this way, pass its full path, which is typically /usr/bin/nvidia-smi.
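As a rough sketch of that override, the snippet below prints a test command for each runtime so you can inspect it before running it. Several things here are assumptions rather than part of this guide: the image tag (check Docker Hub for a current `nvidia/cuda` tag), the hypothetical helper name `gpu_test_cmd`, and, for Podman, that a CDI spec has already been generated with `nvidia-ctk cdi generate`. Docker's `--gpus all` flag and Podman's `--device nvidia.com/gpu=all` are the usual ways to request the GPU, but your setup may differ.

```shell
# Assumed image tag; substitute whichever official CUDA image you are testing.
IMAGE="${IMAGE:-docker.io/nvidia/cuda:12.4.1-base-ubuntu22.04}"

# gpu_test_cmd is a hypothetical helper: print the run command for a runtime.
# The argument after the image name overrides the image's default command.
gpu_test_cmd() {
  case "$1" in
    docker)
      printf 'docker run --rm --gpus all %s /usr/bin/nvidia-smi\n' "$IMAGE"
      ;;
    podman)
      # Assumes a CDI spec exists for the GPU on this host.
      printf 'podman run --rm --device nvidia.com/gpu=all %s /usr/bin/nvidia-smi\n' "$IMAGE"
      ;;
    *)
      printf 'unknown runtime: %s\n' "$1" >&2
      return 1
      ;;
  esac
}

gpu_test_cmd docker
gpu_test_cmd podman
```

Copy the printed line for your runtime into the terminal to run it. On SELinux-enabled hosts, Podman may additionally need `--security-opt=label=disable`.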

#cuda #linux #podman #nvidia #docker