In this post, you will learn the concepts of Adaline (ADAptive LInear NEuron), a machine learning algorithm, along with a Python example. Like the Perceptron, Adaline is important to understand because it forms a foundation for learning neural networks. The concepts of Perceptron and Adaline are useful in understanding how gradient descent can be used to learn the weights which, when combined with the input signals, are used to make predictions based on the output of a unit step function.

Here are the topics covered in this post in relation to the Adaline algorithm and its Python implementation:

- What’s Adaline?

- Adaline Python implementation

- Model trained using Adaline implementation

What’s Adaline?

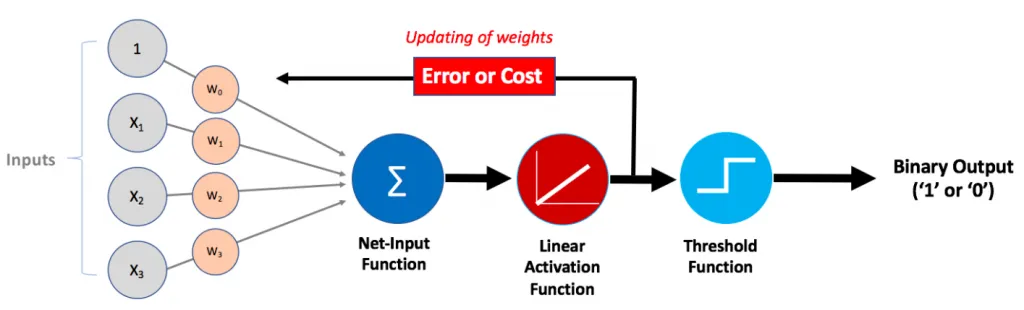

Adaline, like Perceptron, also mimics a neuron in the human brain. You may want to read one of my related posts on Perceptron — Perceptron explained using Python example. Adaline is also called as **single-layer neural network. **Here is the diagram of Adaline:

The following describes how the Adaline machine learning algorithm works, based on the above diagram:

- Net input function - Combination of input signals of different strength (weights): Input signals of different strength (weights) are combined (added) in order to be fed into the activation function. The combined input, or sum of weighted inputs, is also called the **net input**. Pay attention to the net input function shown in the above diagram.

- Net input is fed into the activation function (linear): The activation function of Adaline is an identity function. If Z is the net input, the identity function is g(Z) = Z. The activation function is called a linear activation function because its output is a linear combination of the input signals and weights.

- Activation function output is used to learn weights: The output of the activation function (the same as the net input, owing to the identity function) is used to calculate the change in the weights associated with the different inputs, and the weights are then updated with these changes. Pay attention to the feedback loop shown with the text **Error or cost**. Recall that in Perceptron, the activation function is a unit step function and the output is binary (1 or 0) based on whether or not the net input value is greater than or equal to zero (0).

- Threshold function - Binary prediction (1 or 0) based on unit step function: The prediction made by the Adaline neuron is done in the same manner as in the case of Perceptron. The output of the activation function, which is the net input, is compared with 0, and the prediction is 1 if the net input is greater than or equal to 0, and 0 otherwise. Pay attention in the above diagram to how the output of the activation function is fed into the threshold function.
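The four steps above can be sketched in a few lines of NumPy. This is only a minimal illustration and not necessarily the implementation used later in this post. The function names, the toy data, the learning rate `eta` and the bias term `b` are all assumptions made for the example. The sketch also assumes a mean-squared-error cost minimized with batch gradient descent, and it maps the 0/1 labels to -1/+1 during training so that comparing the activation output with 0 separates the two classes.

```python
import numpy as np

def net_input(X, w, b):
    """Net input: sum of the weighted input signals plus a bias term."""
    return X @ w + b

def activation(z):
    """Identity (linear) activation: g(z) = z."""
    return z

def predict(X, w, b):
    """Threshold function: 1 if the activation output is >= 0, else 0."""
    return np.where(activation(net_input(X, w, b)) >= 0.0, 1, 0)

def fit(X, y, eta=0.1, n_iter=50):
    """Learn weights with batch gradient descent (hypothetical helper).

    Assumption: the cost is half the mean squared error between the
    activation output and targets encoded as -1 / +1.
    """
    targets = np.where(y == 1, 1.0, -1.0)  # map labels 0/1 to -1/+1
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    costs = []
    for _ in range(n_iter):
        output = activation(net_input(X, w, b))
        errors = targets - output          # continuous error, not 0/1
        # Record the cost of the weights used to compute this output.
        costs.append((errors ** 2).mean() / 2.0)
        # Step against the gradient of the cost.
        w += eta * (X.T @ errors) / n_samples
        b += eta * errors.mean()
    return w, b, costs

# Toy, linearly separable data invented for this illustration.
X = np.array([[-2.0, -1.0], [-1.0, -2.0], [-1.5, -1.5],
              [1.0, 2.0], [2.0, 1.0], [1.5, 1.5]])
y = np.array([0, 0, 0, 1, 1, 1])

w, b, costs = fit(X, y)
preds = predict(X, w, b)
print("weights:", w, "bias:", b)
print("predictions:", preds)
```

Note that the weight update uses the continuous output of the activation function, while the threshold function is applied only when making the final 1 or 0 prediction. This is the key difference from Perceptron described above.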
