Artistic style transfer is one of the most intuitive and accessible computer vision tasks out there. Though there’s a lot happening under the hood of a style transfer model, functionally, it’s quite simple.

Style transfer takes two images — a content image and a style reference image — and blends them so that the resulting output image retains the core elements of the content image, but appears to be “painted” in the style of the style reference image.
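To make the "content vs. style" split a bit more concrete, here is a minimal pure-Python sketch of one common formulation of the two loss terms, the Gram-matrix approach from classic neural style transfer. This is an assumption for illustration only; I'm not claiming it's what Snap's Notebook does, and the function names and `style_weight` value are hypothetical. Real models compute these terms on CNN feature maps, not tiny lists.

```python
# Illustrative sketch only: toy versions of the content and style losses
# used in classic (Gram-matrix) neural style transfer.
# "features" is a list of channels, each a flat list of activations.

def gram_matrix(features):
    """Channel-by-channel correlations, averaged over spatial positions."""
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]

def content_loss(output_feats, content_feats):
    """Mean squared difference between feature maps (keeps spatial layout)."""
    diffs = [(o - c) ** 2
             for out_ch, con_ch in zip(output_feats, content_feats)
             for o, c in zip(out_ch, con_ch)]
    return sum(diffs) / len(diffs)

def style_loss(output_feats, style_feats):
    """Mean squared difference between Gram matrices (keeps texture only)."""
    g_out = gram_matrix(output_feats)
    g_sty = gram_matrix(style_feats)
    diffs = [(a - b) ** 2
             for row_o, row_s in zip(g_out, g_sty)
             for a, b in zip(row_o, row_s)]
    return sum(diffs) / len(diffs)

def total_loss(output_feats, content_feats, style_feats, style_weight=10.0):
    """Weighted sum; style_weight is a hypothetical value, not a tuned one."""
    return (content_loss(output_feats, content_feats)
            + style_weight * style_loss(output_feats, style_feats))
```

The thing to notice is that the Gram matrix throws away where things are in the image: shuffling the positions inside each channel leaves the style term unchanged while the content term goes up. That's roughly why the output can keep the content image's layout while picking up the style image's textures.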

_For a more complete look at what style transfer is, how it works, and what it’s being used for, check out our full guide._

Style transfer models, as it turns out, also run very well on mobile phones, for both images and real-time video. As such, it’s not entirely surprising that Snap’s Lens Studio 3.0, with the introduction of SnapML, includes a template for building Lenses using style transfer models.

So far, we’ve covered the release of SnapML, taken a closer look at the templates you can work with, and provided a high-level technical overview—so I won’t be covering any of that here.

Instead, I’ll be working through an implementation of a custom style transfer Lens—from building the model, to integrating it in Lens Studio, to deploying and testing it within Snapchat.

But if you need or would like to get caught up on SnapML first, I’d encourage you to check out these resources from our team:

Lens Studio 3.0 introduces SnapML for adding custom neural networks directly to Snapchat

Including a conversation with Hart Woolery, CEO of 2020CV and a SnapML creator

Exploring SnapML: A Technical Overview

Our high-level technical overview of Lens Studio’s new framework for custom machine learning models

Working with SnapML Templates in Lens Studio: An Overview

Add powerful ML features to your Snap Lenses using SnapML’s easy-to-use templates

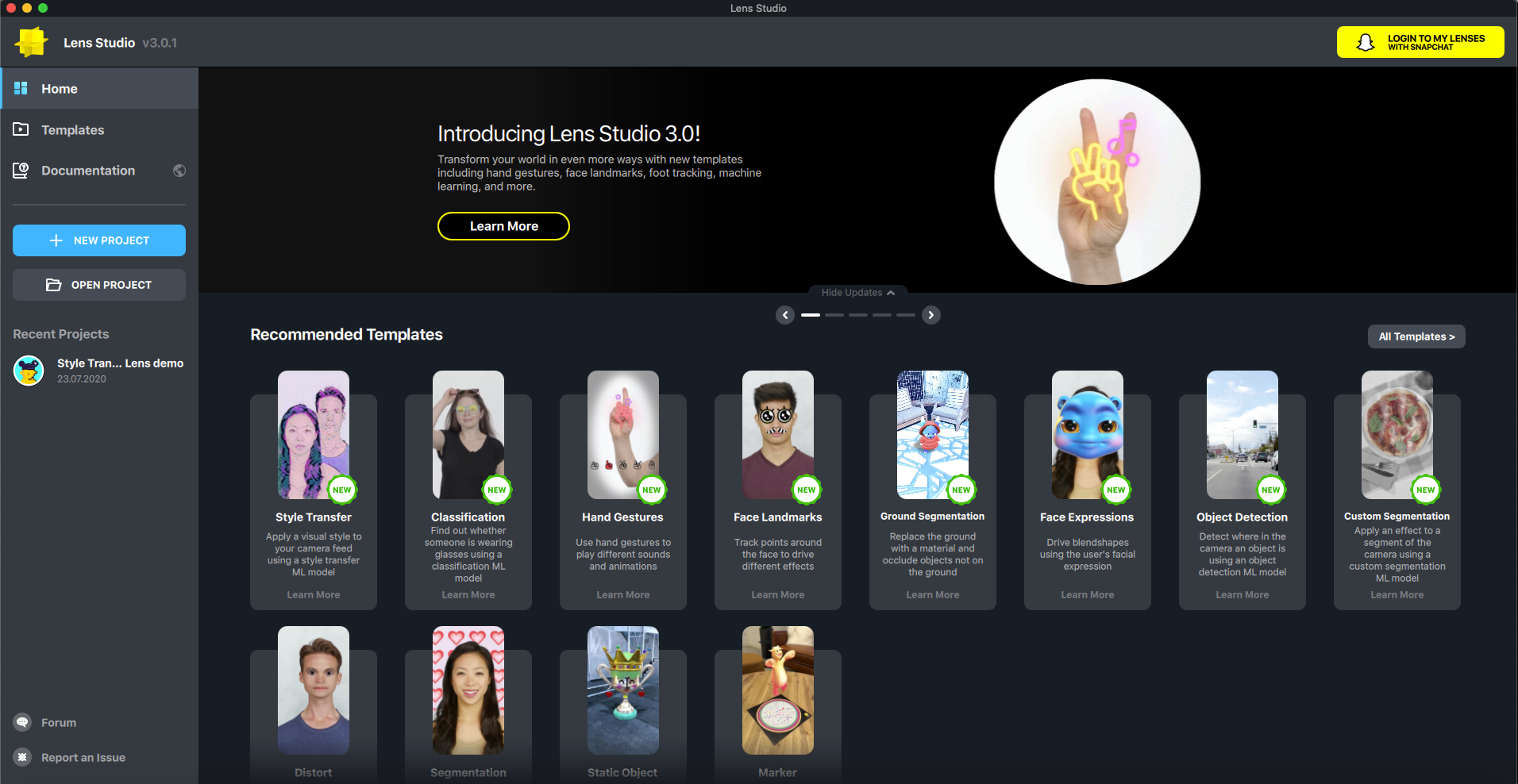

The Style Transfer Template

Lens Studio Home — Note the Style Transfer option under “Recommended Templates”

Before we jump into building our model and Lens, I first want to give you a sense of what’s included in Lens Studio’s Style Transfer template.

Essentially, each ML-based Lens Studio template includes:

- A preconfigured project inside Lens Studio with an ML Component (Snap’s term for a container that holds the ML model file and input/output configurations) implemented. There’s also a sample model included by default.

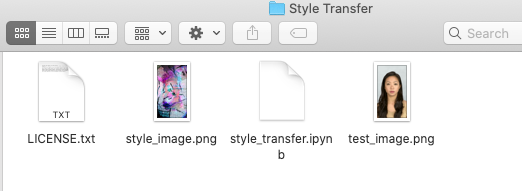

- Access to a zip file that includes a Jupyter Notebook file (.ipynb) that you can run inside Google Colab. You’ll want to download this and unzip it (see below). I created a primary project folder on my local machine and nested this folder inside it.

- Other important project files, depending on the ML task at hand (for Style Transfer, Snap provides a sample style reference image and a content/test image).

Contents of the Style Transfer zip file.
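If you'd rather script the unzip step than do it by hand, something like the following would work. It's a minimal sketch using only the Python standard library; the function name and the archive and folder names in the usage note are placeholders I made up, so swap in whatever your download is actually called.

```python
import zipfile
from pathlib import Path

def extract_template(zip_path, project_dir):
    """Unzip the template archive into its own folder inside project_dir
    and return the paths of any Jupyter Notebooks found in it."""
    zip_path = Path(zip_path)
    # Nest the extracted files in a folder named after the archive.
    target = Path(project_dir) / zip_path.stem
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
    # The .ipynb file is the one you'll upload to Colab later.
    return sorted(target.rglob("*.ipynb"))
```

For example, `extract_template("style_transfer.zip", "my_lens_project")` (both names hypothetical) would create `my_lens_project/style_transfer/` and return the Notebook path(s) inside it.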

Training a Custom Style Transfer Model in Google Colab

I was pleasantly surprised at how easy it was to work with the Notebook the Snap team had prepared. Let’s work through the steps below:

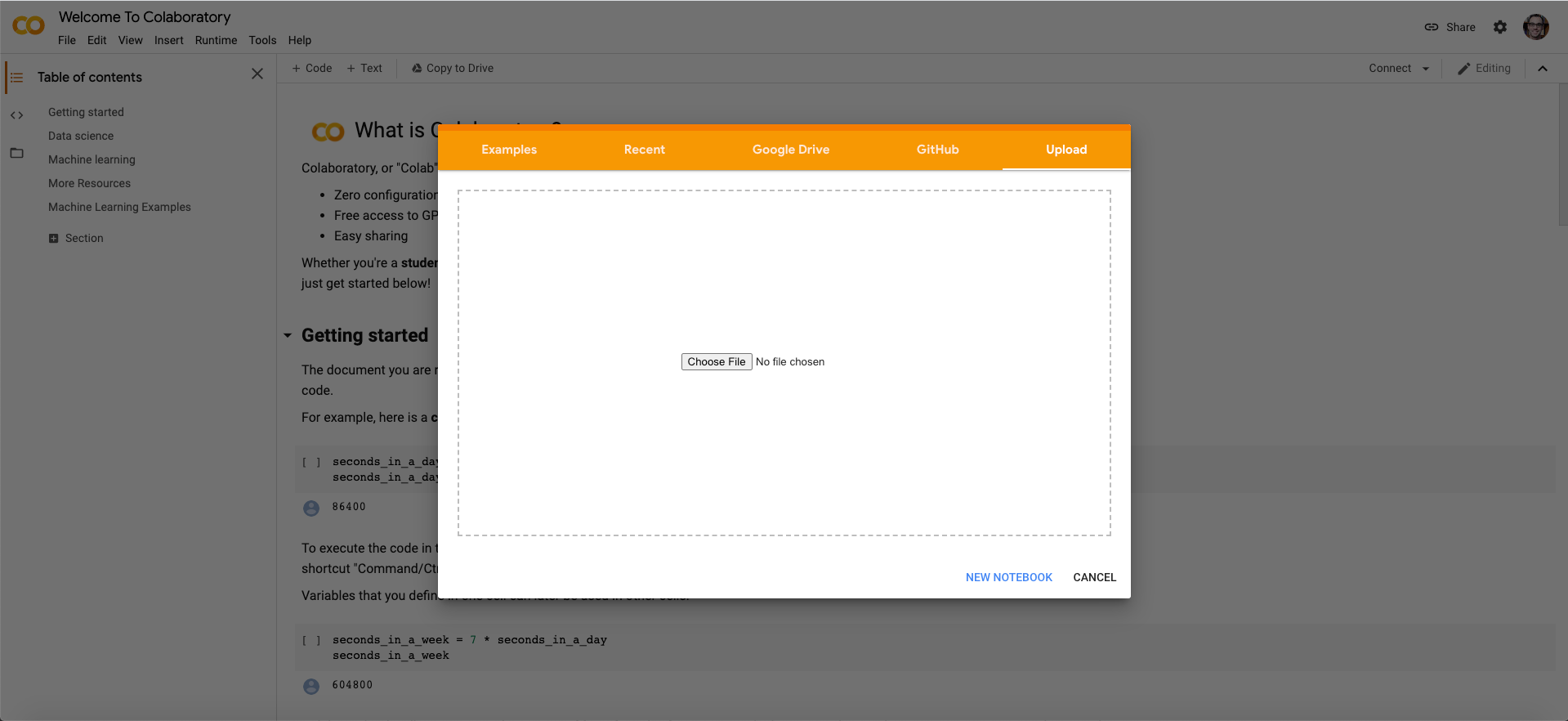

Step 1: Upload Style Transfer Notebook

Simply open Google Colab, and in the intro flow where you’re prompted to choose a project/Notebook, drag-and-drop the provided Python Notebook file. Once you’ve done that, Colab should automatically connect to the appropriate runtime—so for the purposes of this project, you won’t need to adjust anything there.

Drag-and-drop the provided Notebook file here.

Step 2: Upload Style and Reference (Test) Images

Because we’re training our own custom style transfer model, we’ll need to supply our model with the necessary training inputs: a style image and a _content reference_ image.

With other templates (e.g., Classification, Segmentation), you’d need to upload full image datasets, but here, given the model architecture and task, you’ll only need to upload two images. These are the ones I chose:

Left: Style Image; Right: Content Reference Image

To upload these, simply drag-and-drop them into the “Files” tab on the left-side panel. Click “Ok” when notified that exiting the runtime will delete these files — we should have a fully trained model once we’re done with this process, so no need to worry about that.

_Important note:_ To ensure the model accepts these two input images, they must be named _style_image_ and _test_image_, respectively. They should be in _.png_ format.
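A quick local check before uploading can save a failed run. The sketch below assumes the full file names are `style_image.png` and `test_image.png` (my reading of the naming rule above, not something I've confirmed in the Notebook), and the helper name `check_inputs` is hypothetical. It also peeks at the first eight bytes of each file, since a JPEG that was simply renamed to `.png` is still a JPEG.

```python
from pathlib import Path

# Every valid PNG file starts with these eight bytes.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Assumed file names, based on the naming requirement described above.
REQUIRED = ("style_image.png", "test_image.png")

def check_inputs(folder="."):
    """Return a list of problems; an empty list means both images look OK."""
    problems = []
    for name in REQUIRED:
        path = Path(folder) / name
        if not path.is_file():
            problems.append(f"{name}: missing")
            continue
        with open(path, "rb") as f:
            header = f.read(8)
        if header != PNG_SIGNATURE:
            problems.append(f"{name}: not a real PNG (renamed from another format?)")
    return problems
```

Run `check_inputs("path/to/your/images")` and fix anything it reports before dragging the files into Colab.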

Step 3: Train the Model

Because all the model needs is a single style image to train on and a reference image to test on, we’re ready to train our model once we’ve uploaded these two images.

If you scroll through the Notebook, you’ll notice each step in the process is commented, with explanations for what’s happening at each step. I like this kind of additional detail, as it gives small windows into how the model is trained at each step, along with the requisite code blocks. So if you’re interested, I’d encourage you to sift through it to get a flavor of Snap’s magic sauce.

But if you just want to train your model, click **Runtime** in the top nav bar and choose the first option, **Run All**. This will automatically run all code blocks in succession, all the way from installing the necessary libraries to importing training data (the model leverages the COCO dataset to help train) to training, converting, and downloading the model.
