Understanding MLP-Mixers from beginning to the end, with TF Keras code

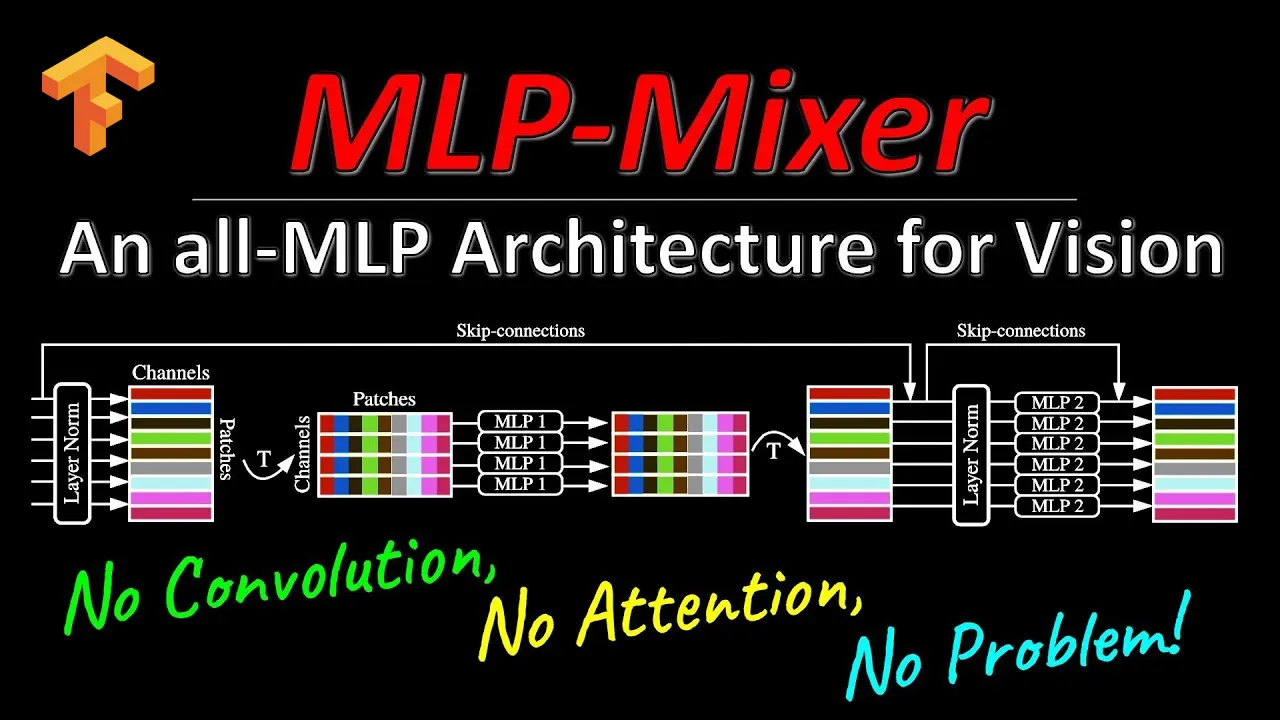

Earlier this May, a group of researchers from Google released a paper “MLP-Mixer: An all-MLP Architecture for Vision” introducing their MLP-Mixer ( Mixer, for short ) model for solving computer vision problems. The research suggests that MLP-Mixer attains competitive scores on image classification benchmarks such as the ImageNet.

One thing that would catch every ML developer’s eyes, is that they haven’t used convolutions in their architecture. Convolutions have reigned computer vision since long as they are efficient in extracting spatial information from images and videos. Recently, Transformers, that were originally used for NLP problems, have shown remarkable results in computer vision problems as well. The research paper for MLP-Mixer suggests,

In this paper we show that while convolutions and attention are both sufficient for good performance, neither of them are necessary.

I have used MLP-Mixers for text classification as well,

We’ll discuss more on MLP-Mixer’s architecture and underlying techniques involved. Finally, we provide a code implementation for MLP-Mixer using TensorFlow Keras.

📃 Contents

- 👉 Dominance of Convolutions, advent of Transformers

- 👉 Multilayer Perceptron ( MLP ) and the GELU activation function

- 👉 MLP-Mixer Architecture Components

- 👉 The End Game

- 👉 More projects/blogs/resources from the author

#machine-learning #artificial-intelligence #neural-networks #python #tensorflow #mlp mixer is all you need?