3D object detection has been receiving increasing attention from both industry and academia owing to its applications in fields such as autonomous driving and robotics. LiDAR sensors are widely used in autonomous vehicles and robots to capture 3D scene information as sparse, irregular point clouds, which provide vital cues for 3D scene perception and understanding.

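Most existing 3D detection methods can be classified into two categories according to their point cloud representation: grid-based methods and point-based methods. Grid-based methods convert the irregular point cloud into regular representations such as 3D voxels or 2D bird's-eye-view maps, which CNNs can process efficiently, although quantization loses fine localization detail. Point-based methods extract features directly from the raw points, preserving precise locations and offering flexible receptive fields, at a higher computational cost.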
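To make the grid-based representation concrete, here is a minimal NumPy sketch of voxelization: points inside a crop box are assigned to integer voxel indices, and each occupied voxel gets the mean of its points' features. The helper name `voxelize`, the mean-pooling choice, and the voxel size and range used below are hypothetical illustrations, not the encoding used by any particular detector.

```python
import numpy as np


def voxelize(points, voxel_size, pc_range):
    """Quantize an (N, 3 + C) point cloud into occupied voxels (hypothetical helper).

    points:     array (N, 3 + C); the first three columns are x, y, z.
    voxel_size: (vx, vy, vz) voxel edge lengths.
    pc_range:   (x_min, y_min, z_min, x_max, y_max, z_max) crop box.
    Returns (coords, features): integer voxel indices (M, 3) and the mean
    of all point rows that fall into each occupied voxel (M, 3 + C).
    """
    points = np.asarray(points, dtype=np.float64)
    voxel_size = np.asarray(voxel_size, dtype=np.float64)
    lo = np.asarray(pc_range[:3], dtype=np.float64)
    hi = np.asarray(pc_range[3:], dtype=np.float64)

    # Keep only points inside the half-open crop box [lo, hi).
    inside = np.all((points[:, :3] >= lo) & (points[:, :3] < hi), axis=1)
    points = points[inside]

    # Integer voxel index of every remaining point.
    idx = np.floor((points[:, :3] - lo) / voxel_size).astype(np.int64)

    # Unique occupied voxels; `inverse` maps each point to its voxel row.
    coords, inverse = np.unique(idx, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # guard against NumPy versions returning (N, 1)

    # Sum point rows per voxel, then divide by the point count (mean pooling).
    sums = np.zeros((len(coords), points.shape[1]))
    np.add.at(sums, inverse, points)
    counts = np.bincount(inverse, minlength=len(coords))[:, None]
    return coords, sums / counts
```

For example, with a 0.1 m voxel size and a unit crop box, two points near the origin share voxel `(0, 0, 0)` and are averaged, a point at x = 0.25 lands in voxel `(2, 0, 0)`, and a point outside the box is discarded. Only occupied voxels are returned, which mirrors the sparsity that grid-based detectors exploit with sparse 3D convolutions.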

To make full use of the complementary strengths of these two feature extraction approaches, the authors of [1] investigate how to deeply integrate them into a single network, increasing its structural diversity and representational capability.

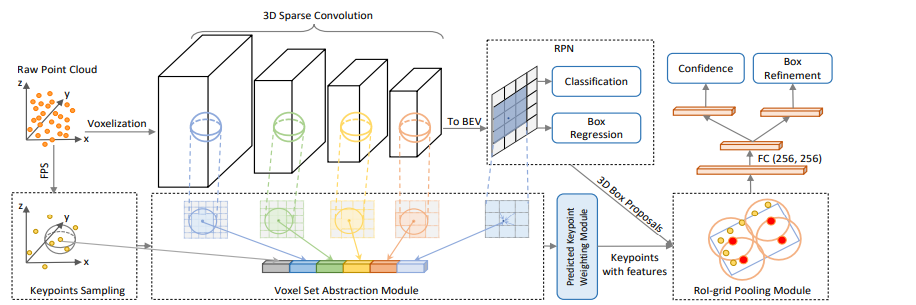

A novel high-performance 3D object detection framework, PointVoxel-RCNN (PV-RCNN), is proposed for accurate 3D object detection from point clouds. It deeply integrates a 3D voxel convolutional neural network (CNN) with PointNet-based set abstraction to learn more discriminative point cloud features, combining the high-quality proposals of the 3D voxel CNN with the flexible receptive fields of PointNet-based networks.
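The PointNet-based set abstraction mentioned above can be sketched in a few lines of NumPy: farthest point sampling picks well-spread centers, a ball query groups the neighbours within a radius, and a shared MLP followed by max pooling produces one order-invariant feature per center. In PV-RCNN, keypoints are sampled with farthest point sampling and a voxel set abstraction module aggregates multi-scale 3D voxel CNN features around them; this sketch instead groups raw point features, and the helper names, the single-layer MLP, the radius, and the sample cap are hypothetical choices, not the paper's implementation.

```python
import numpy as np


def farthest_point_sampling(xyz, k):
    """Greedy FPS (hypothetical helper): return k indices spread over xyz (N, 3)."""
    n = xyz.shape[0]
    chosen = np.zeros(k, dtype=np.int64)  # chosen[0] = 0: start from the first point
    dist = np.full(n, np.inf)  # squared distance to the nearest chosen point
    for i in range(1, k):
        d = np.sum((xyz - xyz[chosen[i - 1]]) ** 2, axis=1)
        dist = np.minimum(dist, d)
        chosen[i] = int(np.argmax(dist))  # farthest from everything chosen so far
    return chosen


def set_abstraction(xyz, feats, centers, radius, max_samples, weight, bias):
    """PointNet-style set abstraction at the given centers (hypothetical helper).

    For each center: ball-query neighbours within `radius` (capped at
    `max_samples`), express their coordinates relative to the center,
    concatenate their features, apply a shared one-layer MLP with ReLU,
    and max-pool over the group so the result ignores point order.
    """
    out = np.zeros((len(centers), weight.shape[1]))
    for j, c in enumerate(centers):
        d2 = np.sum((xyz - c) ** 2, axis=1)
        nbr = np.flatnonzero(d2 <= radius ** 2)[:max_samples]
        if nbr.size == 0:
            continue  # empty ball: leave a zero feature vector
        local = np.concatenate([xyz[nbr] - c, feats[nbr]], axis=1)
        h = np.maximum(local @ weight + bias, 0.0)  # shared MLP + ReLU
        out[j] = h.max(axis=0)  # symmetric max pooling over the group
    return out
```

For example, with 1,000 random points carrying 4-dimensional features, sampling 32 centers and using a 7 × 16 weight matrix (3 relative coordinates plus 4 features in, 16 channels out) yields a `(32, 16)` feature array. Because each center is described by a max over its neighbourhood, the output does not depend on the order in which points are stored, and the radius directly controls the receptive field.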

#object-oriented #cnn #machine-learning #computer-vision #point-cloud