In this article, we will be exploring various feature selection techniques that we need to be familiar with, in order to get the best performance out of your model.

- SelectKBest

- Linear Regression

- Random Forest

- XGBoost

- Recursive Feature Elimination

- Boruta

SelectKBest

SelectKbest is a method provided by sklearn to rank features of a dataset by their “importance ”with respect to the target variable. This “importance” is calculated using a score function which can be one of the following:

- f_classif: ANOVA F-value between label/feature for classification tasks

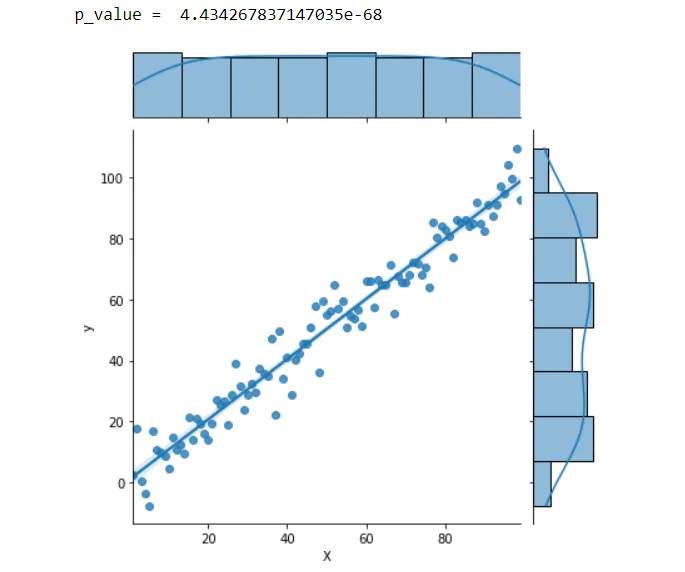

- f_regression: F-value between label/feature for regression tasks.

- chi2: Chi-squared stats of non-negative features for classification tasks.

- mutaul_info_classif: Mutual information for a discrete target.

- SelectPercentile: Select features based on the percentile of the highest scores.

- SelectFpr: Select features based on a false positive rate test.

- SelectFdr: Select features based on an estimated false discovery rate.

- SelectFwe: Select features based on the family-wise error rate.

- GenericUnivariateSelect: Univariate feature selector with configurable mode.

All of the above-mentioned scoring functions are based on statistics. For instance, the f_regression function arranges the** p_values** of each of the variables in increasing order and picks the best K columns with the least p_value. Features with a p_value of less than** 0.05** are considered “significant” and only these features should be used in the predictive model.

#artificial-intelligence #big-data #data-science #machine-learning