The following blog will answer these questions:

- Why do we need RNNs?

- What are RNNs?

- How do RNNs work?

- What are the problems with RNNs?

- What is LSTM in RNNs?

Let me begin this article with a question: which of the following sentences makes sense?

- neural why recurrent need we network do

- why do we need a recurrent neural network

It's obvious that the second one makes sense, because the order of the words is preserved. So, whenever the order of the data matters, we use an RNN. RNNs in general, and LSTMs in particular, have had the most success when working with sequences of words and paragraphs, a field generally called natural language processing (NLP).
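
To make the point concrete, here is a minimal sketch of why word order carries information. It compares a sentence with a scrambled copy in two ways: as a bag of words (counts only) and as a sequence (position by position). The scramble here is simply the same words sorted alphabetically, an assumption chosen so the result is deterministic; the variable names are illustrative.

```python
from collections import Counter

sentence = "why do we need a recurrent neural network".split()
# Deterministic "scramble": the same words in alphabetical order.
scrambled = sorted(sentence)

# A bag-of-words view only counts words, so it cannot tell the two apart.
same_bag = Counter(sentence) == Counter(scrambled)

# A sequence view compares position by position, so the difference shows up.
same_sequence = sentence == scrambled

print("same bag of words:", same_bag)
print("same sequence:", same_sequence)
```

A model that only sees word counts treats both versions as identical, which is why sequence-aware models are useful for this kind of data.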

RNNs are used with the following kinds of data and problems:

- Text data

- Speech data

- Classification prediction problems

- Regression prediction problems

- Generative models

Some well-known applications of RNNs include Google Assistant, Google Translate, stock prediction, image captioning, and many more.

Generally, we don't use RNNs for tabular datasets (such as CSV files) or image datasets. Although NLP covers text processing broadly, RNNs come into the picture when the order of the words in a sentence matters.

What are RNNs?

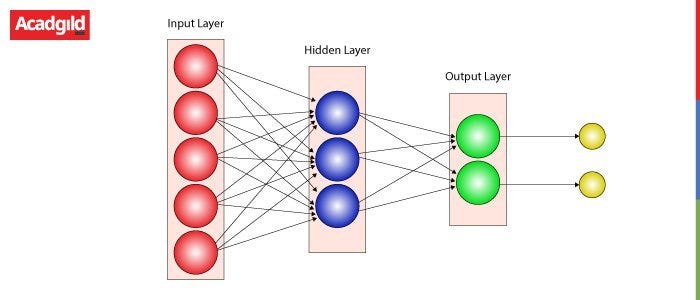

In a traditional neural network, we assume that all inputs (and outputs) are independent of each other. But for many tasks that is a very bad idea. If you want to predict the next word in a sentence, you had better know which words came before it. RNNs are called recurrent because they perform the same task for every element of a sequence, with each output depending on the previous computations.

An RNN has a “memory” that captures information about what has been calculated so far. It uses the same parameters for every input, because it performs the same task at every step to produce the output. This keeps the number of parameters small, unlike a network that learns a separate set of weights for each step.
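
The effect of sharing parameters can be checked with some simple arithmetic. The sketch below is only an illustration: `count_params` is a hypothetical helper, and it assumes a basic recurrent layer with one input-to-hidden matrix, one hidden-to-hidden matrix, and one bias vector per step.

```python
def count_params(input_size: int, hidden_size: int, steps: int, shared: bool) -> int:
    """Count weights and biases for a simple recurrent layer over `steps` inputs."""
    # One step needs an input-to-hidden matrix, a hidden-to-hidden matrix, and a bias.
    per_step = input_size * hidden_size + hidden_size * hidden_size + hidden_size
    # Shared: the same set is reused at every step. Unshared: one set per step.
    return per_step if shared else per_step * steps


for steps in (3, 10, 100):
    shared = count_params(input_size=8, hidden_size=16, steps=steps, shared=True)
    unshared = count_params(input_size=8, hidden_size=16, steps=steps, shared=False)
    print(f"steps={steps:3d}  shared={shared}  unshared={unshared}")
```

With shared parameters the count stays fixed no matter how long the sequence is, while the unshared count grows in proportion to the number of steps.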

How do RNNs work?

Let’s suppose that the neural network has three hidden layers. The first has weights and biases w1, b1; the second has w2, b2; and the third has w3, b3. This means that these layers are independent of each other, i.e. they do not memorize the previous outputs.

Now, we need to convert this simple neural network into an RNN. This is done by assigning the same weights and biases to all the hidden layers. Doing so keeps the number of parameters from growing with each layer, and the network memorizes the previous outputs by giving each output as input to the next hidden layer.

- Let’s say we assigned a word W1 to the 1st hidden layer at time t1.
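
The idea of reusing one set of weights and passing each output to the next step can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a full implementation: the `tanh` activation, the split into input weights `w_x` and hidden weights `w_h`, the layer sizes, and the random word vectors standing in for W1, W2, W3 are all choices made here for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 4, 3

# One shared set of parameters, reused at every time step.
w_x = 0.5 * rng.normal(size=(hidden_size, input_size))   # input-to-hidden weights
w_h = 0.5 * rng.normal(size=(hidden_size, hidden_size))  # hidden-to-hidden weights
b = np.zeros(hidden_size)


def run_rnn(inputs):
    """Feed one input per time step; return the hidden state after each step."""
    h = np.zeros(hidden_size)  # empty memory before t1
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(w_x @ x + w_h @ h + b)
        states.append(h)
    return np.stack(states)


# Hypothetical word vectors W1, W2, W3 arriving at times t1, t2, t3.
words = rng.normal(size=(3, input_size))
forward = run_rnn(words)
backward = run_rnn(words[::-1])  # same words, reversed order

print("states per step:", forward.shape)
print("final state unchanged by reordering:", np.allclose(forward[-1], backward[-1]))
```

Because each state depends on the one before it, feeding the same words in a different order generally leads to a different final state, which is the behavior a network with independent layers cannot provide.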
