Overview: SnapML in Lens Studio

In 2015, Snapchat, the incredibly popular social content platform, added Lenses to its mobile app: augmented reality (AR) filters that give you big strange teeth, turn your face into an alpaca, or trigger digital brand-based experiences.

In addition to AR, the other core underlying technology in Lenses is mobile machine learning — neural networks running on-device that do things like create a precise map of your face or separate an image/video’s background from its foreground.

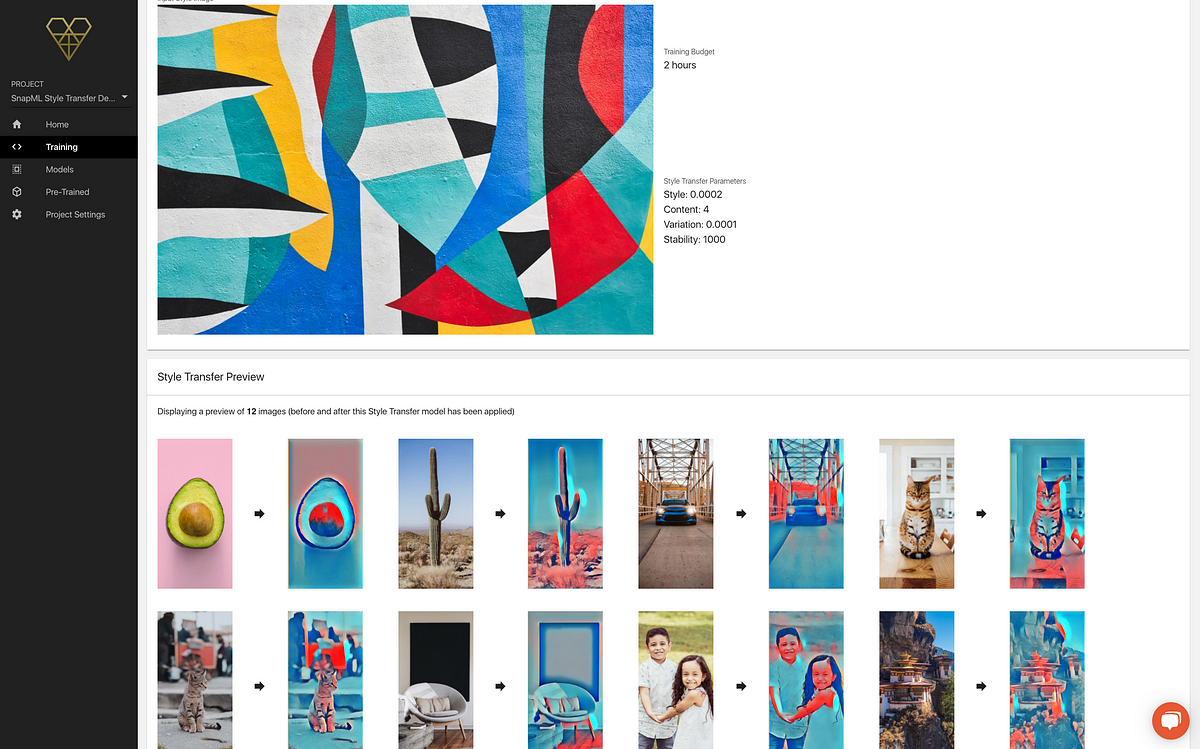

With the release of Lens Studio 3.x (current version is 3.2 at the time of writing), the Lens Studio team introduced SnapML, a framework that facilitates a connection between custom machine learning models and Lens Studio.

There are currently a number of impressive pre-built templates available in Lens Studio’s official template library. But to truly unlock the potential for things like custom object tracking, improved scene understanding, and deeper immersion between the physical and digital worlds, creators need to be able to build custom models.

And that’s where there remains an undeniable limiting factor: ML is really hard.

The expertise and skill sets that ML project lifecycles require are specialized and often don’t match those of the creators, designers, and others developing Snapchat Lenses. This gap is a significant barrier to entry for many creators and creative agencies, who generally don’t have the resources (time, skills/expertise, or tools) to invest in model building pipelines.
