Let’s say you’re looking to buy a new PC from an online store (and you’re most interested in how much RAM it has) and you see on their first page some PCs with 4GB at $100, then some with 16 GB at $1000. Your budget is $500. So, you estimate in your head that given the prices you saw so far, a PC with 8 GB RAM should be around $400. This will fit your budget and decide to buy one such PC with 8 GB RAM.

This kind of estimations can happen almost automatically in your head without knowing it’s called linear regression and without explicitly computing a regression equation in your head (in our case: y = 75x - 200).

So, what is linear regression?

I will attempt to answer this question simply:

Linear regression is just the process of estimating an unknown quantity based on some known ones (this is the regression part) with the condition that the unknown quantity can be obtained from the known ones by using only 2 operations: scalar multiplication and addition (this is the linear part). We multiply each known quantity by some number, and then we add all those terms to obtain an estimate of the unknown one.

It may seem a little complicated when it is described in its formal mathematical way or code, but, in fact, the simple process of estimation as described above you probably already knew way before even hearing about machine learning. Just that you didn’t know that it is called linear regression.

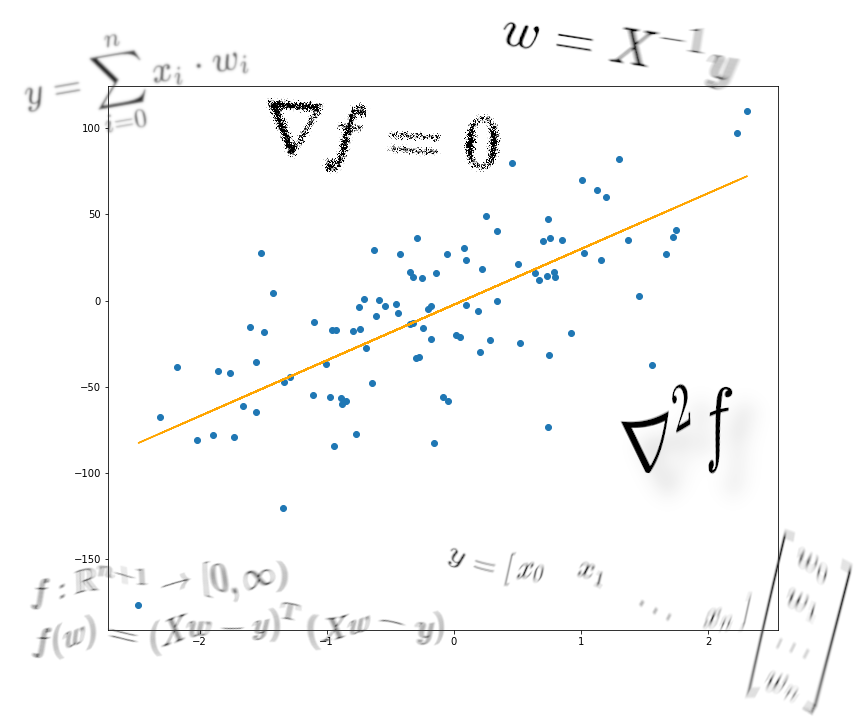

Now, let’s dive into the math behind linear regression.

In linear regression, we obtain an estimate of the unknown variable (denoted by y; the output of our model) by computing a weighted sum of our known variables (denoted by xᵢ; the inputs) to which we add a bias term.

Where n is the number of data points we have.

Adding a bias is the same thing as imagining we have an extra input variable that’s always 1 and using only the weights. We will consider this case to make the math notation a little easier.

Where x₀ is always 1, and w₀ is our previous b.

To make the notation a little easier, we will transition from the above sum notation to matrix notation. The weighted sum in the equation above is equivalent to the multiplication of a row-vector of all the input variables with a column-vector of all the weights. That is:

#data-science #machine-learning #visualization #artificial-intelligence #mathematics