Maths behind Decision Tree Classifier

Before we look at the Python implementation of the decision tree, let’s first understand the math behind decision tree classification. We will see how all the above-mentioned terms are used for splitting.

We will use a simple dataset containing information about students from different classes and genders, and whether or not they stay in the school’s hostel.

This is what our dataset looks like:

Let’s try to understand how the root node is selected by calculating Gini impurity, using the data above.

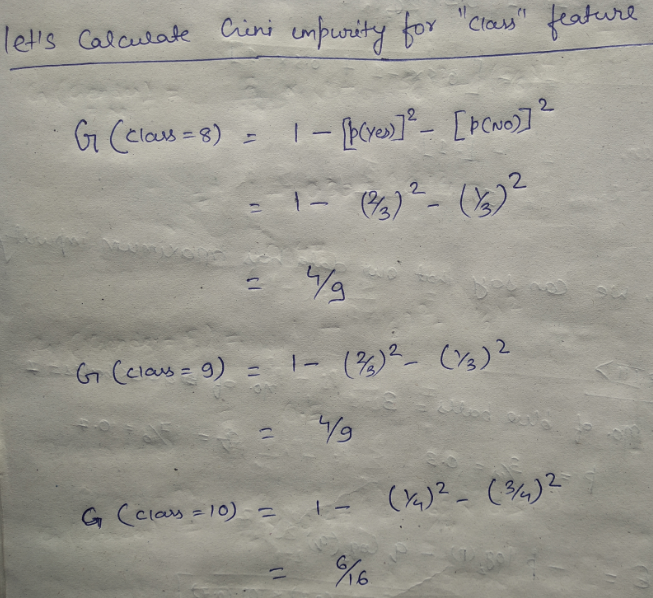

We have two features that we can use for the nodes: “Class” and “Gender”. We will calculate the Gini impurity of splitting on each feature (weighting each branch by its share of the students) and then select the feature with the lowest Gini impurity.
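As a rough sketch of this selection step, the Python below computes a size-weighted Gini impurity for each candidate feature and picks the smallest. The rows are a hypothetical toy dataset invented for illustration (not the dataset shown in the image), and the helper names `gini`, `weighted_gini`, and `best_feature` are our own, not from any library.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of target labels: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def weighted_gini(rows, feature, target):
    """Gini impurity of splitting `rows` on `feature`, each branch weighted by its size."""
    n = len(rows)
    branches = {}
    for row in rows:
        # Collect the target labels that fall into each value of the feature.
        branches.setdefault(row[feature], []).append(row[target])
    return sum(len(labels) / n * gini(labels) for labels in branches.values())

def best_feature(rows, features, target):
    """Return the feature whose split has the lowest weighted Gini impurity."""
    return min(features, key=lambda f: weighted_gini(rows, f, target))

# Hypothetical toy rows, invented for illustration only (NOT the article's data).
rows = [
    {"Class": "9th",  "Gender": "M", "Hostel": "Yes"},
    {"Class": "9th",  "Gender": "F", "Hostel": "Yes"},
    {"Class": "9th",  "Gender": "M", "Hostel": "Yes"},
    {"Class": "10th", "Gender": "F", "Hostel": "No"},
    {"Class": "10th", "Gender": "M", "Hostel": "No"},
    {"Class": "10th", "Gender": "F", "Hostel": "Yes"},
]

for feature in ("Class", "Gender"):
    print(feature, round(weighted_gini(rows, feature, "Hostel"), 4))
print("Root node:", best_feature(rows, ["Class", "Gender"], "Hostel"))
```

With these toy rows, splitting on “Class” gives a weighted Gini of about 0.2222 versus about 0.4444 for “Gender”, so “Class” would be chosen as the root. In a full tree, the same comparison is repeated at every node on the subset of rows that reaches it.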

Let’s review the formula for calculating Gini impurity:
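For reference, the standard definition of Gini impurity for a node containing samples from $C$ target classes is given below, where $p_i$ is the fraction of the node’s samples belonging to class $i$. When a feature splits a node of $n$ samples into branches $v$ with $n_v$ samples each, the branch impurities $G_v$ are usually combined as a weighted average:

$$
G = 1 - \sum_{i=1}^{C} p_i^{2}
\qquad\qquad
G_{\text{split}} = \sum_{v} \frac{n_v}{n}\, G_v
$$

For example, a node where every student stays in the hostel has $p_{\text{Yes}} = 1$ and $G = 0$, while a node split 50/50 between “Yes” and “No” has $G = 1 - (0.5^2 + 0.5^2) = 0.5$, the maximum for two classes.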

Let’s start with “Class”: we will calculate the Gini impurity for each of its distinct values.
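The per-value calculation for “Class” can be sketched in Python as follows. The (Class, Hostel) pairs are hypothetical values made up for illustration, not the article’s data; for each class value the code counts the students who do and do not stay in the hostel, applies $1 - \sum p_i^2$, and then takes the size-weighted average over the values.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of target labels: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

# Hypothetical (Class, Hostel) pairs, invented for illustration only.
data = [
    ("9th", "Yes"), ("9th", "Yes"), ("9th", "Yes"),
    ("10th", "No"), ("10th", "No"), ("10th", "Yes"),
]

# Group the hostel labels by each distinct value of "Class".
groups = {}
for cls, hostel in data:
    groups.setdefault(cls, []).append(hostel)

# Gini impurity of each branch, plus its size-weighted average over all branches.
per_value = {cls: gini(labels) for cls, labels in groups.items()}
weighted = sum(len(groups[cls]) / len(data) * g for cls, g in per_value.items())

for cls, labels in groups.items():
    print(f"Class={cls}: n={len(labels)}, Yes={labels.count('Yes')}, "
          f"No={labels.count('No')}, Gini={per_value[cls]:.4f}")
print(f"Weighted Gini for Class: {weighted:.4f}")
```

With these toy pairs, the “9th” branch is pure (Gini 0) and the “10th” branch has Gini 4/9 ≈ 0.4444, giving a weighted Gini of about 0.2222 for “Class”.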
