Introduction

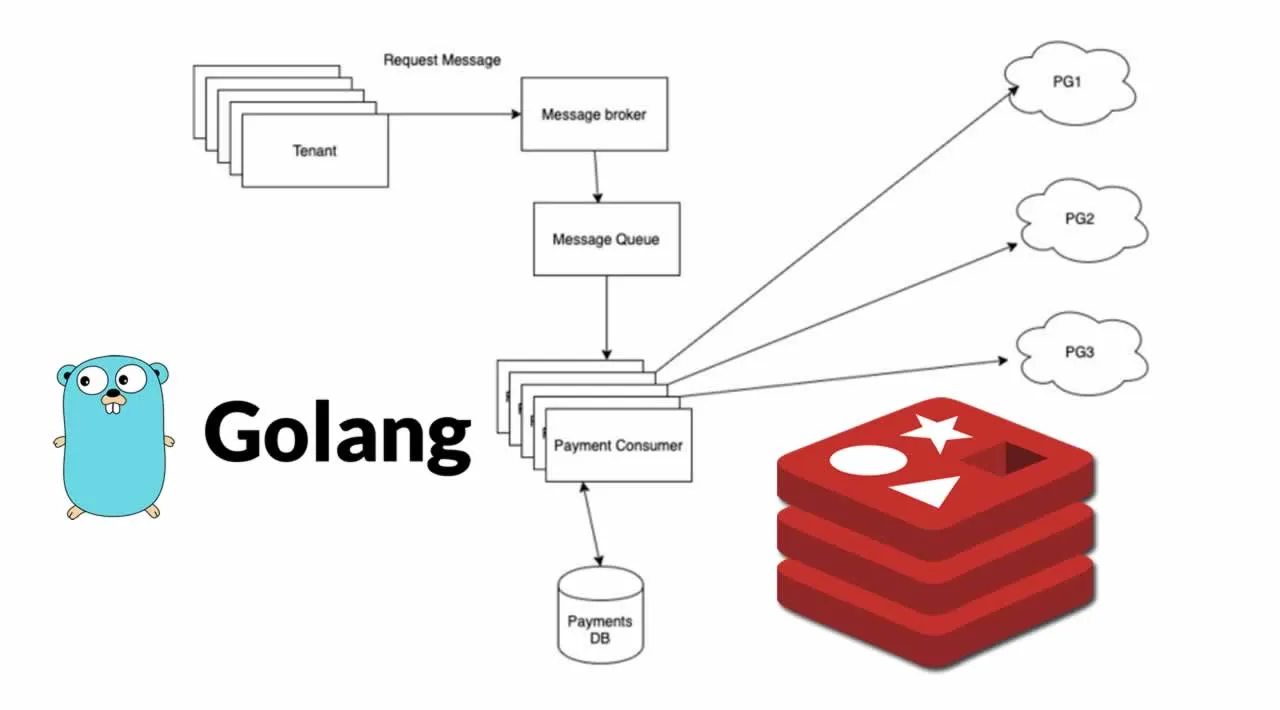

I work on an application that communicates with multiple payment providers. Each provider imposes its own rate limit on us. We did not want to exhaust our rate limit with any of the payment providers, but we also wanted to make the most of the limits we are allowed. We could afford to delay requests to a payment provider for a short time, because bulk payments are processed offline as async jobs.

During an average billing day, we run a large number of payments in a short span of time. Are we breaching our payment processors’ rate limits? Should we do something about that? I’ve heard about something called a rate limiter.

What is a rate limiter? A rate limiter caps the number of requests a sender can issue in a specific time window. It then blocks requests once the limit is reached.

Usually the rate limiter component sits in the receiving system, but since we depend so heavily on third-party processors, it also makes sense to respect each provider’s rate limit and control our outbound requests, so that we do not receive too many (or, optimistically, even a single) rate-limit rejection.

We started designing the rate limiter with these requirements in mind:

- It should limit the number of requests in a given time period to a particular payment provider

- Since our systems run on a cluster (as opposed to a single server), the rate limit should apply to the total number of requests made across the cluster, and not per application process.

- Our rate limiting logic should be atomic, and not fail even if multiple requests land on our systems concurrently.

#rate-limiting #golang #lua #redis