Homography-based IPM

In computer vision, a homography is a transformation matrix H that, when applied to a projective plane, maps it onto another plane (or image). In the case of Inverse Perspective Mapping (IPM), we want to produce a bird's-eye view (BEV) image of the scene from the front-facing image plane.

In the field of autonomous driving, IPM aids several downstream tasks, such as lane marking detection, path planning and intersection prediction, using only a monocular camera, because the resulting orthographic view is scale-invariant. This is what makes the technique so important.

How does IPM work?

IPM first assumes that the world is flat, i.e. that everything in the scene lies on a single plane. It then maps all pixels from a given viewpoint onto this plane through a homography.
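To make "mapping through a homography" concrete, here is a minimal NumPy sketch of applying a 3x3 matrix H to 2D points. The function name `apply_homography` and the matrix values are my own, chosen purely for illustration; they are not taken from the code accompanying this article.

```python
import numpy as np

def apply_homography(H, points):
    """Map an (N, 2) array of 2D points through a 3x3 homography H."""
    points = np.asarray(points, dtype=float)
    # Lift to homogeneous coordinates: (x, y) -> (x, y, 1)
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    mapped = homogeneous @ H.T
    # Divide by the last coordinate to come back to the 2D plane
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical homography, for illustration only
H = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.5, 1.0]])

square = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
warped = apply_homography(H, square)
print(warped)
```

The non-zero entry in the last row of H is what makes the mapping projective rather than affine: the top edge of the unit square shrinks towards the origin, so the square becomes a trapezoid.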

When does IPM work?

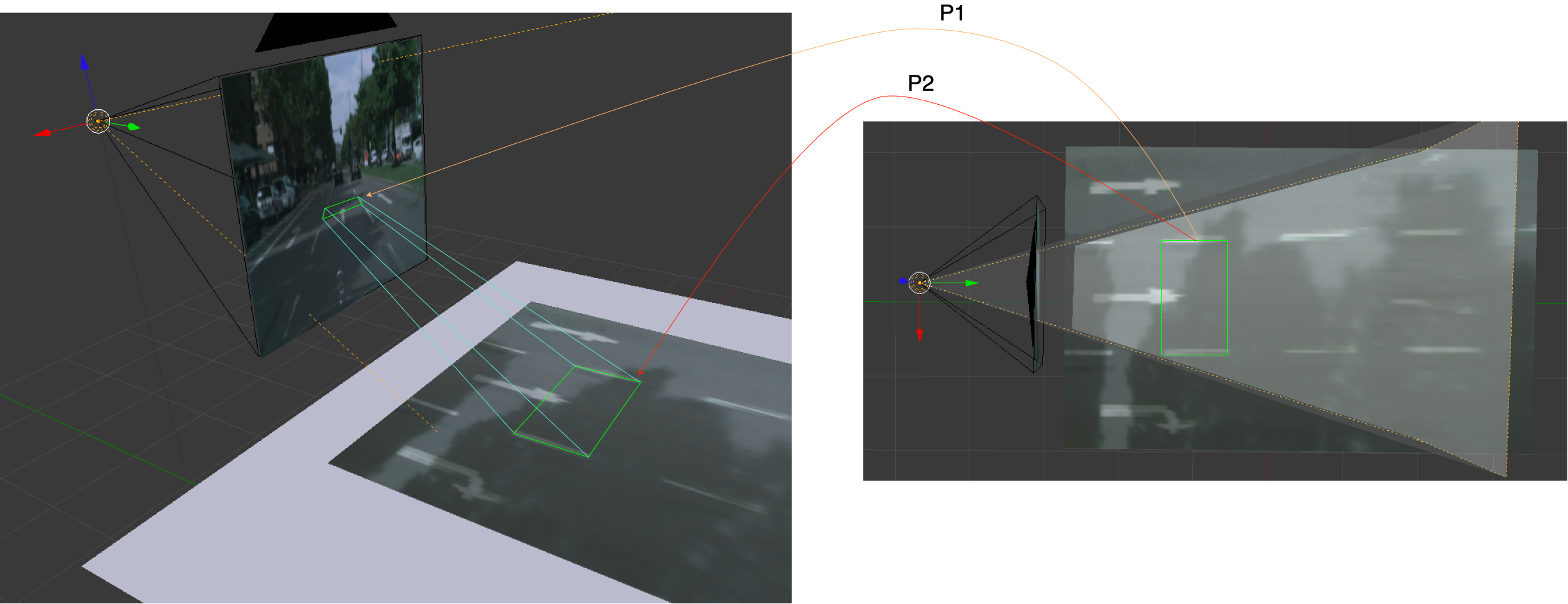

In practice, IPM works well in the immediate vicinity of the camera. For faraway features in the scene, blurring and stretching become more prominent because a distant stretch of road is represented by far fewer pixels in the image, which limits the application of IPM. This can be observed in figure 1, where severe distortion appears farther away from the camera. To be exact, the lookahead distance in the figure is approximately 50 m.
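A rough way to see why distant regions get stretched is to compute how much road a single image row covers at different distances. The sketch below assumes a simplified pinhole camera with a horizontal optical axis (zero pitch) above a flat road. The focal length, principal point and camera height are hypothetical values, not the Cityscapes calibration.

```python
# Hypothetical camera: focal length (px), principal point row (px), height (m)
f, cy, cam_height = 2000.0, 512.0, 1.5

def ground_distance(row):
    """Forward distance (m) of the flat-road point seen at image row `row`.

    Assumes zero pitch, so a road point at distance d projects to
    row = cy + f * cam_height / d (rows below the horizon only).
    """
    return f * cam_height / (row - cy)

def metres_per_row(distance):
    """Length of road covered by the single image row that sees `distance`."""
    row = cy + f * cam_height / distance
    # Moving one row up in the image looks farther down the road
    return ground_distance(row - 1) - distance

for d in (5.0, 20.0, 50.0):
    print(f"at {d:4.0f} m one image row covers {metres_per_row(d):.4f} m of road")
```

With these made-up numbers, one row covers less than a centimetre of road at 5 m but close to a metre at 50 m, so each faraway source pixel has to be smeared over many BEV pixels.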

In addition, the following constraints must hold:

- The camera is in a fixed position: Since where the road appears in the image depends on the camera pose, even a slight perturbation in position or orientation will change how the 3D scene is projected onto the image plane.

- The surface is planar: Any height or elevation in the surface will violate this condition. Non-planar surfaces will create artefacts/distortion in the BEV image.

- The scene is free of objects with height: All points above the ground will induce artefacts, because the perspective projection only relates two planes in the 3D scene and every pixel is treated as if it lay on the ground plane.
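The effect of the last two constraints can be estimated with similar triangles. IPM extends the viewing ray through a raised point until it hits the ground, so the point lands farther away than it really is. The helper below is a hypothetical illustration of that geometry and the numbers are made up.

```python
def ipm_ground_distance(distance, point_height, cam_height):
    """Forward distance at which IPM places a point that is really at
    `distance` metres and `point_height` metres above the flat road.

    Derived from similar triangles along the viewing ray of a camera
    mounted `cam_height` metres above the road.
    """
    if point_height >= cam_height:
        raise ValueError("the viewing ray never reaches the ground plane")
    return distance * cam_height / (cam_height - point_height)

# Hypothetical setup: camera 1.5 m above the road, object 10 m ahead
print(ipm_ground_distance(10.0, 0.0, 1.5))  # point on the road: unchanged
print(ipm_ground_distance(10.0, 0.5, 1.5))  # point 0.5 m up: pushed farther away
```

This is why vehicles and pedestrians appear smeared away from the camera in a BEV image: the higher a point is, the farther along the ground it is placed.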

In this article, I will attempt to explain the idea of IPM. More importantly, this post is dedicated to how we can work out and apply the homography using only Python and NumPy.

Next, I will show that somewhat similar results can be obtained using OpenCV. We will be using a relatively common road scene from the Cityscapes dataset as an example. Feel free to message me with any questions or doubts, or about any mistakes you might have spotted.

The code for this article is available here. All inline code text refers to a variable or function in that code.

Setting up the problem

The problem that we are trying to solve is to transform a frontal-view image into a bird's-eye view image. IPM does this by removing the perspective effect from the front-facing camera image and remapping it onto a top-view 2D domain. The resulting BEV image attempts to preserve distances and parallel lines, correcting the perspective effect.

The following bullet points summarise the procedure for homography-based IPM:

- Model the road (X, Y, Z=0) as a flat 2D plane. Some approximation must be made with regard to resolution, as the road is discretized onto the BEV image.

- Determine and construct the projection matrix **P** from known extrinsic and intrinsic parameters. Usually, these are obtained through calibration.

- Transform and warp the road by applying P. This is also known as a perspective projection.

- Remap the frontal-view pixels to the new image plane.
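The four steps above can be sketched in a few lines of NumPy. This is a minimal sketch under my own assumptions, not the code accompanying this article: the world frame has X forward, Y left and Z up, the camera frame has x right, y down and z forward, and the intrinsics, pitch and camera height are hypothetical placeholders. The function names are also mine.

```python
import numpy as np

def road_to_image_homography(K, R, t):
    """H maps road-plane points (X, Y, 1), with Z = 0, to homogeneous pixels."""
    P = K @ np.hstack([R, t.reshape(3, 1)])  # 3x4 projection matrix
    return P[:, [0, 1, 3]]                   # Z = 0, so its column drops out

def ipm(image, H, x_range, y_range, resolution):
    """Resample `image` onto a BEV grid using nearest-neighbour lookup."""
    xs = np.arange(x_range[1], x_range[0], -resolution)  # far rows at the top
    ys = np.arange(y_range[1], y_range[0], -resolution)  # left side on the left
    X, Y = np.meshgrid(xs, ys, indexing="ij")
    road = np.stack([X.ravel(), Y.ravel(), np.ones(X.size)])  # 3 x N
    uvw = H @ road
    w = uvw[2]
    in_front = w > 1e-9  # ignore road points behind the camera
    u = np.full(w.shape, -1, dtype=int)
    v = np.full(w.shape, -1, dtype=int)
    u[in_front] = np.round(uvw[0, in_front] / w[in_front]).astype(int)
    v[in_front] = np.round(uvw[1, in_front] / w[in_front]).astype(int)
    height, width = image.shape[:2]
    inside = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)
    bev = np.zeros((X.size,) + image.shape[2:], dtype=image.dtype)
    bev[inside] = image[v[inside], u[inside]]  # remap frontal pixels
    return bev.reshape(X.shape + image.shape[2:])

# Hypothetical calibration: 640x480 image, camera 1.5 m up, pitched 10 degrees down
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
s, c = np.sin(np.deg2rad(10.0)), np.cos(np.deg2rad(10.0))
R = np.array([[0.0, -1.0, 0.0],   # camera x (right) = -Y
              [-s, 0.0, -c],      # camera y (down), tilted by the pitch
              [c, 0.0, -s]])      # camera z (optical axis), forward and down
t = -R @ np.array([0.0, 0.0, 1.5])  # camera centre expressed in the world frame

H = road_to_image_homography(K, R, t)
image = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
bev = ipm(image, H, x_range=(2.0, 20.0), y_range=(-5.0, 5.0), resolution=0.05)
```

Note that the lookup runs from the BEV grid back to the frontal image rather than the other way round. That way every BEV pixel receives a value and no holes appear, at the cost of repeating source pixels far from the camera.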

I will go into more detail in the following sections.

Figure 2. Source

Before we get started, it is important to know where the camera is relative to the road. For the road scene in Cityscapes, the camera is mounted on top of the vehicle, pitching slightly downwards. The exact position and orientation are written in the file camera.json. Note that the values are relative to the ego-vehicle.

Concepts

To understand IPM, some background knowledge about perspective projection and camera projective geometry is required. I will briefly describe what you need to know about those topics in this section.

1. Perspective projection

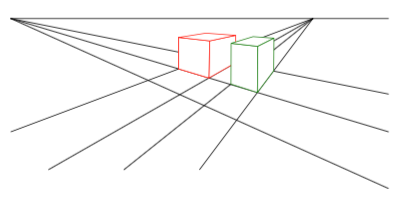

Perspective projection describes how the 3D world around us gets mapped onto a 2D plane. During this mapping, two parallel lines in the world (Euclidean space) are transformed into a pair of lines in the new plane that converge at a vanishing point, which is the image of their common point at infinity. Referring to figure 3, the parallel edges of the two cubes placed in the world are no longer parallel from where we are observing them. Lane lines on a road are an everyday example of this.

Figure 3

In the case of our example as shown in figure 4, when the road is observed from a different viewpoint, notice that the same region looks different. Parallel lines are no longer preserved under a projective transformation P.
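The convergence of parallel lines can be checked numerically. The sketch below projects two parallel lane lines lying on a flat road and intersects their images. The camera is a hypothetical pinhole with a horizontal optical axis, and the lane offsets of ±1.75 m are made-up values.

```python
import numpy as np

# Hypothetical pinhole camera, 1.5 m above the road, zero pitch.
# World frame: X forward, Y left, Z up. Camera frame: x right, y down, z forward.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = -R @ np.array([0.0, 0.0, 1.5])

def project(point):
    """Perspective projection of a 3D world point to homogeneous pixels."""
    p = K @ (R @ point + t)
    return p / p[2]

def image_line(lateral_offset):
    """Homogeneous image line through two projected points of one lane line."""
    near = project(np.array([5.0, lateral_offset, 0.0]))
    far = project(np.array([50.0, lateral_offset, 0.0]))
    return np.cross(near, far)

left, right = image_line(1.75), image_line(-1.75)
vp = np.cross(left, right)  # intersection of the two image lines
vp = vp / vp[2]
print(vp[:2])
```

The two lines never meet in the world, yet their images intersect at the vanishing point. With a horizontal optical axis, this coincides with the principal point (320, 240) of the assumed camera.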
