Hey there! It's been quite a while since my last post. In this article I wanna share another project I just finished. Once again, it's related to the computer vision field, but this time it's going to be different: instead of doing classification, I wanna generate new images using a VAE (Variational Autoencoder). I've actually already written an article about the traditional deep autoencoder.

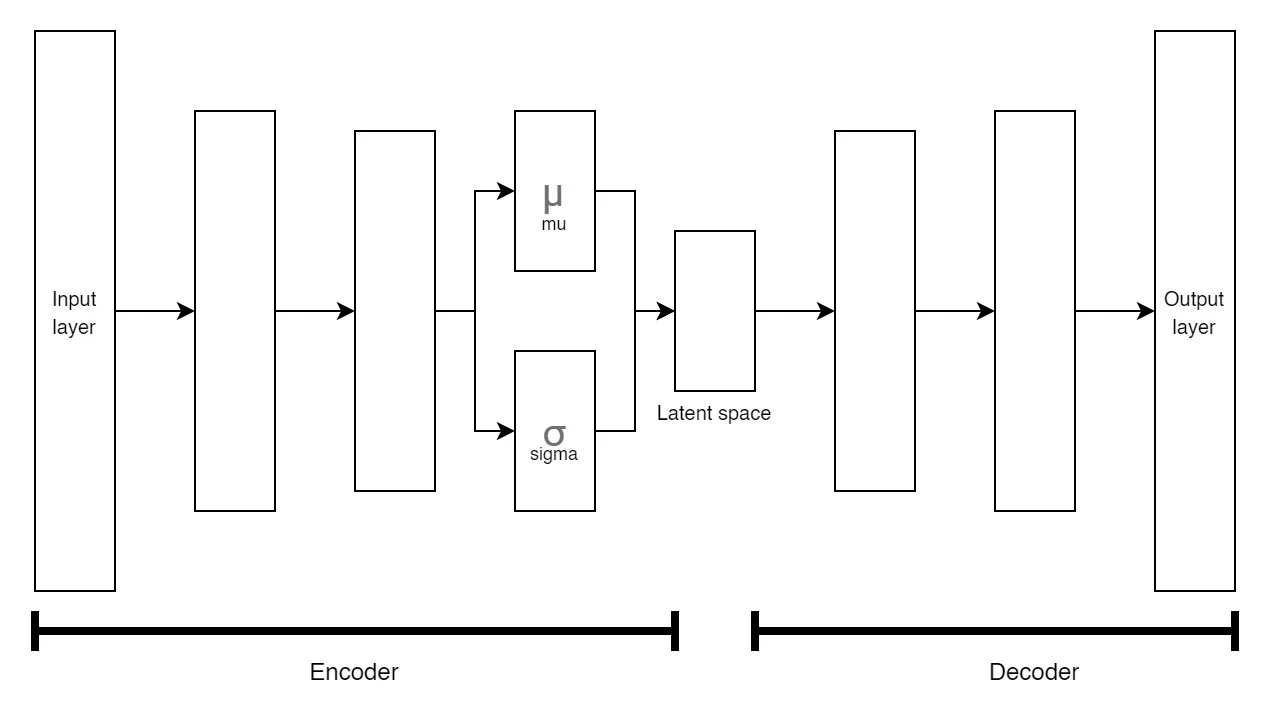

VAE neural net architecture

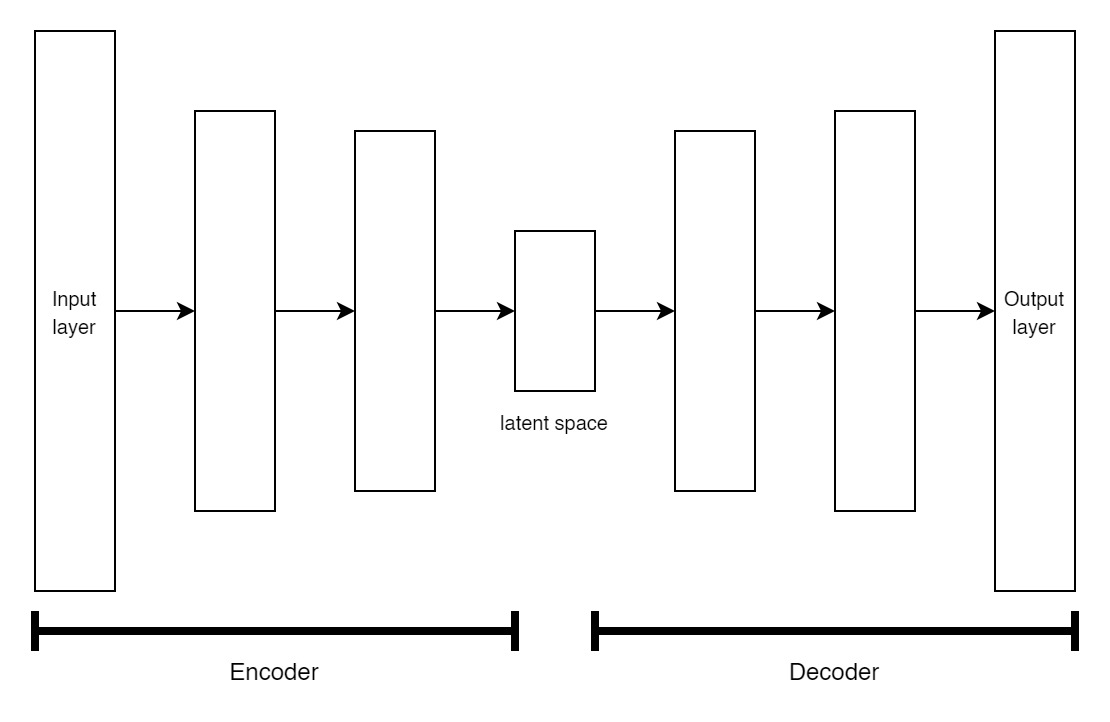

The two algorithms (VAE and AE) are essentially taken from the same idea: mapping original image to latent space (done by encoder) and reconstructing back values in latent space into its original dimension (done by decoder). However, there is a little difference in the two architectures. I display them in the figures below.

Traditional autoencoder.
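To make the shared encoder/decoder idea a bit more concrete, here is a minimal NumPy sketch of a traditional autoencoder's forward pass. The layer sizes (784 → 256 → 2) and the helper names `dense`, `encode` and `decode` are hypothetical choices for illustration, not necessarily what this project uses. The weights are random and untrained, so the reconstruction itself is meaningless; what matters is the shapes: the encoder squeezes each image into a small latent vector, and the mirrored decoder brings it back to the original dimension.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 28x28 grayscale images flattened to 784 values,
# one hidden layer of 256 units, and a 2-dimensional latent space.
INPUT_DIM, HIDDEN_DIM, LATENT_DIM = 784, 256, 2

def dense(in_dim, out_dim):
    """Random (untrained) weights and zero bias for a fully connected layer."""
    return rng.normal(0.0, 0.05, size=(in_dim, out_dim)), np.zeros(out_dim)

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Encoder and decoder mirror each other: 784 -> 256 -> 2 and 2 -> 256 -> 784.
enc_hidden = dense(INPUT_DIM, HIDDEN_DIM)
enc_latent = dense(HIDDEN_DIM, LATENT_DIM)
dec_hidden = dense(LATENT_DIM, HIDDEN_DIM)
dec_output = dense(HIDDEN_DIM, INPUT_DIM)

def encode(x):
    """Map a batch of flattened images to latent vectors."""
    w1, b1 = enc_hidden
    w2, b2 = enc_latent
    return relu(x @ w1 + b1) @ w2 + b2

def decode(z):
    """Reconstruct flattened images (pixel values in [0, 1]) from latent vectors."""
    w1, b1 = dec_hidden
    w2, b2 = dec_output
    return sigmoid(relu(z @ w1 + b1) @ w2 + b2)

images = rng.random((8, INPUT_DIM))  # a fake batch of 8 "images"
latent = encode(images)
reconstruction = decode(latent)
print(latent.shape, reconstruction.shape)  # (8, 2) (8, 784)
```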

I bet it doesn't even take you a second to spot the difference! Lemme explain a bit. The encoder and decoder halves of a traditional autoencoder are simply symmetrical. In a VAE, on the other hand, the encoder is slightly longer than the decoder thanks to the _mu_ and _sigma_ layers, which represent the mean and standard deviation vectors respectively. I do recommend reading this article if you wanna know in detail why these two layers are necessary; here I will focus more on the code implementation!
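Here is a rough idea of what those two extra layers do, again as a NumPy sketch with hypothetical sizes and helper names (`dense`, `vae_encode`, `sample_latent`), not the implementation used in this project. A shared hidden layer feeds a _mu_ head and a _sigma_ head, and a latent vector is then sampled as z = mu + sigma * eps, with eps drawn from a standard normal distribution (the so-called reparameterization trick). One assumption to flag: the sigma head here outputs log σ, which is then exponentiated; this is a common way to keep the standard deviation positive, not something taken from the figure.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 784 -> 256 shared hidden layer -> 2-dim latent space.
INPUT_DIM, HIDDEN_DIM, LATENT_DIM = 784, 256, 2

def dense(in_dim, out_dim):
    """Random (untrained) weights and zero bias for a fully connected layer."""
    return rng.normal(0.0, 0.05, size=(in_dim, out_dim)), np.zeros(out_dim)

shared = dense(INPUT_DIM, HIDDEN_DIM)
mu_head = dense(HIDDEN_DIM, LATENT_DIM)         # outputs the mean vector
log_sigma_head = dense(HIDDEN_DIM, LATENT_DIM)  # outputs log of the std vector (assumption)

def vae_encode(x):
    """Return the mean and (strictly positive) standard deviation vectors."""
    w, b = shared
    h = np.maximum(x @ w + b, 0.0)  # ReLU hidden layer
    mu = h @ mu_head[0] + mu_head[1]
    sigma = np.exp(h @ log_sigma_head[0] + log_sigma_head[1])
    return mu, sigma

def sample_latent(mu, sigma):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps

images = rng.random((8, INPUT_DIM))  # a fake batch of 8 "images"
mu, sigma = vae_encode(images)
z = sample_latent(mu, sigma)
print(mu.shape, sigma.shape, z.shape)  # (8, 2) (8, 2) (8, 2)
```

Once such a model is trained, generating a brand-new image boils down to drawing z from a standard normal distribution and feeding it to the decoder, which is what makes a VAE usable as a generator in the first place.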
