Science fiction is becoming reality as increasingly intelligent machines gradually emerge: ones that not only specialize in tasks like chess, but can also carry out higher-level reasoning, or even answer deep philosophical questions. For the past few decades, experts have been working toward the creation of such a human-like artificial intelligence, a so-called “strong” AI or artificial general intelligence (AGI), which can learn to perform a wide range of tasks as easily as a human might.

But while current AI development may take some inspiration from the neuroscience of the human brain, is it actually appropriate to compare the way AI processes information with the way humans do it?

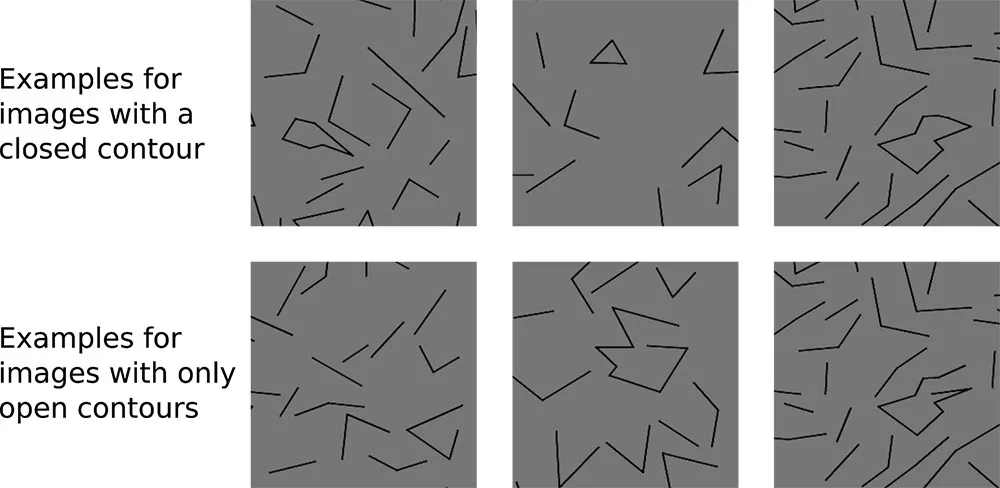

The answer to that question depends on how experiments are set up, and on how AI models are structured and trained, according to a new study by a team of German researchers from the University of Tübingen and other institutes.

The team’s study suggests that because AI and humans arrive at decisions in different ways, any generalizations drawn from such a comparison may not be completely reliable, especially if machines are used to automate critical tasks.
