When we develop and deliver a web application or a mobile app, do we know which browser, platform, or mobile device the end-user will be viewing it on? Every software company wants its application to provide consistent behavior and user experience in any browser and on any device. But is a sincere wish enough to make that happen? No. Cross-browser testing is essential but time-consuming. There is no shortcut to making your application work across so many platforms without putting in the sweat. But is there really no way to build an effective cross-browser testing strategy that is less time-consuming and laborious than it traditionally is?

There is.

But before diving into how we can make this process efficient, we need to understand why it is necessary. Like humans, browsers interpret and reproduce things differently. CSS styles can render differently in IE 8 compared to the latest versions of IE or Chrome. Elegant styling, fonts, image transparency, hover behavior, and even shadows can differ across browsers. They can also differ across versions of the same browser, because browsers use different rendering engines and each engine changes from version to version.

Improving the Efficiency of Cross-Browser Testing:

Define Device/Browser Support:

If you do not want to test on every browser, an effective strategy is to define the browsers and devices your application will support. This way, the development and testing teams know exactly where to focus. It also clarifies which technologies and features are feasible in your application.
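One way to make the support definition concrete is to keep it as data that developers, testers, and automated checks can all read. The sketch below assumes a hypothetical policy of minimum supported major versions per browser. The names `SUPPORT_POLICY`, `BrowserTarget`, and `is_supported` are illustrative, and the browser list and version numbers are placeholders, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical support policy: the minimum supported major version per browser.
# Browser names and version numbers are placeholders, not recommendations.
SUPPORT_POLICY = {
    "chrome": 100,
    "firefox": 100,
    "safari": 15,
    "edge": 100,
}


@dataclass(frozen=True)
class BrowserTarget:
    name: str           # lowercase browser name, e.g. "chrome"
    major_version: int  # major version, e.g. as reported by your analytics tool


def is_supported(target: BrowserTarget, policy: dict = SUPPORT_POLICY) -> bool:
    """A target is supported if its browser is listed and meets the minimum version."""
    minimum = policy.get(target.name)
    return minimum is not None and target.major_version >= minimum


print(is_supported(BrowserTarget("chrome", 120)))  # True
print(is_supported(BrowserTarget("ie", 8)))        # False: IE is not in the policy
```

Any browser missing from the policy counts as unsupported. With this default, the teams have to add a browser to the policy deliberately before it is treated as supported.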

Leverage Analytics Data to Prioritize Cross-Browser Testing:

Google Analytics helps you track important information, such as the browsers, devices, and operating systems your end-users most commonly use to access your application. Using this data, we can prioritize the browser-version/device matrix.

This works for a website that has been on the market for quite some time. If your application is new, you can use analytics data from similar websites and assume your audience will behave the same way. Also, look up each browser's market share in the countries where your application generates the most traffic, and cross-reference it with your own usage data. Present the resulting browser/device list in a simple format that is easy to understand.

StatCounter is another website that helps you map browser/device usage, filter it by time, location, and OS, and sort it the way you want.
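After the usage data is exported from an analytics tool, prioritizing it is mostly a matter of sorting by share of traffic. The sketch below makes three assumptions. The data is already a mapping from a browser/version/OS label to a session count. The session counts are made up. The 90% coverage target is an arbitrary choice. The function keeps the most-used combinations, most used first, until their combined share reaches the target.

```python
# Hypothetical session counts per browser/version/OS, e.g. exported from an analytics tool.
usage = {
    "Chrome 120 / Windows": 4200,
    "Safari 17 / iOS": 2500,
    "Chrome 120 / Android": 1800,
    "Firefox 121 / Windows": 800,
    "Samsung Internet 23 / Android": 400,
    "IE 11 / Windows": 300,
}


def prioritize(sessions: dict, coverage_target: float = 0.90) -> list:
    """Return (label, traffic share) pairs, most used first, stopping once the
    cumulative share reaches coverage_target."""
    total = sum(sessions.values())
    if total == 0:
        return []
    ranked = sorted(sessions.items(), key=lambda item: item[1], reverse=True)
    selected = []
    cumulative = 0.0
    for label, count in ranked:
        if cumulative >= coverage_target:
            break
        share = count / total
        selected.append((label, share))
        cumulative += share
    return selected


# Print a simple, easy-to-read priority list.
for label, share in prioritize(usage):
    print(f"{label:<30} {share:6.1%}")
```

With this hypothetical data, the script prints the four most-used combinations, which together cover 93% of sessions. The remaining entries still belong in the matrix. They are simply lower priority.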

Form a Cross-Browser Testing Matrix:

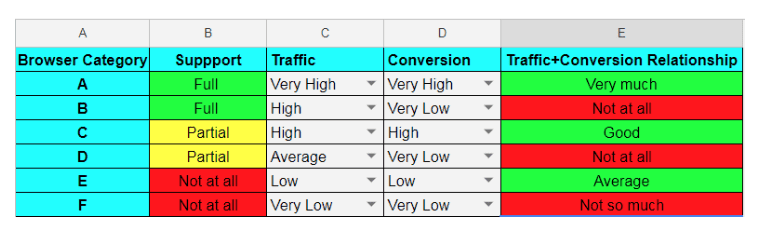

Once your analytics data is sorted, it is time to classify the browsers based on their usage/preference.

A: Browsers/devices that are most preferred and generate the most traffic.

B: Browsers/devices that are not preferred but fully support your application/app.

C: Browsers/devices that are most preferred but only partially supported.

D: Browsers/devices that are least preferred and only partially supported.

E: Browsers/devices that are preferred but not supported at all.

F: Browsers/devices that are neither preferred nor supported.

Based on the traffic and conversion rate of each browser/device, form a table such as the one above. The table tells QA clearly which browsers/devices need thorough testing, which can be checked later, and which can be safely ignored.
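The A-F classification can be read as two axes: whether a browser/device is preferred, and how well it is supported (fully, partially, or not at all). The sketch below encodes that reading. It makes several simplifications. It uses traffic share only and does not model conversion rate. It collapses "most preferred" and "least preferred" into a single yes/no flag. The 5% cut-off and the sample rows are hypothetical.

```python
from enum import Enum


class Support(Enum):
    FULL = "full"
    PARTIAL = "partial"
    NONE = "none"


# (is_preferred, support level) -> matrix category, mirroring the A-F list above.
CATEGORY = {
    (True, Support.FULL): "A",
    (False, Support.FULL): "B",
    (True, Support.PARTIAL): "C",
    (False, Support.PARTIAL): "D",
    (True, Support.NONE): "E",
    (False, Support.NONE): "F",
}

# Hypothetical cut-off: a browser/device counts as "preferred" at 5% of traffic or more.
PREFERRED_SHARE = 0.05


def categorize(traffic_share: float, support: Support) -> str:
    """Map a browser/device's traffic share and support level to a category A-F."""
    return CATEGORY[(traffic_share >= PREFERRED_SHARE, support)]


# Hypothetical rows: (browser/device, share of traffic, support level).
rows = [
    ("Chrome / Windows", 0.42, Support.FULL),
    ("Safari / iOS", 0.25, Support.PARTIAL),
    ("Opera / Windows", 0.01, Support.FULL),
    ("IE 8 / Windows", 0.02, Support.PARTIAL),
    ("IE 6 / Windows", 0.001, Support.NONE),
]

for name, share, support in sorted(rows, key=lambda r: categorize(r[1], r[2])):
    print(categorize(share, support), name)
```

To weigh conversion rate as well, you could replace the boolean "preferred" test with a score that combines share and conversion. The lookup table would stay the same.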

Clearly Define the Objective of Your Cross-Browser Testing:

There are only two objectives for performing cross-browser testing: finding defects and performing sanity checks. Suppose my intention is to confirm that my application behaves as expected on the most preferred browsers. Then I perform sanity checks, for example, on Chrome and Safari on desktop and mobile devices in their respective OSs. Suppose instead that I am driven towards perfection and want to ensure that my application is fault-free on browser/device categories B, C, and D. Then I throw it at the most problematic browsers/devices, such as IE 8 and native Android browsers. These browsers are used by only a small percentage of end-users. Even so, they are valuable for revealing how their obscure rendering engines affect your application.

The Dilemma in the Cross-Browser Testing Strategy:

Traditional cross-browser testing focuses on the most preferred browsers, whereas most bugs are found in the least-used, partially supported, problematic browsers. So we are faced with a dilemma. Option 1, testing the most preferred browsers, is a safe bet that satisfies the majority but does not provide full coverage. Option 2, testing the problematic browsers, is where cross-browser testing provides real value by exposing browser/device-specific issues.

So what do you do when you detect an issue on a problematic browser/device? You have three options:

- Ignore the issue

- Fix the issue hoping that nothing else has been affected.

- Fix the issue and retest it on all previously tested browsers.

Ideally, the last option is best because it lets you be truly confident in the software you have built. But it is tremendously inefficient. That is why we use what we call the 'three-pronged attack'.

Three-Pronged Attack:

The answer to this dilemma is to be smart about how we perform cross-browser testing. We fulfill both objectives, defect detection and sanity validation, while keeping the whole process efficient.

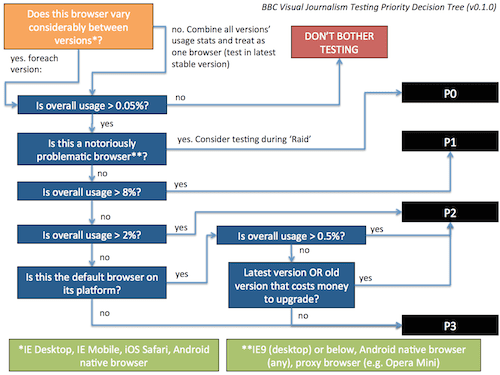

Step 1: Reconnaissance. Perform exploratory tests on the preferred browsers, get a feel for how each browser behaves, and find and fix any bugs you encounter.

Step 2: Raid. Subject the application to a small subset of problematic browsers, and find and fix any bugs you encounter.

Step 3: Clearance. Perform a sanity check to ensure that your most preferred browsers provide the same experience and functional behavior as they did before the fixes.

In the first step, as in the reconnaissance phase on a battlefield, there isn't much time available. You have to be sharp enough to find as many bugs, and as many important ones, as you can while limiting the time invested. The second phase, Raid, is more time-intensive but yields worthwhile results that stabilize your application. The third phase, Clearance, is the most grueling of all. It confirms that your application is stable, but you still need to stay on high alert. A previously unspotted issue, or one introduced by the fixes, may still surface.
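The three steps can be sketched as a plan generator over an already-categorized matrix. The generator makes two assumptions. Reconnaissance and clearance both use the category A browsers. The raid takes a small, capped subset of categories B, C, and D, the categories the objectives section associates with problematic browsers. The ordering of raid candidates, the `raid_size` of 3, and the sample targets are all hypothetical choices. This is not a prescribed procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str
    category: str  # matrix category "A" to "F"


# Assumption: partially supported browsers (C, D) are the likeliest to break, so the raid
# picks them before rarely used but fully supported ones (B). E and F are left out here.
RAID_PRIORITY = {"C": 0, "D": 1, "B": 2}


def three_pronged_plan(targets: list, raid_size: int = 3) -> dict:
    """Split a categorized matrix into reconnaissance, raid and clearance runs."""
    preferred = [t.name for t in targets if t.category == "A"]
    candidates = sorted(
        (t for t in targets if t.category in RAID_PRIORITY),
        key=lambda t: RAID_PRIORITY[t.category],  # sorted() is stable within a category
    )
    return {
        # Step 1: exploratory tests on the preferred browsers; fix what you find.
        "reconnaissance": preferred,
        # Step 2: a small subset of problematic browsers; fix what you find.
        "raid": [t.name for t in candidates[:raid_size]],
        # Step 3: sanity re-check of the preferred browsers only, not every browser tested.
        "clearance": list(preferred),
    }


# Hypothetical categorized matrix.
targets = [
    Target("Chrome / Windows", "A"),
    Target("Safari / iOS", "A"),
    Target("Opera / Windows", "B"),
    Target("Safari / macOS", "C"),
    Target("Android stock browser", "D"),
    Target("IE 8 / Windows", "D"),
    Target("IE 6 / Windows", "F"),
]

for phase, browsers in three_pronged_plan(targets).items():
    print(f"{phase}: {', '.join(browsers)}")
```

The efficiency comes from the clearance list. After the raid fixes, only the preferred browsers are re-checked, not every browser tested so far.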

Important Points to Note in the Three-Pronged Attack:

- It is rare for something to work in Chrome on Windows but not in Chrome on macOS.

- Neighboring versions of a browser also show little difference in UI or functionality, for example, Firefox 49 and Firefox 50.

- This rule does not apply to IE, so it is best to treat each IE version separately.

- Versions also matter for native Android browsers, which are problematic to the core.
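Taken together, these notes are rules for collapsing a large browser/OS/version matrix into fewer representative targets. The sketch below encodes them under several assumptions. Only Chrome is treated as OS-independent, because that is the only case the notes cover. A "neighbor" version is one within one major version (`window=1`). The labels `"ie"` and `"android-native"` are hypothetical names for the browsers whose versions are all kept.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Target(NamedTuple):
    browser: str    # hypothetical labels, e.g. "chrome", "firefox", "ie", "android-native"
    version: float  # e.g. 50 for Firefox 50, 4.4 for the Android 4.4 stock browser
    os: str


OS_INDEPENDENT = {"chrome"}                   # Chrome rarely differs between Windows and macOS
VERSION_SENSITIVE = {"ie", "android-native"}  # every version is tested separately


def reduce_matrix(targets: list, window: float = 1) -> list:
    """Keep one representative target per equivalence class, newest version first."""
    groups = defaultdict(list)
    for t in targets:
        os_key = "*" if t.browser in OS_INDEPENDENT else t.os
        groups[(t.browser, os_key)].append(t)

    reduced = []
    for (browser, _), members in groups.items():
        last_kept: Optional[Target] = None
        for t in sorted(members, key=lambda m: m.version, reverse=True):
            if last_kept is None:
                keep = True
            elif browser in VERSION_SENSITIVE:
                keep = t.version != last_kept.version  # distinct versions all count
            else:
                keep = last_kept.version - t.version > window  # neighbors are skipped
            if keep:
                reduced.append(t)
                last_kept = t
    return reduced


# Hypothetical matrix.
matrix = [
    Target("chrome", 120, "Windows"),
    Target("chrome", 120, "macOS"),
    Target("firefox", 50, "Windows"),
    Target("firefox", 49, "Windows"),
    Target("ie", 11, "Windows"),
    Target("ie", 10, "Windows"),
    Target("ie", 9, "Windows"),
    Target("android-native", 4.4, "Android"),
    Target("android-native", 4.3, "Android"),
]

for target in reduce_matrix(matrix):
    print(target)
```

Here, nine targets collapse to seven: Chrome on macOS and Firefox 49 are dropped, while every IE and native Android version survives.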

Find Browser-Agnostic Bugs During the Reconnaissance Stage:

Browser-agnostic bugs are independent of the hardware, OS, or browser used to view the application. Suppose an end user reports that the shopping cart screen of your application looks really bad in landscape mode on their Samsung Note 2. You waste time researching why this happened and how on earth you could fix it, without realizing that the issue is not related to a specific device at all. It is a device-agnostic bug in the guise of a device-specific issue. Your shopping cart screen simply looks bad at a certain viewport width, and the issue occurs on every device at that width. That is why it is important to have foresight and catch such issues right at the beginning, to avoid wasting a lot of effort. Below are some tricks for rooting out browser-agnostic bugs on your most preferred browser, as the first line of attack.

- Resize the viewport to different widths and aspect ratios to test how responsive your layout is.

- Zoom in and out to check whether the images on your page become skewed.

- Turn off JavaScript and check whether the basic content of your page still loads as it should.

- Turn off CSS and check the behavior and UI alignment of your page.

- Turn off both CSS and JavaScript and check the behavior.

- Throttle your network speed and check that the page still loads with at least the minimum acceptable performance.
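Several of these checks can be scripted against the most preferred browser. The sketch below uses Playwright for Python. Playwright is a tool chosen here for illustration, not one the article prescribes. The sketch covers the viewport sweep, JavaScript off, CSS off, and both off. It turns CSS off by aborting requests for external stylesheets, so inline `<style>` blocks still apply. Zoom and network throttling are not covered. The class and function names, the 1280-pixel base width, and the `example.com` URL are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgnosticCheck:
    name: str
    width: int
    height: int = 800
    javascript: bool = True
    block_stylesheets: bool = False


def build_checks(widths: list, base_width: int = 1280) -> list:
    """One run per viewport width, plus JavaScript-off, CSS-off and both-off runs."""
    checks = [AgnosticCheck(f"viewport-{w}", width=w) for w in widths]
    checks += [
        AgnosticCheck("no-js", width=base_width, javascript=False),
        AgnosticCheck("no-css", width=base_width, block_stylesheets=True),
        AgnosticCheck("no-js-no-css", width=base_width, javascript=False, block_stylesheets=True),
    ]
    return checks


def abort_stylesheets(route) -> None:
    # Aborts requests for external stylesheets only; inline <style> blocks still apply.
    if route.request.resource_type == "stylesheet":
        route.abort()
    else:
        route.continue_()


def run_checks(url: str, checks: list) -> None:
    """Save a full-page screenshot per check in Chromium for manual review."""
    from playwright.sync_api import sync_playwright  # optional dependency, imported lazily

    with sync_playwright() as p:
        browser = p.chromium.launch()
        for check in checks:
            context = browser.new_context(
                viewport={"width": check.width, "height": check.height},
                java_script_enabled=check.javascript,
            )
            page = context.new_page()
            if check.block_stylesheets:
                page.route("**/*", abort_stylesheets)
            page.goto(url)
            page.screenshot(path=f"{check.name}.png", full_page=True)
            context.close()
        browser.close()


# Example (needs `pip install playwright` and `playwright install chromium`):
# run_checks("https://example.com/cart", build_checks([320, 375, 768, 1024, 1280]))
```

The sweep is driven by viewport widths rather than device names. A layout that breaks at one width then shows up as a single failing screenshot instead of a scattering of "device-specific" reports.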

Before moving to phase 2, find and fix all such browser-agnostic issues. Fixing them at a later stage takes far more effort.

Test in High-Risk, Problematic Browsers During the Raid Stage:

Optimizing padding in one browser might break it in another. Every code change carries risk. To minimize that risk, we have to be smart about how we test and fix issues so that we reduce duplicated effort.
