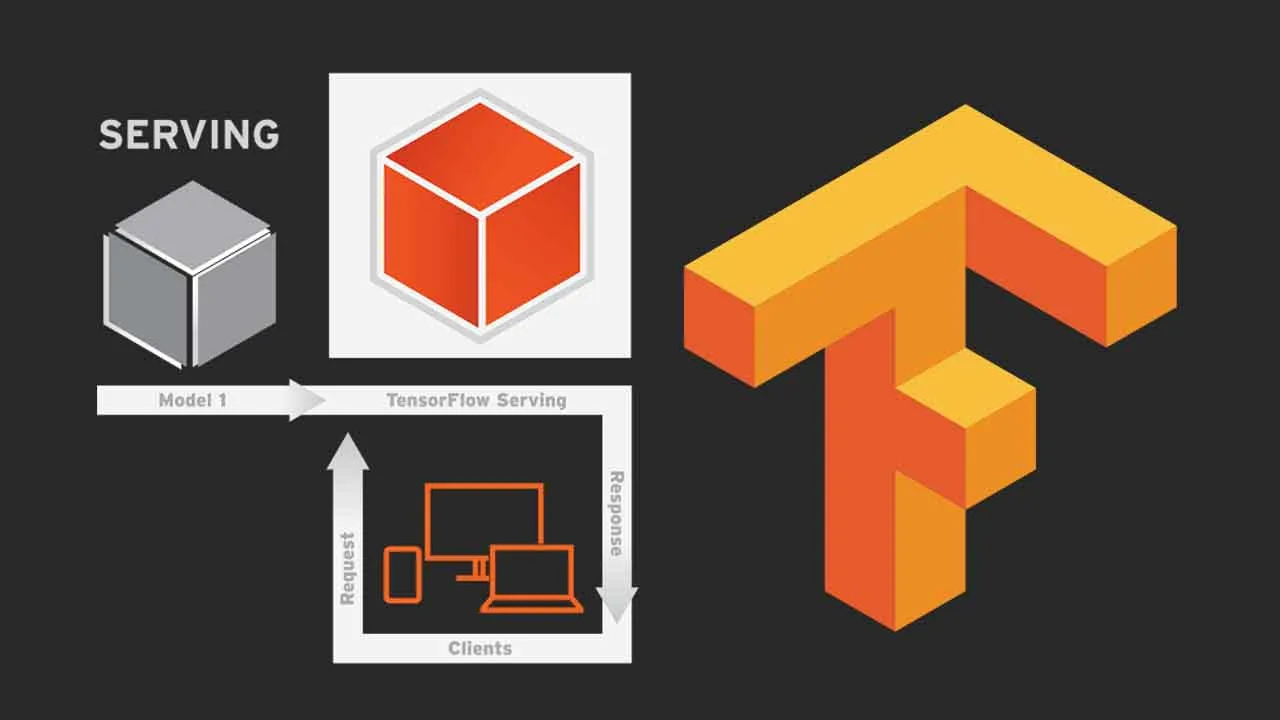

TensorFlow Serving is a flexible, high-performance serving system for machine learning models, designed for production environments. TensorFlow Serving makes it easy to deploy new algorithms and experiments, while keeping the same server architecture and APIs. TensorFlow Serving provides out-of-the-box integration with TensorFlow models, but can be easily extended to serve other types of models and data.

📖 Introduction

With the rise of **MLOps** as the standard way to manage ML models throughout their lifecycle, there are now many different solutions for serving ML models in production. Perhaps the most popular one is TensorFlow Serving, developed by the TensorFlow team to serve their models in production environments.

This post is a guide on how to train, save, serve and use TensorFlow ML models in production environments. Throughout the GitHub repository linked to this post, we will prepare and train a custom CNN model for image classification on The Simpsons Characters Data dataset, which will later be deployed using TensorFlow Serving.
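
To give an early idea of what the "use" step looks like, here is a minimal sketch of how a client could build a prediction request for TensorFlow Serving's REST API. The endpoint pattern (`/v1/models/<name>:predict`), the `instances` payload key and the default REST port `8501` come from TensorFlow Serving itself; the model name `simpsons`, the host and the tiny dummy image are just assumptions for illustration, not the values used in the repository.

```python
import json


def build_predict_request(host, model_name, instances, port=8501):
    """Build the URL and JSON body for a TensorFlow Serving REST predict call.

    Nothing is sent here; the pair can be handed to any HTTP client as a POST.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances})
    return url, body


# Hypothetical input: one 2x2 RGB "image" with all pixels set to zero.
dummy_image = [[[0.0, 0.0, 0.0] for _ in range(2)] for _ in range(2)]

# "simpsons" is a placeholder model name, not necessarily the one in the repo.
url, body = build_predict_request("localhost", "simpsons", [dummy_image])
print(url)
```

The request is only built, not sent, so the snippet runs without a server; with a running TensorFlow Serving instance, the same `url` and `body` could be sent as a POST request using the HTTP client of your choice.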

To get a better understanding of the whole process presented in this post, I personally recommend reading it alongside the resources available in the repository, and trying to reproduce it with the same or a different TensorFlow model, as “practice makes perfect”.

alvarobartt/serving-tensorflow-models

#deep-learning #tensorflow-serving #tensorflow