How does it work?

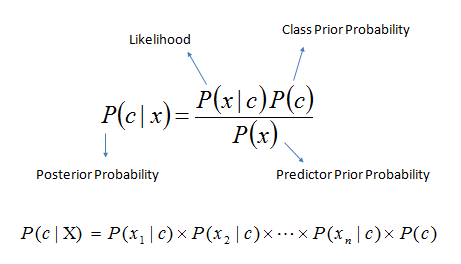

When the algorithm has a probability for a hypothesis, it updates its hypothesis’s unreliable probability as it learns new evidence supporting or opposing the hypothesis, this will impact and ultimately determine the probability of the hypothesis’s accuracy.

The Naive Bayes algorithm is a classification model for binary, and multiclass classification problems.

The ‘Naive’ part of Naive Bayes is due to the calculation of the probabilities for each hypothesis. Each hypothesis is simplified to make its calculation tractable. It is assumed that each attribute or feature is conditionally independent, meaning that their value has no impact or relation to any other concerning features. The problem with this is that this is highly unlikely when working with real-world data and real-world problems.

Naive Bayes is generally used for a linear dataset, although this isn’t always the case, it is the convention.

When deciding to use Naive Bayes, it isn’t used in complex datasets, however, it is a good choice as an initial baseline for your problems. If Naive Bayes doesn’t produce accurate results based on the chosen metric, it may be a good idea to entertain a new more sophisticated machine learning model, perhaps one that can separate non-linear data.

#artificial-intelligence #data-science #classification #machine-learning #naïve-bayes