Apache Parquet is a columnar storage format available to any project […], regardless of the choice of data processing framework, data model or programming language.

This description is a good summary of this format. This post will talk about the features of the format and why it is beneficial to analytical data queries in the data warehouse or lake.

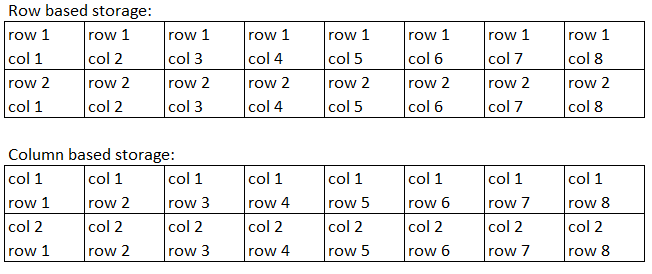

Data are stored row after row with each row containing all columns/fields

https://www.ellicium.com/parquet-file-format-structure/

The first feature is the columnar storage nature of the format. This simply means that data is encoded and stored by columns instead of by rows. This pattern allows for analytical queries to select a subset of columns for all rows. Parquet stores columns as chunks and can further split files within each chunk too. This allows restricting the disk i/o operations to a minimum.

The second feature to mention is data schema and types. Parquet is a binary format and allows encoded data types. Unlike some formats, it is possible to store data with a specific type of boolean, numeric( int32, int64, int96, float, double) and byte array. This allows clients to easily and efficiently serialise and deserialise the data when reading and writing to parquet format.

In addition to the data types, Parquet specification also stores metadata which records the schema at three levels; file, chunk(column) and page header. The footer for each file contains the file metadata. This records the following:

- Version (of Parquet format)

- Data Schema

- Column metadata (type, number of values, location, encoding)

- Number of row groups

- Additional key-value pairs

#data-lake #apache-parquet #analytics-tool #parquet #columnar-databases