Sequence Models

Sequence models are machine learning models that take sequences of data as input, produce them as output, or both. Sequential data includes text streams, audio clips, video clips, and time-series data. Recurrent Neural Networks (RNNs) are a popular family of models for this kind of data.

Applications of Sequence Models

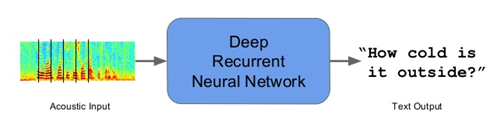

1. Speech recognition: In speech recognition, the input is an audio clip and the model has to generate its text transcript. Here both the input and the output are sequences of data.

Speech recognition (Source: Author)

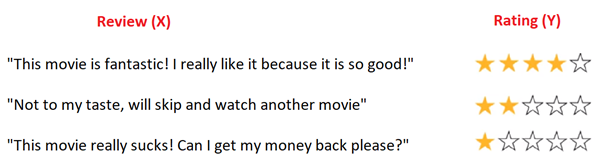

2. Sentiment Classification: In sentiment classification, the opinion expressed in a piece of text is categorized. Here the input is a sequence of words.

Sentiment Classification (Source: Author)

3. Video Activity Recognition: In video activity recognition, the model needs to identify the activity in a video clip. A video clip is a sequence of video frames, so here the input is a sequence of data.

Video Activity Recognition (Source: Author)

These examples show that sequence models have a range of applications. Sometimes both the input and the output are sequences; in other cases only the input or only the output is a sequence. The recurrent neural network (RNN) is a popular sequence model that performs well on sequential data.

Recurrent Neural Networks (RNNs)

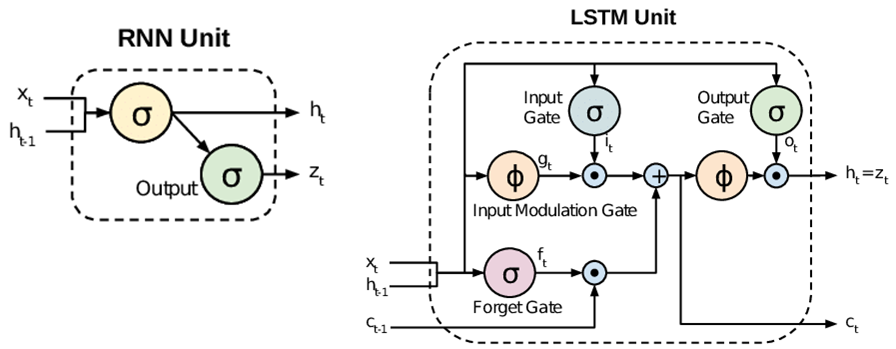

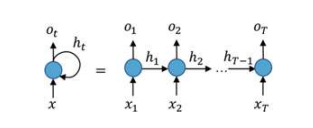

A Recurrent Neural Network (RNN) is a deep learning model: a type of artificial neural network architecture specialized for processing sequential data. RNNs are mostly used in Natural Language Processing (NLP), and they are also used for time-series prediction. An RNN maintains an internal memory (a hidden state carried from one time step to the next), which makes it well suited to machine learning problems that involve sequential data.

Traditional RNN architecture (Source: Stanford.edu)

The main advantage of RNNs over standard neural networks is parameter sharing. A standard neural network does not share the features it learns at one position of a sequence with other positions, whereas an RNN applies the same weights at every time step. An RNN can also remember its previous inputs, which a standard neural network cannot do, and it uses this historical information in its computation.
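The weight sharing and memory described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the names `rnn_step`, `rnn_forward`, `W_xh`, `W_hh` and `b_h`, the `tanh` activation, the zero initial state and the toy weight values are all assumptions made for the example.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run the same cell over every element of the sequence xs."""
    h = [0.0] * len(b_h)          # initial hidden state (assumed to be zero)
    states = []
    for x_t in xs:                # the SAME W_xh, W_hh, b_h are reused at every step
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Toy weights (illustrative values only): 2 input features, 2 hidden units
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.6]]
b_h = [0.0, 0.1]

seq_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
seq_b = [[0.0, 1.0], [0.0, 1.0], [1.0, 1.0]]  # differs from seq_a only at the first step

states_a = rnn_forward(seq_a, W_xh, W_hh, b_h)
states_b = rnn_forward(seq_b, W_xh, W_hh, b_h)
```

The two sequences end with identical inputs, yet their final hidden states differ, because the state at each step still carries a trace of the first input. A standard feed-forward network that sees only the last input could not tell the two sequences apart.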

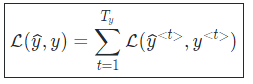

Loss function

In an RNN, the loss function is defined in terms of the loss at each time step, typically as their sum.
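In symbols, and assuming the per-step losses are simply added up, the total loss can be written as follows. The notation is an assumption made here for illustration: $T_y$ is the number of output steps, and $\hat{y}^{\langle t \rangle}$ and $y^{\langle t \rangle}$ are the prediction and the target at step $t$.

$$
\mathcal{L}(\hat{y}, y) = \sum_{t=1}^{T_y} \mathcal{L}\big(\hat{y}^{\langle t \rangle}, y^{\langle t \rangle}\big)
$$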

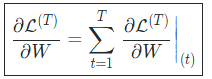

The derivative of the Loss with respect to Weight

In an RNN, backpropagation is performed at each point in time, a procedure known as backpropagation through time (BPTT).
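To see what backpropagating at each point in time can look like, here is a small sketch for a scalar RNN. It is a toy under stated assumptions, not the method of any particular library: the recurrence `h_t = tanh(w * h_{t-1} + u * x_t)`, the squared-error loss summed over steps, and the names `forward`, `total_loss` and `bptt_grad_w` are all chosen for this example. Because the weight `w` is shared across time, its gradient collects one term per time step.

```python
import math

def forward(w, u, xs):
    """Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t), starting from h = 0."""
    hs = [0.0]                          # hs[0] is the initial state
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs                           # hs[t + 1] is the state after input t

def total_loss(w, u, xs, ys):
    """Sum of per-step squared errors (an assumed loss for this sketch)."""
    hs = forward(w, u, xs)
    return sum(0.5 * (h - y) ** 2 for h, y in zip(hs[1:], ys))

def bptt_grad_w(w, u, xs, ys):
    """dLoss/dw, accumulated by walking backwards through the time steps."""
    hs = forward(w, u, xs)
    grad_w = 0.0
    dh_next = 0.0                       # gradient flowing in from later time steps
    for t in reversed(range(len(xs))):
        h_t, h_prev = hs[t + 1], hs[t]
        dh = (h_t - ys[t]) + dh_next    # this step's loss plus all future steps
        da = dh * (1.0 - h_t ** 2)      # back through tanh
        grad_w += da * h_prev           # the shared weight gets one term per step
        dh_next = da * w                # pass the gradient back to h_{t-1}
    return grad_w

# Illustrative values only
w, u = 0.7, -0.4
xs = [1.0, 0.5, -1.0, 2.0]
ys = [0.0, 0.2, -0.1, 0.3]

analytic = bptt_grad_w(w, u, xs, ys)

# Sanity check against a central finite difference
eps = 1e-6
numeric = (total_loss(w + eps, u, xs, ys) - total_loss(w - eps, u, xs, ys)) / (2 * eps)
```

Comparing `analytic` with `numeric` is a quick way to check that the backward loop is consistent with the forward pass; the two values should agree to several decimal places.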

RNN Architectures

There are several RNN architectures, distinguished by the number of inputs and outputs:

1. One to Many Architecture: Image captioning is a good example of this architecture. The model takes one image and outputs a sequence of words, so there is only one input but many outputs.

2. Many to One Architecture: Sentiment classification is a good example of this architecture. A given sentence is classified as positive or negative, so the input is a sequence of words and the output is a binary classification.

3. Many to Many Architecture: There are two cases in many to many architectures,
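The one-to-many and many-to-one patterns in the list above differ mainly in where inputs enter the recurrence and where outputs leave it. The toy sketch below illustrates this with a scalar recurrent update. The function names, the default weights `w_h` and `w_x`, the sigmoid read-out, and the choice to feed each output back in as the next input are all assumptions for illustration; a real captioning or sentiment model would use learned, vector-valued parameters.

```python
import math

def step(h_prev, x, w_h=0.5, w_x=1.0):
    """Shared recurrent update (toy scalar version, hypothetical weights)."""
    return math.tanh(w_h * h_prev + w_x * x)

def many_to_one(xs):
    """Read the whole sequence, emit a single output at the end."""
    h = 0.0
    for x in xs:
        h = step(h, x)
    return 1.0 / (1.0 + math.exp(-h))   # e.g. a score for the "positive" class

def one_to_many(x, n_outputs):
    """Take a single input, then keep unrolling to emit a sequence."""
    h = step(0.0, x)
    outputs = [h]
    for _ in range(n_outputs - 1):
        h = step(h, outputs[-1])        # feed the previous output back in
        outputs.append(h)
    return outputs

score = many_to_one([0.2, -0.1, 0.7])     # 3 inputs -> 1 output
caption = one_to_many(0.9, n_outputs=4)   # 1 input -> 4 outputs
```

Both functions reuse the same `step`, which reflects the idea that these architectures share one recurrent cell and only change how it is wired to inputs and outputs.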
