Apache Hudi is in use at organizations such as Alibaba Group, EMIS Health, Linknovate, Tathastu.AI, Tencent, and Uber, and is supported as part of Amazon EMR by Amazon Web Services and Google Cloud Platform. Recently, Amazon Athena adds support for querying Apache Hudi datasets in Amazon S3-based data lake. In this blog, I am going to test it and see if Athena can read Hudi format data set in S3.

Preparation — Spark Environment, S3 Bucket

We need Spark to write Hudi data. Login to Amazon EMR and launch a spark-shell:

$ export SCALA_VERSION=2.12

$ export SPARK_VERSION=2.4.4

$ spark-shell \

--packages org.apache.hudi:hudi-spark-bundle_${SCALA_VERSION}:0.5.3,org.apache.spark:spark-avro_${SCALA_VERSION}:${SPARK_VERSION} \

--conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

...

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.4

/_/

Using Scala version 2.12.10 (OpenJDK 64-Bit Server VM, Java 1.8.0_242)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

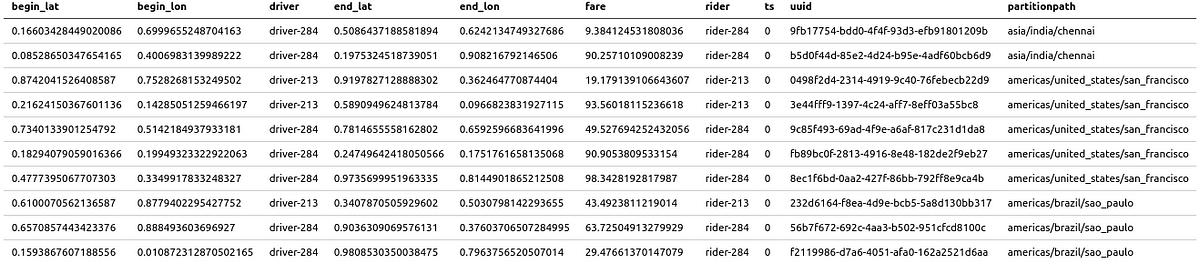

Now input the following scala code to setup table name, base path and a data generator to generate records for this article. Here we set the basepath to a folder s3://hudi_athena_test/hudi_trips in Amazon S3 bucket, so we can query it later:

import org.apache.hudi.QuickstartUtils._

import scala.collection.JavaConversions._

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.config.HoodieWriteConfig._

val tableName = "hudi_trips"

val basePath = "s3://hudi_athena_test/hudi_trips"

val dataGen = new DataGenerator

#data-lake #athena #hudi #aws-emr #spark