Introduction

Writing a custom implementation of a popular algorithm can be compared to playing a musical standard. As long as the code faithfully reflects the equations, the functionality remains unchanged; it is, indeed, just like playing from notes. However, it lets you master your tools and practice your ability to hear and think.

In this post, we are going to re-play the classic Multi-Layer Perceptron. Most importantly, we will play the solo called backpropagation, which is itself one of the machine-learning standards.

As usual, we are going to show how the math translates into code. In other words, we will take the notes (equations) and play them using bare-bones NumPy.

FYI: Feel free to check out another “implemented from scratch” article on Hidden Markov Models here.

Overture — A Dense Layer

Data

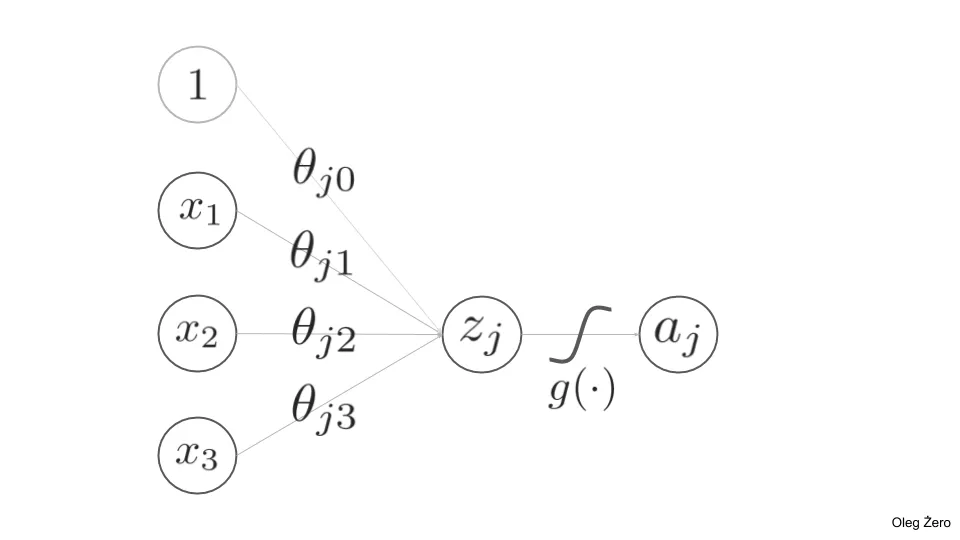

Let our (most generic) data be described as pairs of question-answer examples $(\mathbf{x}^{(i)}, y^{(i)})$, where $\mathbf{X} \in \mathbb{R}^{m \times n}$ is a matrix of feature vectors, $\mathbf{y} \in \mathbb{R}^{m}$ is a vector of labels, and $i$ refers to the index of a particular data example. Here, $n$ is the number of features and $m$ is the number of examples, so $i \in \{1, 2, \dots, m\}$. We also assume that $y^{(i)} \in \{0, 1\}$, which poses a binary classification problem. It is worth mentioning that the approach would not be much different if $y$ were a multi-class or a continuous variable (regression).
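To make the notation concrete, here is a minimal sketch with a tiny, hypothetical data set (the numbers are invented for illustration only). It shows how a pair $(\mathbf{x}^{(i)}, y^{(i)})$ corresponds to one row of X and one entry of y, and that NumPy's 0-based indexing shifts the math index $i = 1, \dots, m$ to rows $0, \dots, m - 1$.

```python
import numpy as np

# Hypothetical toy data: m = 3 examples, n = 2 features (values invented for illustration).
X = np.array([[0.5, 1.2],
              [1.0, -0.3],
              [-0.7, 0.8]])   # shape (m, n)
y = np.array([1, 0, 1])       # shape (m,), binary labels

m, n = X.shape

# Each question-answer pair (x^(i), y^(i)) is one row of X together with one entry of y.
# NumPy indexes from 0, so the math index i = 1, ..., m maps to rows 0, ..., m - 1.
pairs = [(X[i], y[i]) for i in range(m)]

# Binary classification: every label must be either 0 or 1.
assert np.isin(y, [0, 1]).all()

x_1, y_1 = pairs[0]  # the first example, (x^(1), y^(1)) in the math notation
print(m, n, x_1, y_1)
```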

To generate mock data, we use scikit-learn's make_classification function.

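```python
import numpy as np
from sklearn.datasets import make_classification

# Seed NumPy's global RNG; make_classification draws from it because random_state is left unset.
np.random.seed(42)

# 10 examples with 4 features each, split into 2 classes (one cluster per class).
X, y = make_classification(n_samples=10, n_features=4,
                           n_classes=2, n_clusters_per_class=1)

# Reshape the flat label vector of shape (m,) into a column of shape (m, 1).
y_true = y.reshape(-1, 1)
```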
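Before building anything on top of this data, it can help to sanity-check it. The sketch below simply regenerates the mock data with the same seed and arguments and asserts the shapes and label values described above; the checks themselves are an addition, not part of the original pipeline.

```python
import numpy as np
from sklearn.datasets import make_classification

# Regenerate the mock data exactly as above (same seed and arguments).
np.random.seed(42)
X, y = make_classification(n_samples=10, n_features=4,
                           n_classes=2, n_clusters_per_class=1)
y_true = y.reshape(-1, 1)

m, n = X.shape

# X holds m = 10 examples with n = 4 features each.
assert (m, n) == (10, 4)

# make_classification returns a flat label vector; y_true is its (m, 1) column version.
assert y.shape == (m,)
assert y_true.shape == (m, 1)

# The labels are binary: only 0 and 1 occur.
assert np.isin(y_true, [0, 1]).all()

# Inspect how many examples fall into each class.
print(np.unique(y_true, return_counts=True))
```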

Note that we do not split the data into training and test sets, because our only goal is to construct the network. Therefore, if the model overfits, that would be a perfect sign: it would show that the network is capable of learning the data.

At this stage, we adopt the convention that axis=0 refers to the examples $i$, while axis=1 is reserved for the features $j \in \{1, 2, \dots, n\}$. Naturally, for binary classification, $\dim \mathbf{y} = (m, 1)$.
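The axis convention can be illustrated with a small, hypothetical array (not the generated data). In this sketch, reducing over axis=0 collapses the examples and yields one value per feature $j$, while reducing over axis=1 collapses the features and yields one value per example $i$. It also shows why keeping labels as an (m, 1) column matters: mixing (m, 1) and (m,) shapes silently broadcasts to an (m, m) matrix.

```python
import numpy as np

# Hypothetical data: m = 4 examples (rows, axis=0) and n = 3 features (columns, axis=1).
X = np.arange(12, dtype=float).reshape(4, 3)
y = np.array([0, 1, 1, 0])

m, n = X.shape

# Reducing over axis=0 collapses the examples: one value per feature j.
feature_means = X.mean(axis=0)          # shape (n,)

# Reducing over axis=1 collapses the features: one value per example i.
example_sums = X.sum(axis=1)            # shape (m,)

# Labels kept as an (m, 1) column, following the convention dim y = (m, 1).
y_col = y.reshape(-1, 1)                # shape (m, 1)

# Pitfall: mixing (m, 1) and (m,) broadcasts to an (m, m) matrix instead of m differences.
accidental = y_col - y                  # shape (m, m), usually a bug
consistent = y_col - y.reshape(-1, 1)   # shape (m, 1), element-wise as intended

print(feature_means.shape, example_sums.shape, accidental.shape, consistent.shape)
```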
