Most facial recognition algorithms you find on the internet and in research papers are vulnerable to photo attacks. These methods work really well at detecting and recognizing faces in images, videos and webcam streams. However, they can’t distinguish between a real-life face and a face on a photo. This inability to tell the two apart comes from the fact that these algorithms work on 2D frames.

Now let’s imagine we want to implement a facial recognition door opener. The system would do well at distinguishing known faces from unknown ones, so that only authorized persons get access. Nonetheless, an ill-intentioned person could easily get in simply by showing an authorized person’s photo. This is where 3D detectors, similar to Apple’s FaceID, enter the game. But what if we don’t have 3D detectors?

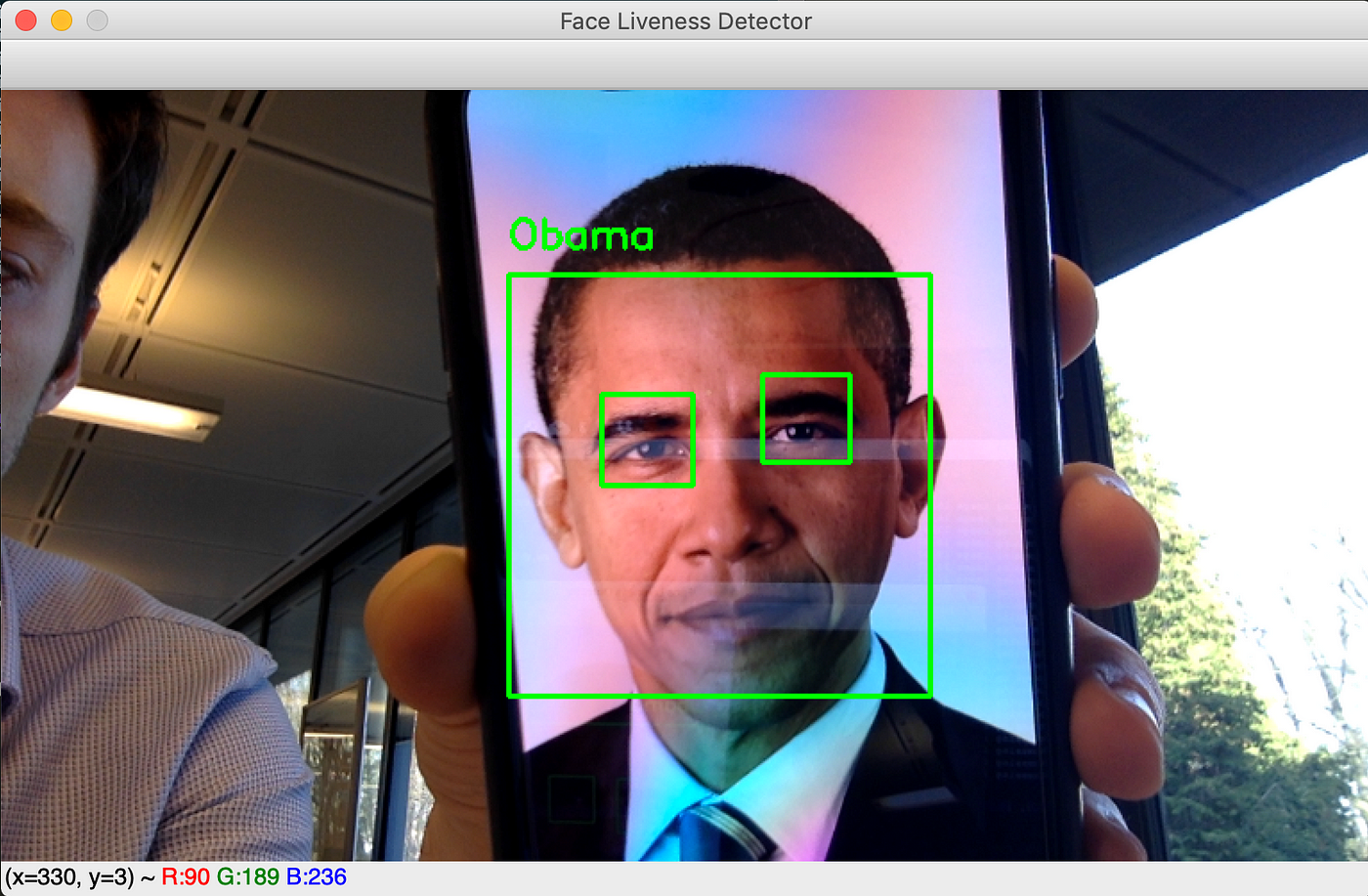

Example of a photo attack using a picture of Obama’s face ❌

The objective of this article is to implement a face liveness detection algorithm based on eye-blink detection to thwart photo attacks. The algorithm works in real time through a webcam and displays a person’s name only if they have blinked. In layman’s terms, the program runs as follows:

- Detect faces in each frame generated by the webcam.

- For each detected face, detect eyes.

- For each detected eye, determine whether it is open or closed.

- If at some point the eyes were detected as open, then closed, then open again, we conclude that the person has blinked and the program displays their name (in the case of a facial recognition door opener, we would authorize the person to enter).

For the detection and recognition of faces, you need to install the face_recognition library, which provides very useful deep learning methods to find and identify faces in an image. In particular, face_locations, face_encodings and compare_faces are the three most useful functions. The face_locations function can detect faces using two methods: Histogram of Oriented Gradients (HOG) and Convolutional Neural Network (CNN). Due to time constraints, the HOG method was chosen. The face_encodings function uses a pre-trained Convolutional Neural Network to encode a face into a vector of 128 features. This embedding vector should carry enough information to distinguish between two different persons. Finally, compare_faces computes the distance between two embedding vectors and reports a match when that distance is within a tolerance. It allows the algorithm to take a face extracted from a webcam frame and compare its embedding vector with all the encoded faces in our dataset. The closest vectors should correspond to the same person.
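
To make the matching step concrete, here is a minimal pure-Python sketch of the idea behind compare_faces: compute the Euclidean distance between a candidate embedding and each known embedding, and call it a match when the distance is within a tolerance. The helper names embedding_distance and is_match are made up for this illustration, the 3-d vectors are toy values standing in for real 128-d encodings, and 0.6 is the library's default tolerance rather than a value tuned for this project.

```python
import math

# Hypothetical helpers for illustration; they are not part of face_recognition.
def embedding_distance(a, b):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.dist(a, b)

def is_match(known, candidate, tolerance=0.6):
    """Return one boolean per known embedding: True when it is close enough."""
    return [embedding_distance(k, candidate) <= tolerance for k in known]

# Toy 3-d vectors standing in for real 128-d face encodings.
known = [[0.1, 0.2, 0.3], [0.9, 0.8, 0.7]]
candidate = [0.15, 0.2, 0.3]

# One entry per known embedding, in the same order as `known`.
matches = is_match(known, candidate)
```

A lower tolerance makes the comparison stricter, at the cost of rejecting more genuine matches.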

1. Known face dataset encoding

In my case, the algorithm is able to recognize me and Barack Obama. I selected around 10 pictures of each. Below is the code to process and encode our database of known faces.

    import os

    import cv2
    import face_recognition
    from tqdm import tqdm


    def process_and_encode(images):
        known_encodings = []
        known_names = []
        print("[LOG] Encoding dataset ...")

        for image_path in tqdm(images):
            # Load the image (OpenCV reads it in BGR order)
            image = cv2.imread(image_path)
            # Convert it from BGR to RGB, the order face_recognition expects
            image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

            # Detect faces in the image and get their locations (box coordinates)
            boxes = face_recognition.face_locations(image, model='hog')

            # Encode each detected face into a 128-d embedding vector
            encoding = face_recognition.face_encodings(image, boxes)

            # The person's name is the name of the folder the image comes from
            name = image_path.split(os.path.sep)[-2]

            # Keep only the first face found; skip images where none was detected
            if len(encoding) > 0:
                known_encodings.append(encoding[0])
                known_names.append(name)

        return {"encodings": known_encodings, "names": known_names}
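
The function above derives each label from the parent folder of the image, so it assumes a layout such as dataset/(person name)/(image file). The sketch below isolates that step. The helper name name_from_path, the dataset folder and the file names are hypothetical examples, not paths from the original project.

```python
import os

def name_from_path(image_path):
    """Return the parent folder name, which serves as the person's label."""
    return image_path.split(os.path.sep)[-2]

# Hypothetical paths, built with os.path.join so the separator matches the OS.
paths = [
    os.path.join("dataset", "obama", "001.jpg"),
    os.path.join("dataset", "obama", "002.jpg"),
    os.path.join("dataset", "me", "001.jpg"),
]

# One label per image, in the same order as `paths`.
labels = [name_from_path(p) for p in paths]
```

In practice the list of paths could be collected with something like glob.glob(os.path.join("dataset", "*", "*.jpg")), again assuming that folder layout.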

Now that we know the encodings for each person we want to recognize, we can try to identify and recognize faces through a webcam. However, before moving to this part, we need to distinguish between a face photo and a living person’s face.

2. Face liveness detection

As a reminder, the goal is to detect an open-closed-open eye pattern at some point. I trained a Convolutional Neural Network to classify whether an eye is closed or open. The chosen model is a LeNet-5-style network trained on the Closed Eyes In The Wild (CEW) dataset, which is composed of around 4800 eye images of size 24x24.

    from keras.models import Sequential
    from keras.layers import Conv2D
    from keras.layers import AveragePooling2D
    from keras.layers import Flatten
    from keras.layers import Dense
    from keras.preprocessing.image import ImageDataGenerator

    IMG_SIZE = 24


    def train(train_generator, val_generator):
        # Number of full batches per epoch for training and validation
        STEP_SIZE_TRAIN = train_generator.n // train_generator.batch_size
        STEP_SIZE_VALID = val_generator.n // val_generator.batch_size

        # Two conv/pool stages followed by three dense layers,
        # taking 24x24 single-channel (grayscale) eye images as input
        model = Sequential()
        model.add(Conv2D(filters=6, kernel_size=(3, 3), activation='relu', input_shape=(IMG_SIZE, IMG_SIZE, 1)))
        model.add(AveragePooling2D())
        model.add(Conv2D(filters=16, kernel_size=(3, 3), activation='relu'))
        model.add(AveragePooling2D())
        model.add(Flatten())
        model.add(Dense(units=120, activation='relu'))
        model.add(Dense(units=84, activation='relu'))
        # Single sigmoid unit for the binary open/closed decision
        model.add(Dense(units=1, activation='sigmoid'))

        model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

        print('[LOG] Training CNN')
        # Note: fit_generator is deprecated since TensorFlow 2.1,
        # where model.fit accepts generators directly
        model.fit_generator(generator=train_generator,
                            steps_per_epoch=STEP_SIZE_TRAIN,
                            validation_data=val_generator,
                            validation_steps=STEP_SIZE_VALID,
                            epochs=20
                            )

        return model

When evaluating the model, I reached 94% accuracy.
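
To get a feel for how small this network is, the arithmetic below traces the feature-map size through the layers defined above and counts the trainable parameters. It assumes the Keras defaults that the code relies on implicitly ('valid' padding and stride 1 for Conv2D, a 2x2 window for AveragePooling2D); the helper names conv_out and pool_out are made up for this sketch.

```python
IMG_SIZE = 24

def conv_out(size, kernel):
    """Output width of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output width of non-overlapping pooling with a pool x pool window."""
    return size // pool

size = IMG_SIZE
size = conv_out(size, 3)   # first Conv2D, 6 filters
size = pool_out(size)      # first AveragePooling2D
size = conv_out(size, 3)   # second Conv2D, 16 filters
size = pool_out(size)      # second AveragePooling2D

# Number of values handed to the first Dense layer after Flatten
flat_features = size * size * 16

# Weights plus biases for each trainable layer, in order
params = [
    3 * 3 * 1 * 6 + 6,          # Conv2D: 1 input channel -> 6 filters
    3 * 3 * 6 * 16 + 16,        # Conv2D: 6 channels -> 16 filters
    flat_features * 120 + 120,  # Dense 120
    120 * 84 + 84,              # Dense 84
    84 * 1 + 1,                 # Dense 1 (sigmoid output)
]
total_params = sum(params)
```

Most of the parameters sit in the first dense layer, which is typical for this kind of architecture.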

Each time we detect an eye, we predict its status using our model, and we keep track of the eye status for each person. It then becomes really easy to detect a blink thanks to the function below, which looks for an open-closed-open pattern in the eye status history.

    def isBlinking(history, maxFrames):
        """ @history: A string containing the history of eyes status
            where a '1' means that the eyes were open and '0' closed.
            @maxFrames: The maximal number of successive frames where an eye is closed """
        # Look for open ('1'), then 1 to maxFrames closed frames ('0'), then open again
        for i in range(maxFrames):
            pattern = '1' + '0'*(i+1) + '1'
            if pattern in history:
                return True
        return False
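
A quick way to sanity-check this logic is to run it on a few hand-written histories. The sketch below repeats the same pattern search under the name is_blinking so that it runs on its own, and uses the encoding that the main loop writes into the history ('1' for open, '0' for closed). The sample histories are invented.

```python
def is_blinking(history, max_frames):
    """True if history contains open, 1..max_frames closed frames, then open."""
    for n_closed in range(1, max_frames + 1):
        if '1' + '0' * n_closed + '1' in history:
            return True
    return False

# Invented histories: '1' = eyes open, '0' = eyes closed.
samples = [
    "1111111",   # eyes always open (what a photo would typically produce)
    "1110011",   # closed for two frames, then open again
    "1100000",   # eyes closed and never reopened
    "11000011",  # closed for four frames, longer than max_frames
]
results = {history: is_blinking(history, 3) for history in samples}
```

Only the second history counts as a blink: the closure must be both preceded and followed by an open frame, and must not last longer than max_frames.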

3. Face recognition of living people

We now have almost all the elements to set up our “real”-face recognition algorithm. We just need a way to detect faces and eyes in real time. I used OpenCV’s pre-trained Haar-cascade classifiers to perform these tasks. For more information about face and eye detection with Haar cascades, I highly recommend reading this great article from OpenCV.
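
One practical detail when combining the two libraries: OpenCV's detectMultiScale returns each box as (x, y, w, h), while face_recognition works with (top, right, bottom, left) tuples. A small conversion helper makes that explicit. The name haar_to_css and the sample box are hypothetical and only serve the illustration.

```python
def haar_to_css(box):
    """Convert an OpenCV (x, y, w, h) box to (top, right, bottom, left)."""
    x, y, w, h = box
    return (y, x + w, y + h, x)

# Hypothetical face box: top-left corner at (10, 20), 30 wide, 40 tall.
face_box = (10, 20, 30, 40)
css_box = haar_to_css(face_box)
```

The resulting tuple can be passed in a list as the known face locations argument of face_encodings, which avoids running a second face detector on the same frame.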

    import cv2
    import face_recognition


    def detect_and_display(model, video_capture, face_detector, open_eyes_detector, left_eye_detector, right_eye_detector, data, eyes_detected):
        # read() is expected to return the frame itself (as imutils' VideoStream does);
        # cv2.VideoCapture.read() returns a (ret, frame) tuple instead
        frame = video_capture.read()
        # resize the frame
        frame = cv2.resize(frame, (0, 0), fx=0.6, fy=0.6)

        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

        # Detect faces
        faces = face_detector.detectMultiScale(
            gray,
            scaleFactor=1.2,
            minNeighbors=5,
            minSize=(50, 50),
            flags=cv2.CASCADE_SCALE_IMAGE
        )

        # for each detected face
        for (x,y,w,h) in faces:
            # Encode the face into a 128-d embeddings vector
            # (the box is converted to the (top, right, bottom, left) order)
            encoding = face_recognition.face_encodings(rgb, [(y, x+w, y+h, x)])[0]

            # Compare the vector with all known faces encodings
            matches = face_recognition.compare_faces(data["encodings"], encoding)

            # For now we don't know the person name
            name = "Unknown"

            # If there is at least one match:
            if True in matches:
                matchedIdxs = [i for (i, b) in enumerate(matches) if b]
                counts = {}
                for i in matchedIdxs:
                    name = data["names"][i]
                    counts[name] = counts.get(name, 0) + 1

                # The name with the largest number of matching encodings is assigned to the detected face
                name = max(counts, key=counts.get)

            face = frame[y:y+h,x:x+w]
            gray_face = gray[y:y+h,x:x+w]

            eyes = []

            # Eyes detection
            # check first if eyes are open (taking glasses into account)
            open_eyes_glasses = open_eyes_detector.detectMultiScale(
                gray_face,
                scaleFactor=1.1,
                minNeighbors=5,
                minSize=(30, 30),
                flags = cv2.CASCADE_SCALE_IMAGE
            )
            # if open_eyes_glasses detects both eyes then they are open
            if len(open_eyes_glasses) == 2:
                eyes_detected[name]+='1'
                for (ex,ey,ew,eh) in open_eyes_glasses:
                    cv2.rectangle(face,(ex,ey),(ex+ew,ey+eh),(0,255,0),2)

            # otherwise try detecting eyes using left and right_eye_detector
            # which can detect open and closed eyes
            else:
                # separate the face into left and right sides
                left_face = frame[y:y+h, x+int(w/2):x+w]
                left_face_gray = gray[y:y+h, x+int(w/2):x+w]

                right_face = frame[y:y+h, x:x+int(w/2)]
                right_face_gray = gray[y:y+h, x:x+int(w/2)]

                # Detect the left eye
                left_eye = left_eye_detector.detectMultiScale(
                    left_face_gray,
                    scaleFactor=1.1,
                    minNeighbors=5,
                    minSize=(30, 30),
                    flags = cv2.CASCADE_SCALE_IMAGE
                )

                # Detect the right eye
                right_eye = right_eye_detector.detectMultiScale(
                    right_face_gray,
                    scaleFactor=1.1,
                    minNeighbors=5,
                    minSize=(30, 30),
                    flags = cv2.CASCADE_SCALE_IMAGE
                )

                eye_status = '1' # we suppose the eyes are open

                # For each eye check whether the eye is closed.
                # If one is closed we conclude the eyes are closed
                # (predict() is a helper not shown in this article; it is compared
                # against 'closed', so it must return that label for a closed eye)
                for (ex,ey,ew,eh) in right_eye:
                    color = (0,255,0)
                    pred = predict(right_face[ey:ey+eh,ex:ex+ew],model)
                    if pred == 'closed':
                        eye_status='0'
                        color = (0,0,255)
                    cv2.rectangle(right_face,(ex,ey),(ex+ew,ey+eh),color,2)
                for (ex,ey,ew,eh) in left_eye:
                    color = (0,255,0)
                    pred = predict(left_face[ey:ey+eh,ex:ex+ew],model)
                    if pred == 'closed':
                        eye_status='0'
                        color = (0,0,255)
                    cv2.rectangle(left_face,(ex,ey),(ex+ew,ey+eh),color,2)
                eyes_detected[name] += eye_status

            # Each time, we check if the person has blinked
            # If yes, we display their name
            if isBlinking(eyes_detected[name],3):
                cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)
                # Display name
                y = y - 15 if y - 15 > 15 else y + 15
                cv2.putText(frame, name, (x, y), cv2.FONT_HERSHEY_SIMPLEX,0.75, (0, 255, 0), 2)

        return frame

The function above is the code used for detecting and recognizing real faces. It takes the following arguments:

- model: our open/closed eyes classifier

- video_capture: a video stream

- face_detector: a Haar-cascade face classifier. I used haarcascade_frontalface_alt.xml

- open_eyes_detector: a Haar-cascade open eye classifier. I used haarcascade_eye_tree_eyeglasses.xml

- left_eye_detector: a Haar-cascade left eye classifier. I used haarcascade_lefteye_2splits.xml which can detect open or closed eyes.

- right_eye_detector: a Haar-cascade right eye classifier. I used haarcascade_righteye_2splits.xml which can detect open or closed eyes.

- data: a dictionary of known encodings and known names

- eyes_detected: a dictionary containing for each name the eyes status history.
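
Because the function appends to eyes_detected[name] without first checking that the key exists, the dictionary needs to supply an empty history for names it has not seen yet. One way to get that behaviour, shown here as an assumption rather than as the original setup, is a collections.defaultdict(str). The simulated frames are invented.

```python
from collections import defaultdict

# Missing names start with an empty history string instead of raising KeyError.
eyes_detected = defaultdict(str)

# Simulated per-frame results as (name, status), with '1' = open and '0' = closed.
frames = [("Obama", "1"), ("Unknown", "1"), ("Obama", "0"), ("Obama", "1")]
for name, status in frames:
    eyes_detected[name] += status

obama_history = eyes_detected["Obama"]
```

With a plain dict, the first append for a new name would fail, so the alternative is to initialize every known name (plus "Unknown") to an empty string up front.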

We start by grabbing a frame from the webcam stream and resizing it to speed up computations. We then detect the faces in the frame and encode each of them into a 128-d vector. Next, we compare this vector with the known face encodings and determine the person’s name by counting the number of matches: the name with the most matches is selected.

After that, we try to detect eyes inside the face box. First, we try to detect open eyes with the open_eyes_detector. If it succeeds, a ‘1’ is added to the eye status history, meaning the eyes are open, since the open_eyes_detector cannot detect closed eyes. Otherwise, if this first classifier fails (maybe because the eyes are closed, or simply because it did not recognize them), the left_eye and right_eye detectors are used. The face is split into its left and right halves, and each half is handed to the corresponding detector. Each detected eye region is then extracted and the trained model predicts whether the eye is closed. If at least one closed eye is detected, both eyes are considered closed and a ‘0’ is added to the eye status history. Otherwise, we conclude that the eyes are open.

Finally, the isBlinking() function is used to detect a blink, and if the person has blinked, their name is displayed. The whole code can be found on my github account.
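
The name-selection step used in detect_and_display is a simple majority vote over the known encodings that matched. The same logic can be sketched with collections.Counter. The function name vote_for_name and the sample data are hypothetical.

```python
from collections import Counter

def vote_for_name(matches, names, default="Unknown"):
    """Return the most frequent name among matched encodings, or the default."""
    matched = [name for name, ok in zip(names, matches) if ok]
    if not matched:
        return default
    return Counter(matched).most_common(1)[0][0]

# Hypothetical dataset labels and the booleans a comparison might return.
names = ["obama", "obama", "obama", "me", "me"]
matches = [True, True, False, True, False]

winner = vote_for_name(matches, names)
no_match = vote_for_name([False] * 5, names)
```

Voting over several reference pictures per person makes the result less sensitive to a single encoding that happens to match the wrong face.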
