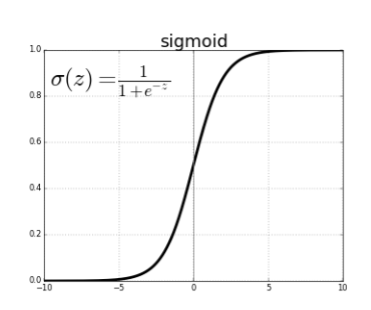

An explanation of three common activation functions in deep learning, organized by what each one is used for.

Why Are Activation Functions So Important?

You can think of an activation function as the thing that decides whether a neuron "fires", a bit like the neurons in our brain when we think. Maybe that illustration just makes you more confused :P

Anyway… Without an activation function, stacking layers adds nothing. Why? Because the calculation in each layer is linear, and a chain of linear calculations is itself just one linear calculation. No matter how many layers you add, the output is still a linear function of the input. The activation function is what makes the network non-linear.
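To make that concrete, here is a minimal sketch in plain Python. The weight matrices `W1` and `W2`, the input `x`, and the helper names are made-up values for illustration only (biases are left out to keep it short). It shows that two linear layers give the same result as one combined layer, and that putting ReLU between them breaks that equivalence.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B (both are lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def relu(v):
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, vi) for vi in v]


# Hypothetical weights and input, chosen only for this example.
W1 = [[1.0, -2.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0], [0.0, 1.0]]
x = [1.0, 2.0]

# Two layers with no activation in between.
two_linear = matvec(W2, matvec(W1, x))

# One layer whose weights are the product W2 * W1.
collapsed = matvec(matmul(W2, W1), x)

# Two layers with ReLU applied after the first one.
with_relu = matvec(W2, relu(matvec(W1, x)))

print(two_linear)  # [-2.0, 1.0]
print(collapsed)   # [-2.0, 1.0]
print(with_relu)   # [1.0, 1.0]
```

The first two results match, so the second layer added no expressive power. With ReLU in between, the output can no longer be reproduced by the single combined matrix.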

#deep-learning #softmax #relu #machine-learning #activation-functions
