AWS Lambda vs. Azure Functions vs. Google Cloud Functions

Compare AWS Lambda, Azure Functions, and Google Cloud Functions to find the best serverless computing platform for your needs. Learn about pricing, features, and supported languages to make an informed decision.

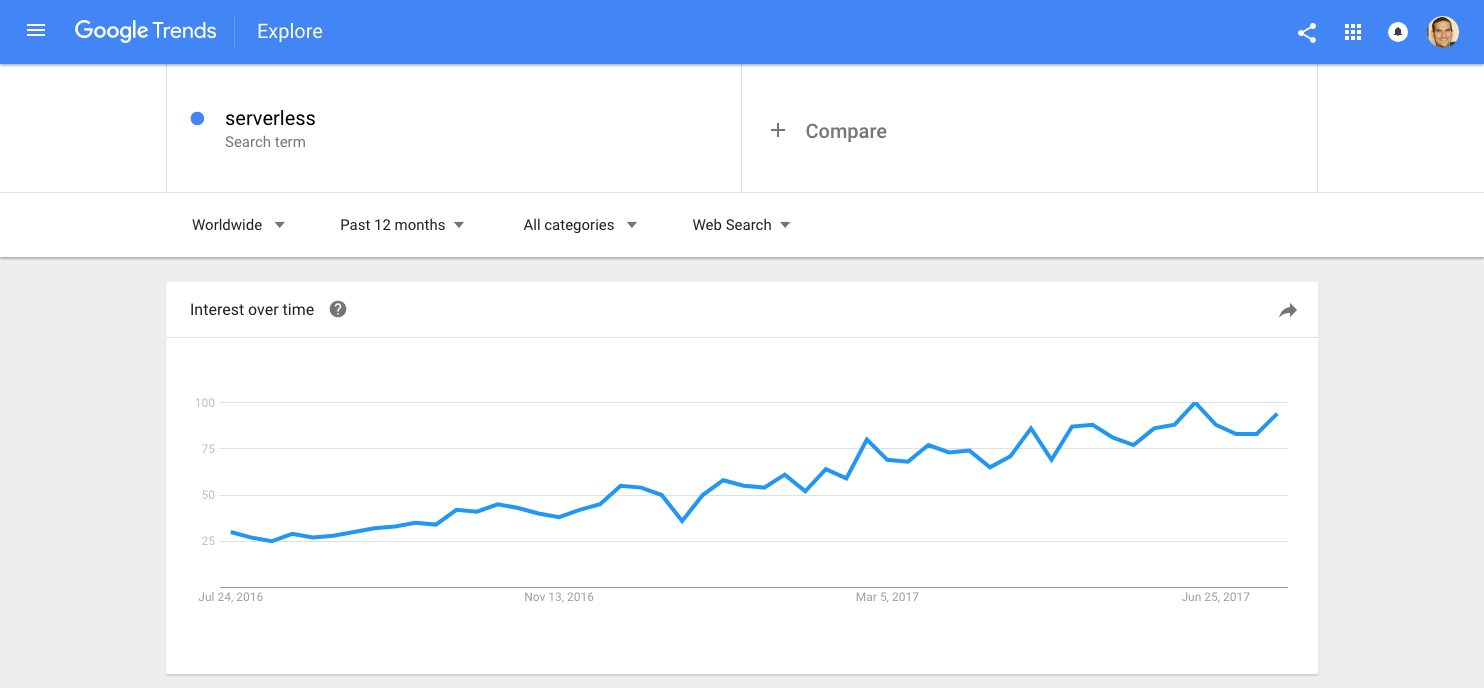

There is little doubt that serverless is a growing trend and that its general usage is pointing upwards.

So, What Is Serverless?

Serverless allows developers to build and run applications and services without thinking about the servers that actually run the code. Serverless services, or FaaS (Functions-as-a-Service) providers, implement this concept by letting developers upload their code while the provider takes care of deploying, running, and scaling it. As a result, serverless lets DevOps teams focus on improving code, processes, and upgrade procedures instead of provisioning, scaling, and maintaining servers.

Amazon was the first cloud provider to offer a full serverless platform with Lambda, and was followed by Microsoft and Google, which introduced Azure Functions and Cloud Functions, respectively.

Today, these are the three main providers of serverless platforms, with large IT organizations such as Netflix, Dropbox, and others building backend services on them. This article reviews these three big players so you can decide more clearly which one to use.

AWS Lambda

AWS is widely regarded as the pioneer that created the serverless concept, and it has fully integrated serverless into its cloud portfolio.

Lambda has native support for code written in JavaScript (Node.js), Python, Java (Java 8 compatible), and C#, and allows a wrapper to be created for Go, PHP, or Ruby projects so that their code can also run when a function is triggered.

Lambda sits at the heart of AWS’s offering, acting in some ways as a gateway to almost every other cloud service AWS provides. Integration with S3 and Kinesis enables log analysis, on-the-fly filtering, image processing, and backups, all triggered by activity in those services. The DynamoDB integration adds another layer of triggers, fired by operations performed on DynamoDB tables. Lambda can also act as the full backend of a web, mobile, or IoT application: Amazon API Gateway receives requests from the client and converts them into API calls that trigger specific, predefined functions.
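To make the S3 trigger concrete, here is a minimal sketch of a Python Lambda handler that reacts to an S3 event notification. It assumes the standard `lambda_handler(event, context)` entry point and reads only the bucket name and object key from each record; the bucket name `example-uploads` and the processing step are hypothetical placeholders.

```python
import json
import urllib.parse


def lambda_handler(event, context):
    """Process S3 event notification records delivered to Lambda."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (a space becomes "+"), so decode them.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # Hypothetical placeholder: real code would fetch the object here
        # (for example with boto3) and filter, resize, or back it up.
        processed.append({"bucket": bucket, "key": key})
    return {"processed": processed}


if __name__ == "__main__":
    # Trimmed-down sample event with only the fields the handler reads.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "example-uploads"},
                    "object": {"key": "photos/my+cat.jpg"}}}
        ]
    }
    print(json.dumps(lambda_handler(sample_event, None)))
```

Real S3 events carry many more fields (event name, object size, timestamps); the handler ignores them, which keeps it tolerant of the full payload.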

A fundamental problem for applications built on a serverless architecture is maintaining state. Because one of the main reasons to use serverless is cost reduction (you pay only for the time functions actually execute), the platform’s natural behavior is to shut functions down as soon as they complete their task. This makes things difficult for developers, as one function cannot use another function’s state without a third-party tool to collect and manage it. AWS Step Functions, a relatively new service from Amazon, addresses exactly this challenge: it coordinates functions as steps in a workflow, passes each step’s output to the next, and records the state of every step so it can be used by other functions or for root-cause analysis.
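Step Functions workflows are defined in the Amazon States Language, a JSON format. The sketch below builds a minimal two-step definition in Python to show how state flows from one function to the next; the state names, function names, and ARNs are hypothetical placeholders, and a real workflow would usually add error handling such as `Retry` or `Catch` fields.

```python
import json

# Hypothetical Lambda ARNs; replace them with the ARNs of your own functions.
EXTRACT_ARN = "arn:aws:lambda:us-east-1:123456789012:function:extract-order"
CHARGE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:charge-order"


def build_state_machine() -> dict:
    """Return an Amazon States Language definition chaining two functions.

    By default, the JSON returned by ExtractOrder becomes the input of
    ChargeOrder, so the second function can use state produced by the
    first without an external store.
    """
    return {
        "Comment": "Two Lambda functions sharing state through Step Functions",
        "StartAt": "ExtractOrder",
        "States": {
            "ExtractOrder": {"Type": "Task", "Resource": EXTRACT_ARN, "Next": "ChargeOrder"},
            "ChargeOrder": {"Type": "Task", "Resource": CHARGE_ARN, "End": True},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_state_machine(), indent=2))
```

The printed JSON could then be supplied as the state machine definition, for example in the console or through boto3's `create_state_machine`, together with an IAM role allowed to invoke both functions.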

Lambda functions can be invoked from a long list of sources. Amazon services such as CodeCommit and CloudWatch Logs can trigger events for new or tracked deployment processes, AWS Config can trigger evaluations of whether AWS resource configurations comply with predefined rules, and even Amazon’s Alexa can trigger Lambda functions that analyze voice-activated commands. Amazon also lets users define workflows for more complicated triggers, including decision trees for different business use cases that originate from the same function.
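As an example of one of these sources, a CloudWatch Logs subscription filter can stream log events to a Lambda function. The sketch below assumes the documented delivery format (a base64-encoded, gzip-compressed JSON document under `event["awslogs"]["data"]`) and applies a purely illustrative rule that counts lines containing `ERROR`.

```python
import base64
import gzip
import json


def lambda_handler(event, context):
    """Decode log events delivered by a CloudWatch Logs subscription filter."""
    # The payload is base64 text wrapping gzip-compressed JSON.
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))
    messages = [entry["message"] for entry in payload.get("logEvents", [])]
    # Illustrative rule only: count the lines that mention "ERROR".
    errors = [m for m in messages if "ERROR" in m]
    return {"logGroup": payload.get("logGroup"), "total": len(messages), "errors": len(errors)}
```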

An interesting trend around Lambda is the development of Lambda frameworks. Several companies and individual contributors have developed and open-sourced code that helps with building and deploying event-driven functions. These frameworks give developers a template into which they insert their code, and provide built-in integration with other Amazon services.

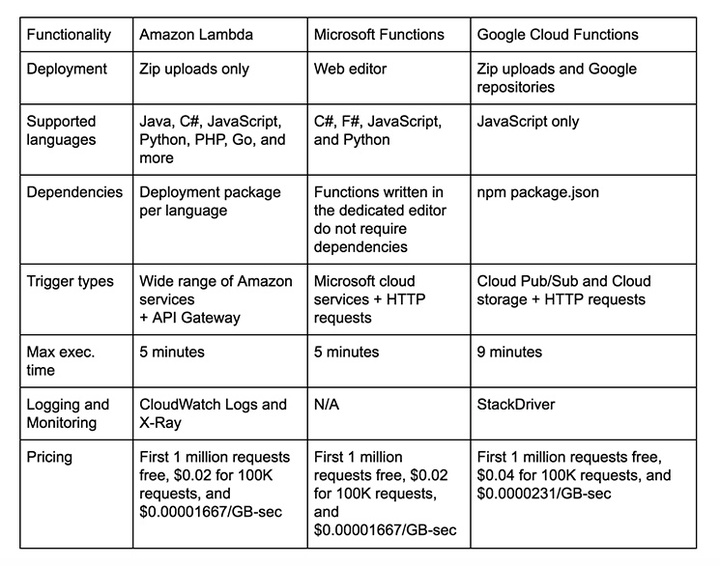

Cost is a central motivation for serverless: Amazon created it to save money, and IT organizations choose it to save money. Because always-on servers are no longer required, resource usage decreases significantly, and cost is calculated according to execution time. Amazon charges for execution in 100 ms units. The first one million requests each month are free, and every additional 100,000 requests cost $0.04, divided between request and compute charges.
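The pricing model above can be turned into a rough estimator. This is a minimal sketch, assuming AWS list prices of $0.20 per million requests and $0.00001667 per GB-second, plus a monthly free tier of one million requests and 400,000 GB-seconds; these constants are assumptions to check against current pricing. The 100 ms billing unit is a parameter because AWS has since moved to finer-grained (1 ms) billing.

```python
import math

# Assumed AWS list prices in USD; verify against the current pricing page.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.00001667
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000


def monthly_lambda_cost(requests: int, avg_duration_ms: float, memory_mb: int,
                        billing_unit_ms: int = 100) -> float:
    """Estimate a monthly AWS Lambda bill in USD (requests plus compute only)."""
    # Duration is rounded up to whole billing units, so 120 ms becomes 200 ms.
    # Rounding the average rather than each call is a simplification.
    billed_ms = math.ceil(avg_duration_ms / billing_unit_ms) * billing_unit_ms
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(requests - FREE_REQUESTS, 0)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = max(gb_seconds - FREE_GB_SECONDS, 0) * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)


if __name__ == "__main__":
    print(monthly_lambda_cost(3_000_000, 120, 128))
```

With 3 million requests a month at 120 ms on 128 MB, each call is billed as 200 ms, the resulting 75,000 GB-seconds fall inside the free tier, and only the 2 million billable requests cost anything (about $0.40). The estimate ignores data transfer and regional price differences.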

These figures might seem meager, but if execution times exceed expectations, request numbers spike, and triggers go unmonitored, costs can grow by hundreds of percent and erode the value of serverless.

Logs and metrics are essential, and tools that process and analyze them to produce useful information must be part of the ecosystem. The ELK Stack is the most common approach to log analysis and is now being used to analyze Lambda functions as well. Other monitoring tools, such as Datadog and New Relic, are also used to display usage metrics and give a real-time view of traffic and function utilization. Some tools can even generate alerts when latency, load, or network throughput thresholds are exceeded.
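Log analysis tools such as the ELK Stack work best when each log line is machine-parseable. One common approach, sketched below for a Python function, is to print one JSON object per line (Lambda forwards stdout to CloudWatch Logs, from where it can be shipped onward) and to include metrics such as duration so dashboards and latency alerts can use them. The helper `log_json`, the logged fields, and the summing workload are hypothetical.

```python
import json
import time


def log_json(level: str, message: str, **fields) -> str:
    """Print one JSON object per line so log pipelines can parse each field."""
    record = {"timestamp": time.time(), "level": level, "message": message, **fields}
    line = json.dumps(record, sort_keys=True)
    print(line)  # In Lambda, anything written to stdout ends up in CloudWatch Logs.
    return line


def lambda_handler(event, context):
    start = time.perf_counter()
    items = event.get("items", [])
    # Hypothetical workload standing in for the function's real processing.
    total = sum(items)
    duration_ms = (time.perf_counter() - start) * 1000
    log_json("INFO", "request handled", items=len(items), duration_ms=round(duration_ms, 3))
    return {"total": total}
```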

Azure Functions

Azure Functions, Microsoft’s counterpart to Lambda, was introduced only at the end of March 2016. Microsoft is working hard to close the functional and conceptual gap with Amazon, but because it lacks its competitor’s broad cloud portfolio, Functions has a narrower overall scope.

However, it does offer powerful integrations and practical functionality. Functions can be written in Microsoft’s own languages, C# and F#, directly in the web-based functions editor, or written and uploaded using common scripting options such as Bash, Batch, and PowerShell. Developers can also write functions in JavaScript or Python.

Azure has out-of-the-box integrations with Visual Studio Team Services, GitHub, and Bitbucket, making it easy to set up continuous integration and deploy code to the cloud.

Azure Functions supports several types of event triggers. Cron expressions enable timer-based events for scheduled tasks, while events in Microsoft’s SaaS services, such as OneDrive or SharePoint, can be configured to trigger operations in Functions. Common triggers for real-time processing of data or files are also available, and Functions can even run a serverless bot that uses Cortana as its information provider.
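Azure’s timer trigger is configured with an NCRONTAB expression of six fields, `{second} {minute} {hour} {day} {month} {day-of-week}`, so `0 */5 * * * *` fires every five minutes. Azure evaluates these schedules itself; the hypothetical matcher below only illustrates how such an expression is checked against a timestamp. It deliberately supports a small subset of the syntax (`*`, `*/n`, single numbers, and comma lists, but not ranges or names) and, for simplicity, requires every field to match.

```python
from datetime import datetime

# Lowest legal value of each field: seconds, minutes, hours, and weekdays
# start at 0; days and months start at 1.
FIELD_MINIMUMS = (0, 0, 0, 1, 1, 0)


def _field_matches(spec: str, value: int, minimum: int) -> bool:
    """Match one field against a value; supports '*', '*/n', numbers, and lists."""
    for part in spec.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # A step counts from the field's lowest value, e.g. days 1, 3, 5...
            if (value - minimum) % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def schedule_matches(expression: str, moment: datetime) -> bool:
    """Check a six-field {second} {minute} {hour} {day} {month} {day-of-week} expression."""
    fields = expression.split()
    if len(fields) != 6:
        raise ValueError("expected six space-separated fields")
    # Cron counts weekdays from Sunday = 0; Python's weekday() has Monday = 0.
    day_of_week = (moment.weekday() + 1) % 7
    values = (moment.second, moment.minute, moment.hour, moment.day, moment.month, day_of_week)
    return all(_field_matches(spec, value, low)
               for spec, value, low in zip(fields, values, FIELD_MINIMUMS))
```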

But Microsoft hasn’t stopped there and is now attempting to address the needs of less technical users, involving them in the process by making serverless simpler and more approachable for non-coders. Logic Apps, which according to Microsoft is the “workflow orchestration engine in the cloud,” is designed to allow business-oriented personnel to define and manage data processing tasks and to set workflow paths.

Microsoft has taken the same pricing approach as Amazon, calculating the total cost from both the number of executions and execution time. The prices are also the same: the first one million requests are free, and beyond that limit every 100,000 executions cost $0.02, plus another $0.02 per 100,000 GB-seconds of compute.
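Compute on Azure’s consumption plan is measured in GB-seconds: memory size in GB multiplied by execution time in seconds. The sketch below assumes the rounding rules Azure has documented for this plan (memory rounded up to the nearest 128 MB, execution time rounded up to the nearest millisecond, and a minimum of 128 MB and 100 ms per execution); treat them as assumptions to verify against current documentation.

```python
import math


def azure_gb_seconds(duration_ms: float, memory_mb: float) -> float:
    """GB-seconds for one execution under the assumed consumption-plan rounding."""
    billed_mb = max(128, math.ceil(memory_mb / 128) * 128)  # round up to 128 MB steps
    billed_ms = max(100, math.ceil(duration_ms))             # whole ms, 100 ms minimum
    return (billed_mb / 1024) * (billed_ms / 1000)


def monthly_gb_seconds(executions: int, duration_ms: float, memory_mb: float) -> float:
    """Total GB-seconds for a month of identical executions."""
    return executions * azure_gb_seconds(duration_ms, memory_mb)


if __name__ == "__main__":
    # One million executions of 250 ms, each using about 200 MB of memory.
    print(monthly_gb_seconds(1_000_000, 250, 200))
```

Under these assumptions, 200 MB is billed as 256 MB (0.25 GB), so a month of one million 250 ms executions consumes about 62,500 GB-seconds.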

Microsoft understands both the importance and the complexity of monitoring resources through logs and metrics in a serverless environment, as well as the fact that this is missing from its offering. For this reason, it is currently in the process of purchasing Cloudyn, a producer of cloud management and, more importantly, cost optimization software. Once confirmed, this purchase will help Microsoft’s users understand how their business uses resources and make informed decisions about utilization and cloud efficiency.

Google Cloud Functions

Google was the last to join the serverless scene. Its current support is very limited: functions can be written only in JavaScript (Node.js), and events are triggered mainly through Google’s internal event bus, Cloud Pub/Sub topics. HTTP triggers are supported as well, as are mobile events from Firebase.

Google is still missing some important integrations with storage and other cloud services that enable business-related triggers, but that is not the main problem: Google restricts each project to fewer than 20 triggers.

Monitoring is enabled via the Stackdriver logging tool, which is very handy and easy to use, but does not supply all the information and metrics serverless users require.

On top of the missing functionality and limited capabilities, Google charges the highest price for function triggering. After the first one million free requests, its prices are double those of the other providers: $0.04 per 100,000 invocations, plus $0.04 per 100,000 milliseconds of execution time.

It seems that Google is still designing and learning how its serverless platform should work; once this process is finalized, it will likely have to bring its features and pricing in line with the other providers.

Conclusion

On top of its cost-saving and maintenance-reduction benefits, serverless also encourages efficient code and fast execution, thanks to its pay-per-use model. Organizations aiming to reduce their monthly bill for serverless services must reduce their functions’ runtime. Developers who can shorten function runtime and write the smallest independent pieces of code will get more out of serverless and significantly reduce costs for their organization.

The Serverless Cost Calculator estimates costs from the predicted number of executions and the average execution time, and can help developers who want to introduce serverless in their organization by clearly showing the potential savings.

The table below contains a summary of the key attributes of each provider:
