How to Consume Kafka Efficiently in Golang

If you regularly process data from message queue systems such as Kafka, you have probably wondered how to consume it efficiently. Specifically, how could you process 1 billion or more messages within an hour? If so, this story is for you.

In this story, I propose four consuming patterns built on the well-known Go package Sarama, and run several experiments to show how each pattern affects the final throughput. Let’s go.

Setup

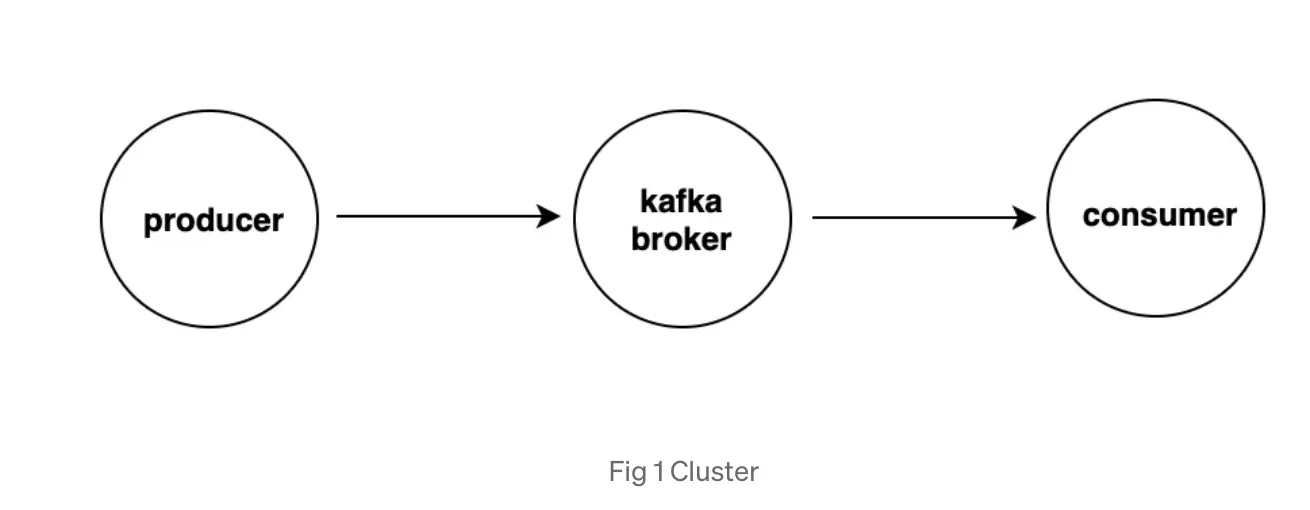

To do this test, I established a simple cluster with three nodes, one for Kafka broker, one for the producer server and the final as the consumer server, as shown in Fig 1:

To keep the comparison fair, all three nodes have the same configuration (4 CPU cores, 8 GB of memory and a 500 GB SSD). We define a struct _Message_ and have the producer and consumer simply JSON-marshal and unmarshal it.

```go
type Message struct {
	Id int `json:"id"`
}
```

The producer code is fairly simple:

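```go
import (
	"encoding/json"
	"fmt"
	"time"

	"github.com/IBM/sarama" // formerly github.com/Shopify/sarama
)

// Producer wraps a sarama.AsyncProducer (inferred from the Input()/Errors() calls).
type Producer struct {
	p sarama.AsyncProducer
}

// StartProduce sends JSON-encoded messages to topic until done is closed,
// printing the average producing speed every 5000 messages.
func (p *Producer) StartProduce(done chan struct{}, topic string) {
	start := time.Now()
	for i := 0; ; i++ {
		msg := Message{Id: i}
		msgBytes, err := json.Marshal(msg)
		if err != nil {
			continue
		}
		select {
		case <-done:
			return
		case p.p.Input() <- &sarama.ProducerMessage{
			Topic: topic,
			Value: sarama.ByteEncoder(msgBytes),
		}:
			if i%5000 == 0 {
				fmt.Printf("produced %d messages with speed %.2f/s\n", i, float64(i)/time.Since(start).Seconds())
			}
		case err := <-p.p.Errors():
			// The failed message is err.Msg, not msgBytes; ProducerError's Error()
			// already includes the topic and cause. Note that when this case wins
			// the select, the current msgBytes is never sent.
			fmt.Printf("Failed to send message to kafka: %s\n", err)
		}
	}
}
```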

With this configuration, we achieved a producing speed of 570k/s:

```
produced 63445000 messages with speed 570853.28/s
```

Can our consuming patterns keep up with such a high load? Let’s see.

Sync mode

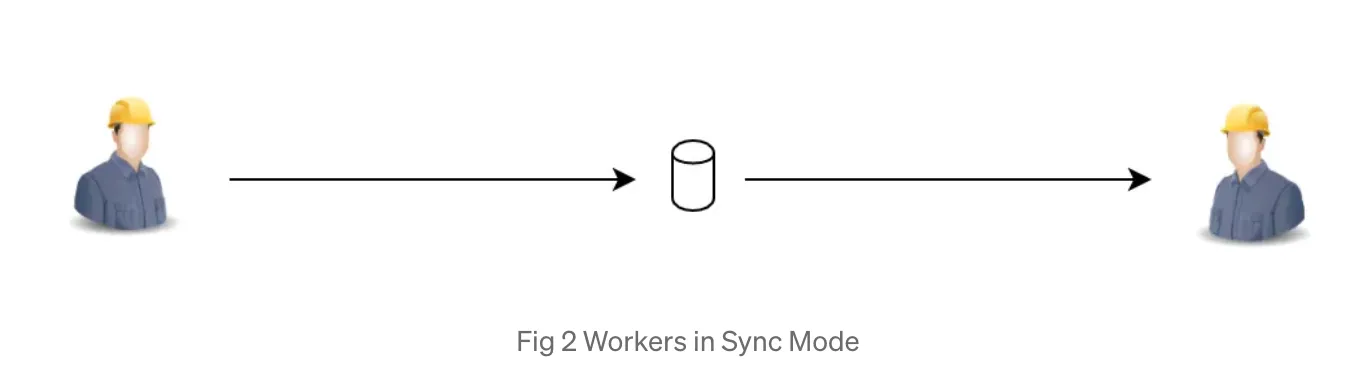

The first consuming pattern is the synchronous one by one mode, i.e. process data as soon as we receive it, so I named it ‘Sync mode’, as shown in Fig 2:

The code is simple:

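```go
// ConsumeClaim processes each message on the claim's goroutine as soon as it
// arrives, and marks it only if the callback succeeds.
func (h *syncConsumerGroupHandler) ConsumeClaim(session sarama.ConsumerGroupSession, claim sarama.ConsumerGroupClaim) error {
	claimMsgChan := claim.Messages()
	for message := range claimMsgChan {
		if h.cb(message.Value) == nil {
			// Offsets are committed per partition, so marking a later message
			// also moves the committed offset past any earlier failed one.
			session.MarkMessage(message, "")
		}
	}
	return nil
}
```

With this pattern we achieved a consuming speed of 483k messages per second: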

```
sync consumer consumed 12000000 messages at speed 483180.52/s
```

That is a bit slower than producing. Can we make it faster? Let’s continue.
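To try the sync pattern without a running cluster, here is a minimal stdlib-only sketch. It assumes a plain Go channel in place of `claim.Messages()` and a simple counter in place of `session.MarkMessage`; `decodeMessage` and `consumeSync` are hypothetical, and the callback only unmarshals the `Message` JSON, since the article does not show the body of `h.cb`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Message mirrors the struct used by the producer.
type Message struct {
	Id int `json:"id"`
}

// decodeMessage is a hypothetical callback: it only unmarshals the payload.
func decodeMessage(b []byte) error {
	var m Message
	return json.Unmarshal(b, &m)
}

// consumeSync handles each payload on the receiving goroutine, one by one,
// and returns how many succeeded (the ones the Sarama handler would mark).
func consumeSync(msgs <-chan []byte, cb func([]byte) error) int {
	marked := 0
	for value := range msgs {
		if cb(value) == nil {
			marked++
		}
	}
	return marked
}

func main() {
	msgs := make(chan []byte, 3)
	msgs <- []byte(`{"id":1}`)
	msgs <- []byte(`{"id":2}`)
	msgs <- []byte(`not json`) // fails to decode, so it is not marked
	close(msgs)

	fmt.Println("marked:", consumeSync(msgs, decodeMessage))
}
```

Running it prints `marked: 2`, because the third payload fails to decode.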

Batch mode

In this mode, we accumulate several messages and pass them to the callback function as a single batch. Within the handler, a slice holds the buffered messages, and a ticker flushes a partially filled buffer when traffic is low, as illustrated in Fig 3.

The handler looks like the following (some parts are omitted for simplicity):

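```go
// ConsumerSessionMessage pairs a message with the session it arrived on, so
// whoever processes it later can mark it (fields inferred from their usage).
type ConsumerSessionMessage struct {
	Session sarama.ConsumerGroupSession
	Message *sarama.ConsumerMessage
}

type batchConsumerGroupHandler struct {
	cfg *BatchConsumerConfig

	// buffer
	ticker *time.Ticker
	msgBuf []*ConsumerSessionMessage

	// lock to protect buffer operations: Sarama calls ConsumeClaim in a
	// separate goroutine per claimed partition, all sharing this handler
	mu sync.Mutex

	// callback
	cb func([]*ConsumerSessionMessage) error
}

// ... (constructor, insertMessage and flushBuffer omitted)

func (h *batchConsumerGroupHandler) ConsumeClaim(session sarama.ConsumerGroupSession, claim sarama.ConsumerGroupClaim) error {
	claimMsgChan := claim.Messages()
	for {
		select {
		case message, ok := <-claimMsgChan:
			if !ok {
				// The claim ended (e.g. on a rebalance): flush what is
				// buffered instead of leaving it behind.
				h.mu.Lock()
				h.flushBuffer()
				h.mu.Unlock()
				return nil
			}
			h.insertMessage(&ConsumerSessionMessage{
				Message: message,
				Session: session,
			})
		case <-h.ticker.C:
			// Low traffic: periodically flush a partially filled buffer.
			h.mu.Lock()
			h.flushBuffer()
			h.mu.Unlock()
		}
	}
}
```

With this consuming pattern we achieved a speed of 447k messages per second: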

```
batch consumer consumed 8160000 messages at speed 447858.14/s
```

That is even slower than sync mode. Why? Notice that CPU utilization in this mode was only about 1 core, so whatever batching gained seems to have been cancelled out by an under-utilized consumer. In fact, we are still in synchronous mode: the callback still runs inside the receiving loop. How about handling data asynchronously with multiple goroutines? Let’s explore that next.

MultiAsync mode

In this pattern, a buffered channel decouples receiving messages from handling them, and multiple goroutines wait on that channel so that we can fully use Go’s concurrency. This is simply the ‘Fan in / Fan out’ pattern you may have heard of, though we only use ‘Fan out’ here. The process is illustrated in Fig 4.

The code is shown below:

```go
// ConsumeClaim only hands messages off; the worker goroutines process them.
func (h *multiAsyncConsumerGroupHandler) ConsumeClaim(session sarama.ConsumerGroupSession, claim sarama.ConsumerGroupClaim) error {
	claimMsgChan := claim.Messages()
	for message := range claimMsgChan {
		h.cfg.BufChan <- &ConsumerSessionMessage{
			Session: session,
			Message: message,
		}
	}
	return nil
}

// to use the handler
func StartMultiAsyncConsumer(broker, topic string) (*ConsumerGroup, error) {
	var count int64
	var start = time.Now()
	var bufChan = make(chan *ConsumerSessionMessage, 1000)

	// Fan out: 8 workers share the same buffered channel.
	for i := 0; i < 8; i++ {
		go func() {
			for message := range bufChan {
				if err := decodeMessage(message.Message.Value); err == nil {
					// Workers finish out of order, and Sarama ignores a mark lower
					// than the highest offset already marked for the partition, so
					// after a crash some unprocessed messages may be skipped.
					message.Session.MarkMessage(message.Message, "")
				}
				cur := atomic.AddInt64(&count, 1)
				if cur%5000 == 0 {
					fmt.Printf("multi async consumer consumed %d messages at speed %.2f/s\n", cur, float64(cur)/time.Since(start).Seconds())
				}
			}
		}()
	}

	handler := NewMultiAsyncConsumerGroupHandler(&MultiAsyncConsumerConfig{
		BufChan: bufChan,
	})
	consumer, err := NewConsumerGroup(broker, []string{topic}, fmt.Sprintf("multi-async-consumer-%d", time.Now().Unix()), handler)
	if err != nil {
		return nil, err
	}
	return consumer, nil
}
```

With this pattern we increased our speed to 560k messages per second, about 16 percent faster than sync mode! Not spectacular, but it looks like we are on the right track.

```
multi async consumer consumed 18620000 messages at speed 560736.64/s
```

Since we use multiple workers, CPU utilization also increased, but not by much: our goroutines were not fully engaged. Perhaps a single message is too light a workload for a worker? What if we feed our workers a batch of messages at a time? That brings us to the last pattern: MultiBatch mode.

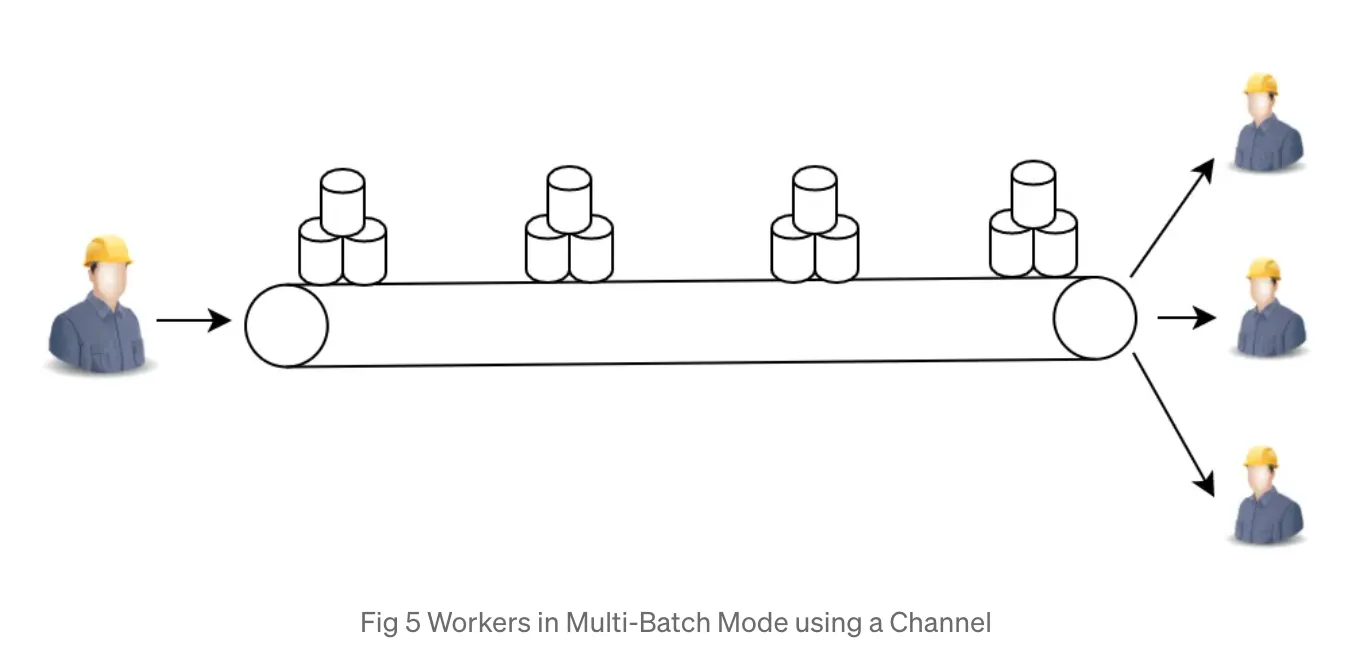

MultiBatch mode

In this mode, we pass a batch of messages through the buffered channel instead of a single message. It is essentially a combination of MultiAsync mode and Batch mode, as illustrated in Fig 5.

The code is therefore similar to the previous patterns:

```go
// batchMessages is a batch of buffered messages (definition not shown in the
// original; inferred from how it is used below).
type batchMessages []*ConsumerSessionMessage

type multiBatchConsumerGroupHandler struct {
	cfg *MultiBatchConsumerConfig
	// ...

	// buffer
	ticker *time.Ticker
	msgBuf batchMessages

	// lock to protect buffer operations
	mu sync.Mutex
}

// flushBuffer hands the current buffer to the workers and starts a new one.
// Callers must hold h.mu.
func (h *multiBatchConsumerGroupHandler) flushBuffer() {
	if len(h.msgBuf) > 0 {
		h.cfg.BufChan <- h.msgBuf
		h.msgBuf = make([]*ConsumerSessionMessage, 0, h.cfg.BufferCapacity)
	}
}

// insertMessage buffers msg and flushes once MaxBufSize messages are queued.
func (h *multiBatchConsumerGroupHandler) insertMessage(msg *ConsumerSessionMessage) {
	h.mu.Lock()
	defer h.mu.Unlock()
	h.msgBuf = append(h.msgBuf, msg)
	if len(h.msgBuf) >= h.cfg.MaxBufSize {
		h.flushBuffer()
	}
}

// how to use
func StartMultiBatchConsumer(broker, topic string) (*ConsumerGroup, error) {
	var count int64
	var start = time.Now()
	var bufChan = make(chan batchMessages, 1000)

	// Fan out batches instead of single messages to 8 workers.
	for i := 0; i < 8; i++ {
		go func() {
			for messages := range bufChan {
				for j := range messages {
					if err := decodeMessage(messages[j].Message.Value); err == nil {
						messages[j].Session.MarkMessage(messages[j].Message, "")
					}
				}
				n := int64(len(messages))
				cur := atomic.AddInt64(&count, n)
				// Batch sizes vary (ticker flushes are partial), so report when
				// the count crosses a multiple of 1000 rather than testing
				// cur%1000 == 0, which could stop matching after a partial batch.
				if (cur-n)/1000 != cur/1000 {
					fmt.Printf("multi batch consumer consumed %d messages at speed %.2f/s\n", cur, float64(cur)/time.Since(start).Seconds())
				}
			}
		}()
	}

	handler := NewMultiBatchConsumerGroupHandler(&MultiBatchConsumerConfig{
		MaxBufSize: 1000,
		BufChan:    bufChan,
	})
	consumer, err := NewConsumerGroup(broker, []string{topic}, fmt.Sprintf("multi-batch-consumer-%d", time.Now().Unix()), handler)
	if err != nil {
		return nil, err
	}
	return consumer, nil
}
```

With this change, our consuming speed peaked at 2448k messages per second, about 5 times faster than the original sync mode! CPU utilization rose to 3.6 cores, so all goroutines were now busy. In my own application, this mode can process more than 1 billion messages within one hour. Quite an impressive improvement, right?

Conclusion

- You don’t need to adopt the MultiBatch pattern from the start; in other words, avoid premature optimization. But if you are under pressure to process massive amounts of data within a short time, give these patterns a try.

- Batching and Fan In / Fan Out are excellent programming techniques worth your attention, especially the latter. Whenever you tackle problems with high concurrency, remember to employ more workers to share the load.

Source: https://github.com/sceneryback/kafka-best-practices
