Alan is a complete conversational voice AI platform that lets you build, debug, integrate, and iterate on a voice assistant for your application.

Previously, you would’ve had to build everything from the ground up: learning Python, creating your own machine learning model, hosting it in the cloud, training speech recognition software, and then tediously integrating it all into your app.

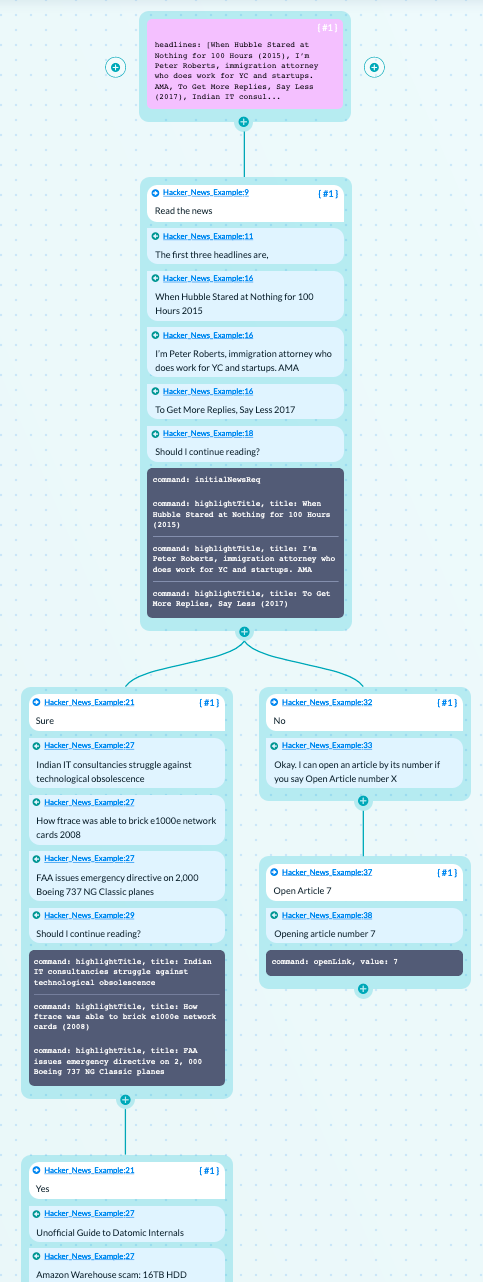

Alan Platform Diagram

The Alan Platform automates this with its cloud-based infrastructure, which incorporates a large number of advanced voice recognition and Spoken Language Understanding technologies. This lets Alan support complete conversational voice experiences, which developers define in Alan Studio using scripts written in JavaScript. Alan then integrates the voice AI into any application through easy-to-use SDKs.
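To make this division of labor concrete, here is a minimal, self-contained JavaScript sketch of the general pattern: a voice script maps spoken phrases to a reply and an optional command, and the client app reacts to that command by updating its own state. Everything in it (`voiceScript`, `matchUtterance`, `createApp`, `handleVoiceTurn`, and the `/top` and `/newest` routes) is hypothetical and only simulates the flow locally with regular expressions. It is not the Alan Studio scripting API or the Alan SDK.

```javascript
// Hypothetical sketch: a voice "script" maps phrases to replies and app commands,
// and the app reacts to those commands. All names are illustrative only; this is
// NOT the Alan Studio or Alan SDK API.

// Each entry pairs a phrase pattern with a spoken reply and an optional command.
const voiceScript = [
  { pattern: /\b(show|open)\b.*\btop stories\b/i, reply: 'Opening the top stories.', command: { name: 'navigate', route: '/top' } },
  { pattern: /\b(show|open)\b.*\bnew stories\b/i, reply: 'Opening the newest stories.', command: { name: 'navigate', route: '/newest' } },
  { pattern: /\bwhat can i say\b/i, reply: 'Try "show me the top stories".', command: null },
];

// "Script side": find the first entry whose pattern matches the utterance.
function matchUtterance(utterance) {
  for (const entry of voiceScript) {
    if (entry.pattern.test(utterance)) {
      return { reply: entry.reply, command: entry.command };
    }
  }
  return { reply: "Sorry, I didn't get that.", command: null };
}

// "App side": the app receives a command and updates its own state (its UI route).
function createApp() {
  const state = { route: '/' };
  return {
    state,
    onCommand(command) {
      // Ignore turns that carry no command (pure voice replies).
      if (command && command.name === 'navigate') {
        state.route = command.route;
      }
    },
  };
}

// One voice turn: match the utterance, forward any command to the app, return the reply.
function handleVoiceTurn(app, utterance) {
  const { reply, command } = matchUtterance(utterance);
  app.onCommand(command);
  return reply;
}

const app = createApp();
console.log(handleVoiceTurn(app, 'Show me the top stories')); // Opening the top stories.
console.log(app.state.route); // /top
```

Regular-expression matching stands in for real Spoken Language Understanding only to keep the sketch runnable. The point is the split: the script decides what to say and which command to send, while the app decides what each command does to its interface.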

To show what the Alan Platform can do, we’ll start with a React example app and add a few simple features that turn it into a multi-modal interface. The React application we’ll be working with is a Hacker News clone, available on the React Example Projects page.

You can also refer to this repository for the completed result. If you are unfamiliar with JavaScript or React, you can clone this completed repository instead of the one below and follow along. It also contains the completed Alan script, Hacker-News-Example-Alan-Script.zip.

To start, clone the example project and make sure it works correctly:

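```shell
# Clone the Hacker News clone and enter the project directory
$ git clone https://github.com/clintonwoo/hackernews-react-graphql.git
$ cd hackernews-react-graphql
# Install dependencies, then start the app
$ npm install
$ npm start
```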

Now that we have the app saved on our computer, we can start on the voice script. Remember where it is saved; we’ll need to come back to it later!
