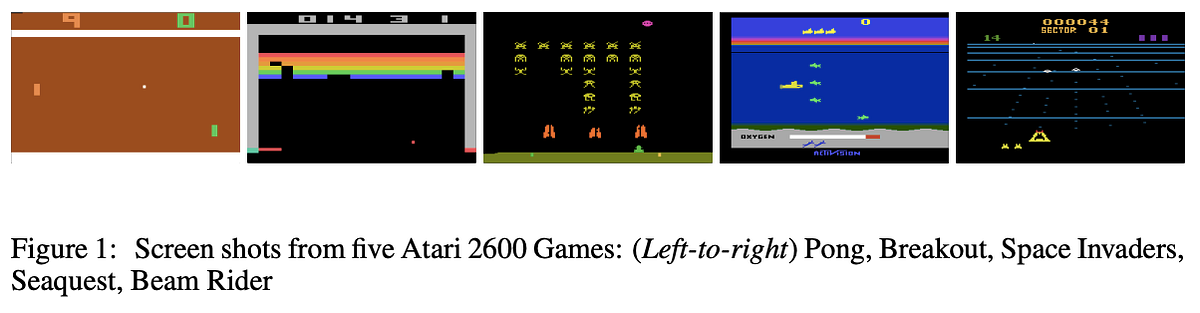

This paper presents a deep reinforcement learning model that learns control policies directly from high-dimensional sensory input (raw pixels from video frames). The model, created by DeepMind, is a convolutional neural network (CNN) trained with a variant of Q-learning. It was applied to seven Atari 2600 games, where it outperformed all previous approaches on six of them and surpassed a human expert on three.

Introduction

The paper lists some of the challenges that reinforcement learning (RL) poses for deep learning (DL). Most successful DL applications rely on large amounts of labeled training data, whereas RL must learn from a scalar reward signal that is often sparse, noisy and delayed. The reward for a particular action may arrive only after several thousand time-steps. This delay between action and reward is in stark contrast to the direct association between input and target in supervised learning. Most DL algorithms also assume that the data samples are independent, whereas in RL the input is a sequence of highly correlated states. Finally, in RL the data distribution changes as the algorithm learns new behaviors.

This paper trains a CNN with a variant of the Q-learning algorithm to overcome the challenges mentioned above, using stochastic gradient descent to update the weights. The problems of correlated data and non-stationary distributions are handled with an experience replay mechanism that randomly samples previous transitions. The approach is tested on the Atari game environment, which provides a training ground with high-dimensional visual input. The goal is to create a single neural network agent whose only inputs are the raw video frames, the reward and terminal signals, and the set of valid actions. It is noteworthy that the same network architecture and hyperparameters were used for all the games.

1. Background

In the proposed task, an agent interacts with an environment Ɛ (the Atari emulator) by selecting, at each time-step, an action a_t from a set of valid actions A = {1, …, K}. Ɛ may be stochastic. The agent does not see the emulator's internal state; it observes an image x_t ∈ R^d, a vector of raw pixel values representing the current screen, and receives a reward r_t that depends on the actions taken. Because the current screen x_t alone does not give a complete picture of the situation, the paper treats the sequence of actions and observations up to time t as the state: s_t = x_1, a_1, x_2, …, a_{t-1}, x_t. The agent's goal is to maximize future rewards, which are discounted by a factor γ per time-step.
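The discounted future reward that the agent maximizes can be computed with a short backward pass over a reward sequence. This is only a minimal sketch: the function name `discounted_return` and the sample rewards are illustrative, and `gamma` stands for the discount factor (written γ in the original paper).

```python
def discounted_return(rewards, gamma):
    """Return sum_k gamma**k * rewards[k] for a finite reward sequence."""
    total = 0.0
    # Work backwards so each step applies R = r + gamma * R_next.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# A reward of 1 that arrives three steps in the future is worth gamma**3 now.
print(discounted_return([0.0, 0.0, 0.0, 1.0], gamma=0.5))  # prints 0.125
```

With a discount factor below 1, a delayed reward contributes less to the return the further away it is, which is why sparse and delayed rewards make credit assignment hard.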

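The optimal action-value function is defined as Q*(s, a) = max_π E[R_t | s_t = s, a_t = a, π], the maximum expected return achievable by following any policy π after seeing sequence s and taking action a. It obeys the Bellman equation. In principle Q* can be found by value iteration, but that is impractical here because the action-value function would be estimated separately for each sequence, with no generalization. The authors therefore use a non-linear function approximator, a neural network with weights θ (the Q-network), to estimate the optimal Q-value. The Q-network is trained by minimizing a sequence of loss functions L_i(θ_i) that changes at every iteration i.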
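For reference, the Bellman equation and the per-iteration loss mentioned above can be written out as follows. The notation follows the original DQN paper: γ is the discount factor, ρ is the behaviour distribution over sequences and actions, and θ_{i-1} denotes the parameters from the previous iteration, which are held fixed while L_i is optimized.

```latex
Q^*(s, a) = \mathbb{E}_{s' \sim \mathcal{E}}\left[ r + \gamma \max_{a'} Q^*(s', a') \,\middle|\, s, a \right]

L_i(\theta_i) = \mathbb{E}_{s, a \sim \rho(\cdot)}\left[ \left( y_i - Q(s, a; \theta_i) \right)^2 \right],
\qquad
y_i = \mathbb{E}_{s' \sim \mathcal{E}}\left[ r + \gamma \max_{a'} Q(s', a'; \theta_{i-1}) \,\middle|\, s, a \right]
```

Unlike in supervised learning, the target y_i depends on the network weights, which is one reason the loss changes at every iteration.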

The Q-learning algorithm is model-free: it solves the task directly from samples of the emulator without building an explicit model of the game's rules, in contrast to model-based approaches, which first estimate the environment's dynamics (for example as a transition matrix). It is also off-policy: it learns about the greedy policy, which always picks the action with the highest Q-value, while following a different behaviour distribution that ensures adequate exploration of the state space. In the game environment, the agent can take one of several possible actions, and each action is associated with a Q-value. Rather than always selecting the action with the highest Q-value, the agent follows an ε-greedy strategy: it picks the greedy action with probability 1 − ε and a random action with probability ε, which lets it see where other actions lead and 'build an experience'. Training begins with ε = 1, so the agent mostly 'explores' the environment; ε is then annealed linearly to 0.1 over the first million frames and held there, so the agent increasingly 'exploits' what it has learned.
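The exploration strategy described above can be sketched in a few lines. The function names `epsilon_at` and `select_action` are made up for illustration. The default schedule (1.0 down to 0.1 over the first million frames, then constant) is the one reported in the original paper, and `q_values` is assumed to be a plain list with one entry per action.

```python
import random


def epsilon_at(step, start=1.0, end=0.1, anneal_steps=1_000_000):
    """Linearly anneal epsilon from `start` to `end`, then hold it at `end`."""
    fraction = min(step / anneal_steps, 1.0)
    return (1.0 - fraction) * start + fraction * end


def select_action(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # Greedy choice: index of the largest Q-value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


print(epsilon_at(0), epsilon_at(2_000_000))  # prints 1.0 0.1
```

With `epsilon=0.0` the agent is purely greedy, and with `epsilon=1.0` it acts uniformly at random, so the schedule moves the agent smoothly from exploration towards exploitation.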

The Atari game environment suffers from a 'perceptual aliasing' problem: a single frame at any given instant does not reveal the full state of the environment (for example, the direction and speed of a moving object cannot be read off one still image). The paper addresses this by taking the last 4 frames of history and stacking them to produce the input to the Q-function.
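Frame stacking amounts to keeping a short rolling history. The sketch below uses a hypothetical `FrameStack` class and short strings as stand-ins for frames. Repeating the first frame at the start of an episode is an assumption made here to fill the history, not something the paper specifies.

```python
from collections import deque


class FrameStack:
    """Keep the most recent `size` frames as the input to the Q-function."""

    def __init__(self, size=4):
        self.size = size
        self.frames = deque(maxlen=size)

    def reset(self, first_frame):
        # Assumption: fill the history by repeating the first frame.
        self.frames.clear()
        for _ in range(self.size):
            self.frames.append(first_frame)
        return self.state()

    def push(self, frame):
        # A full deque drops its oldest entry when a new one is appended.
        self.frames.append(frame)
        return self.state()

    def state(self):
        # Oldest frame first, newest frame last.
        return tuple(self.frames)


stack = FrameStack()
stack.reset("f0")
print(stack.push("f1"))  # prints ('f0', 'f0', 'f0', 'f1')
```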

2. Related Work

The most closely related prior work is neural fitted Q-learning (NFQ), which optimizes a sequence of loss functions using the RPROP algorithm. However, NFQ uses batch updates, whose computational cost per iteration grows with the size of the data set. This paper instead uses stochastic gradient updates, which have a low constant cost per iteration and scale to large data sets. When applied to visual input, NFQ first used deep autoencoders to learn a low-dimensional representation of the task and then ran on that representation. This paper's novelty lies in applying reinforcement learning end-to-end, directly from the raw visual input, so that the network learns features that are directly relevant to discriminating action-values.

3. Deep Reinforcement Learning architecture

The paper aims to connect a reinforcement learning algorithm to a deep neural network that operates directly on RGB images and is trained with stochastic gradient updates. To do so, it uses a technique called experience replay. The agent's experience at each time-step is stored as a tuple e_t = (s_t, a_t, r_t, s_{t+1}) in a dataset pooled over many episodes, called the replay memory. Q-learning updates are applied to minibatches of experience drawn at random from this pool. Random sampling breaks the correlation between consecutive samples and makes the training data look more 'stationary' to the neural network, since each minibatch mixes experience generated under many earlier strategies.
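A replay memory of this kind can be sketched with a bounded deque and uniform sampling. The names `Transition` and `ReplayMemory` are illustrative, and plain integers stand in for states in the usage lines.

```python
import random
from collections import deque, namedtuple

# One stored experience: (state, action, reward, next state).
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayMemory:
    """Fixed-capacity buffer of transitions with uniform random sampling."""

    def __init__(self, capacity):
        # Once `capacity` is reached, the oldest transition is discarded.
        self.buffer = deque(maxlen=capacity)

    def store(self, state, action, reward, next_state):
        self.buffer.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement from the stored transitions.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


memory = ReplayMemory(capacity=3)
for t in range(5):
    memory.store(state=t, action=0, reward=0.0, next_state=t + 1)
print(len(memory), [tr.state for tr in memory.buffer])  # prints 3 [2, 3, 4]
```

Because minibatches are drawn from the whole buffer rather than from the latest steps, a single transition can take part in many updates.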

The deep Q-learning algorithm with experience replay has the following advantages:

· Each step of the experience is potentially used in many weight updates, which allows for greater data efficiency.

· By using experience replay the behavior distribution is averaged over many of its previous states, smoothing out learning and avoiding oscillations or divergence in the parameters.

The proposed algorithm has the limitation that it stores only the last N experience tuples in the replay memory and samples from them uniformly at random, so every transition is treated as equally important. A better approach would be to emphasize the transitions from which the agent can learn the most.

The raw Atari frames are 210x160 pixels, which is large and computationally expensive to process directly. To reduce the input dimensionality, each frame is converted to greyscale and down-sampled to 110x84. The image is then cropped to an 84x84 region that roughly captures the playing area; the square crop is needed because the authors use a GPU implementation of 2D convolutions that accepts only square inputs. The last four frames in history are pre-processed this way and stacked to produce the input to the Q-function.
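The pre-processing steps can be illustrated without any image library. The sketch below makes several assumptions that are not from the paper: greyscale is computed as a plain channel average, down-sampling uses nearest-neighbour indexing, and the crop offset `crop_top=18` is an arbitrary placeholder. Nested Python lists keep the example dependency-free; real code would normally use an array library.

```python
def to_greyscale(rgb_frame):
    """Average the R, G, B values of each pixel (one simple greyscale choice)."""
    return [[sum(pixel) / 3.0 for pixel in row] for row in rgb_frame]


def downsample(frame, out_height, out_width):
    """Nearest-neighbour resize of a 2D list to out_height x out_width."""
    in_height, in_width = len(frame), len(frame[0])
    return [
        [frame[y * in_height // out_height][x * in_width // out_width]
         for x in range(out_width)]
        for y in range(out_height)
    ]


def crop_rows(frame, top, height):
    """Keep `height` consecutive rows starting at row `top`."""
    return frame[top:top + height]


def preprocess(rgb_frame, crop_top=18):
    # crop_top is a placeholder offset, not a value taken from the paper.
    grey = to_greyscale(rgb_frame)          # 210 rows x 160 columns
    small = downsample(grey, 110, 84)       # 110 rows x 84 columns
    return crop_rows(small, crop_top, 84)   # 84 rows x 84 columns


# Synthetic 210x160 RGB frame standing in for an emulator screen.
frame = [[(x % 256, y % 256, 0) for x in range(160)] for y in range(210)]
out = preprocess(frame)
print(len(out), len(out[0]))  # prints 84 84
```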

Instead of giving a (state, action) pair as the input to the neural network, in this architecture, only the state representation is given as input and separate output units are present for each possible action for the given state. The main advantage of this type of architecture is the ability to compute Q-values for all possible actions in a given state with only a single forward pass through the network.
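One consequence of this design is that both the greedy action and the maximum in the Q-learning target come from a single vector of outputs. The sketch below assumes that vector is already available as a plain list. The function names, the `terminal` flag and the numbers are illustrative, and `gamma` is the discount factor.

```python
def greedy_action(q_values):
    """Index of the largest Q-value in the output vector."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def q_learning_target(reward, next_q_values, gamma, terminal=False):
    """Target r + gamma * max_a' Q(s', a'), or just r at a terminal state."""
    if terminal:
        return reward
    return reward + gamma * max(next_q_values)


# Pretend this came from one forward pass on the next state (made-up numbers).
q_next = [0.5, 2.0, 1.0]
print(greedy_action(q_next), q_learning_target(1.0, q_next, gamma=0.5))  # prints 1 2.0
```

Had the network taken a (state, action) pair as input, the same maximum would have needed one forward pass per action.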

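The input to the neural network is an 84x84x4 image (four stacked pre-processed frames), which passes through the following layers:

· a convolutional layer with 16 8x8 filters, stride 4, ReLU activation

· a convolutional layer with 32 4x4 filters, stride 2, ReLU activation

· a fully connected layer with 256 rectified units

· a fully connected linear output layer with one output per valid action (between 4 and 18, depending on the game)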
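The layer sizes can be checked with a little arithmetic. The sketch below assumes convolutions without padding, which the summary above does not state, and uses made-up helper names. It only tracks shapes and does not build a trainable network.

```python
def conv_output_size(input_size, kernel_size, stride):
    """Spatial size after a convolution with no padding (an assumption)."""
    return (input_size - kernel_size) // stride + 1


def dqn_layer_shapes(input_size=84, input_channels=4):
    """Shapes of the activations after each layer described above."""
    shapes = [("input", (input_size, input_size, input_channels))]
    size = conv_output_size(input_size, kernel_size=8, stride=4)
    shapes.append(("conv1", (size, size, 16)))
    size = conv_output_size(size, kernel_size=4, stride=2)
    shapes.append(("conv2", (size, size, 32)))
    # The fully connected layer sees the flattened conv2 output.
    shapes.append(("flatten", (size * size * 32,)))
    shapes.append(("fc", (256,)))
    return shapes


for name, shape in dqn_layer_shapes():
    print(name, shape)
```

Under the no-padding assumption, the 84x84 input shrinks to 20x20 after the first convolution and to 9x9 after the second, so the fully connected layer receives 9 * 9 * 32 = 2592 values.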
