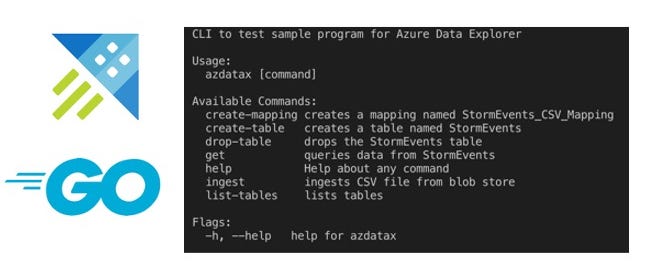

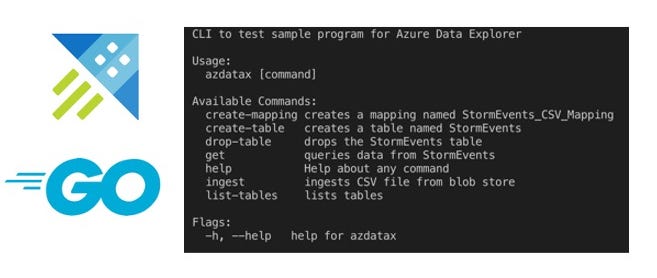

With the help of an example, this blog post walks you through how to use the Azure Data Explorer Go SDK to ingest data from an Azure Blob storage container and query it programmatically. After a quick overview of how to set up an Azure Data Explorer cluster (and a database), we will explore the code to understand what’s going on (and how), and finally test the application using a simple CLI.

Azure Data Explorer using the Go SDK

The sample data is a CSV file that can be downloaded from here

_The code is available on GitHub:_ https://github.com/abhirockzz/azure-dataexplorer-go

What is Azure Data Explorer?

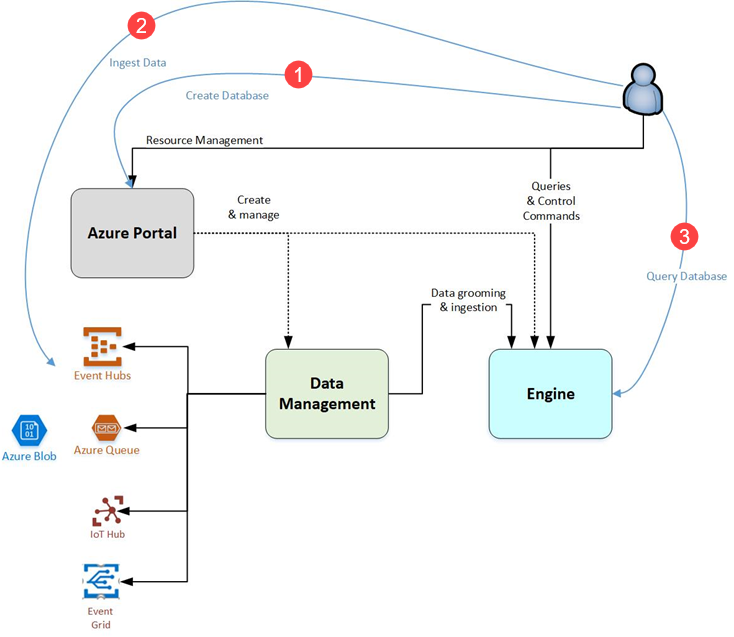

Azure Data Explorer (also known as Kusto) is a fast and scalable data exploration service for analyzing large volumes of diverse data from any data source, such as websites, applications, IoT devices, and more. This data can then be used for diagnostics, monitoring, reporting, machine learning, and additional analytics capabilities.

It supports several ingestion methods, including connectors to common services like Event Hub, programmatic ingestion using SDKs such as .NET and Python, and direct access to the engine for exploration purposes. It also integrates with analytics and modeling services for additional analysis and visualization of data using tools such as Power BI.

Go SDK for Azure Data Explorer

The Go client SDK allows you to query, control and ingest into Azure Data Explorer clusters using Go. Please note that this SDK is for interacting with an existing Azure Data Explorer cluster (and related components such as tables). To create Azure Data Explorer clusters, databases etc., you should use the admin component (control plane) SDK, which is part of the larger Azure SDK for Go.

_API docs:_ https://godoc.org/github.com/Azure/azure-kusto-go

Before getting started, here is what you need to try out the sample application.

Pre-requisites

You will need a Microsoft Azure account. Go ahead and sign up for a free one!

Install the Azure CLI if you don’t have it already (should be quick!)

Set up an Azure Data Explorer cluster, create a database and configure security

Start by creating a cluster using az kusto cluster create. Once that’s done, create a database with az kusto database create, e.g.

    az kusto cluster create -l "Central US" -n MyADXCluster -g MyADXResGrp --sku Standard_D11_v2 --capacity 2
    az kusto database create --cluster-name MyADXCluster -g MyADXResGrp -n MyADXdb
    az kusto database show --cluster-name MyADXCluster --name MyADXdb --resource-group MyADXResGrp

Create a Service Principal using az ad sp create-for-rbac:

    az ad sp create-for-rbac -n "test-datax-sp"

You will get a JSON response like the one below. Note down the appId, password and tenant, as you will use them in subsequent steps.

    {
      "appId": "fe7280c7-5705-4789-b17f-71a472340429",
      "displayName": "test-datax-sp",
      "name": "http://test-datax-sp",
      "password": "29c719dd-f2b3-46de-b71c-4004fb6116ee",
      "tenant": "42f988bf-86f1-42af-91ab-2d7cd011db42"
    }
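If you would rather capture these values in code than copy them by hand, the response can be decoded with Go's standard encoding/json package. The following is a minimal sketch and not part of the sample application: the servicePrincipal type and the parseServicePrincipal function are hypothetical names introduced here, and the JSON literal uses placeholder values.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// servicePrincipal is a hypothetical type holding the three fields of the
// `az ad sp create-for-rbac` response that the later steps need.
type servicePrincipal struct {
	AppID    string `json:"appId"`
	Password string `json:"password"`
	Tenant   string `json:"tenant"`
}

// parseServicePrincipal decodes the CLI's JSON output and rejects responses
// that are missing any of the required fields.
func parseServicePrincipal(raw []byte) (servicePrincipal, error) {
	var sp servicePrincipal
	if err := json.Unmarshal(raw, &sp); err != nil {
		return sp, fmt.Errorf("decoding service principal JSON: %w", err)
	}
	if sp.AppID == "" || sp.Password == "" || sp.Tenant == "" {
		return sp, fmt.Errorf("response is missing appId, password or tenant")
	}
	return sp, nil
}

func main() {
	// Placeholder values, not real credentials.
	raw := []byte(`{"appId":"<app-id>","displayName":"test-datax-sp","password":"<secret>","tenant":"<tenant-id>"}`)
	sp, err := parseServicePrincipal(raw)
	if err != nil {
		log.Fatal(err)
	}
	// Print only the non-secret values.
	fmt.Println("client ID:", sp.AppID, "tenant ID:", sp.Tenant)
}
```

The appId maps to the client ID, password to the client secret, and tenant to the tenant ID used when connecting to the cluster.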

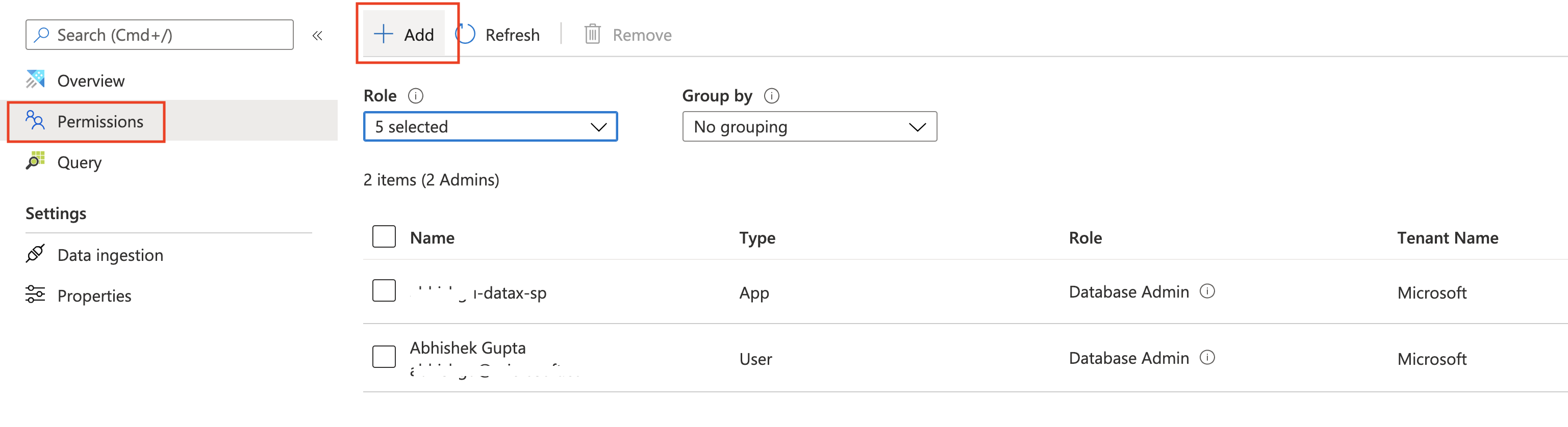

You will need to assign roles to the Service Principal so that it can access the database you just created. To do so using the Azure Portal, open the Azure Data Explorer cluster, navigate to Data > Databases and select the database. Choose Permissions from the left menu and click Add to proceed.

_For more information, please refer to_ Secure Azure Data Explorer clusters in Azure

Code walk through

At a high level, this is what the sample code does:

- Connect to an Azure Data Explorer cluster (of course!)

- Create a table (and list the tables just to be sure)

- Create a data mapping

- Ingest/load existing data from a CSV file in Azure Blob storage

- Run a query on the data you just ingested

Let’s look at each of these steps

Connect to an Azure Data Explorer cluster

We use a Service Principal to authenticate to Azure Data Explorer, providing the Azure tenant ID, client ID and client secret (which were obtained after creating the principal using az ad sp create-for-rbac):

    // Authenticate with the Service Principal's client ID, client secret and tenant ID.
    // The variable is named authorizer so that it does not shadow the auth package.
    authorizer := kusto.Authorization{Config: auth.NewClientCredentialsConfig(clientID, clientSecret, tenantID)}
    kc, err := kusto.New(kustoEndpoint, authorizer)
    if err != nil {
    	log.Fatal("failed to create kusto client: ", err)
    }

_You can check out the code in the GitHub repository linked above._
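The snippet above expects clientID, clientSecret, tenantID and kustoEndpoint to be available already. One common way to supply them is through environment variables; the sketch below shows that idea using only the standard library. The variable names (AZURE_SP_CLIENT_ID and so on), the connConfig type and the loadConnConfig function are assumptions made for this illustration and may not match what the sample application uses.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// connConfig groups the values needed to create the Kusto client.
type connConfig struct {
	ClientID, ClientSecret, TenantID, Endpoint string
}

// loadConnConfig reads the settings through getenv (normally os.Getenv) and
// reports every missing variable in a single error.
// The variable names are assumptions made for this sketch.
func loadConnConfig(getenv func(string) string) (connConfig, error) {
	cfg := connConfig{
		ClientID:     getenv("AZURE_SP_CLIENT_ID"),
		ClientSecret: getenv("AZURE_SP_CLIENT_SECRET"),
		TenantID:     getenv("AZURE_SP_TENANT_ID"),
		Endpoint:     getenv("KUSTO_ENDPOINT"),
	}
	required := []struct{ name, value string }{
		{"AZURE_SP_CLIENT_ID", cfg.ClientID},
		{"AZURE_SP_CLIENT_SECRET", cfg.ClientSecret},
		{"AZURE_SP_TENANT_ID", cfg.TenantID},
		{"KUSTO_ENDPOINT", cfg.Endpoint},
	}
	var missing []string
	for _, r := range required {
		if r.value == "" {
			missing = append(missing, r.name)
		}
	}
	if len(missing) > 0 {
		return connConfig{}, fmt.Errorf("missing environment variables: %s", strings.Join(missing, ", "))
	}
	return cfg, nil
}

func main() {
	cfg, err := loadConnConfig(os.Getenv)
	if err != nil {
		fmt.Println("configuration error:", err)
		return
	}
	// Never print the secret itself.
	fmt.Println("connecting to", cfg.Endpoint, "as", cfg.ClientID)
}
```

Passing the lookup function as a parameter keeps the loader easy to test without touching the real process environment.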

Create table and data mappings

To create a table, we simply execute the .create table command:

    // CreateTable runs the table-creation management command against the database.
    func CreateTable(kc *kusto.Client, kustoDB string) {
    	_, err := kc.Mgmt(context.Background(), kustoDB, kusto.NewStmt(createTableCommand))
    	if err != nil {
    		log.Fatal("failed to create table: ", err)
    	}
    	log.Printf("table %s created\n", kustoTable)
    }

_You can check out the code in the GitHub repository linked above._

Notice how we use client.Mgmt to execute this operation, since it is a management command. Later, you will see how to execute a query to read data from Azure Data Explorer.
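The distinction between the two kinds of request is visible in the request text itself: Kusto management commands start with a dot (for example .create table or .show tables), while queries do not. The sketch below illustrates that rule with a hypothetical isMgmtCommand helper; it is not part of the SDK or the sample, and the table columns in the example are an illustrative subset.

```go
package main

import (
	"fmt"
	"strings"
)

// isMgmtCommand reports whether the request text is a Kusto management
// command. Management commands start with a dot; queries never do.
func isMgmtCommand(text string) bool {
	return strings.HasPrefix(strings.TrimSpace(text), ".")
}

func main() {
	requests := []string{
		".create table StormEvents (State: string, EventType: string)",
		".show tables",
		"StormEvents | count",
	}
	for _, r := range requests {
		if isMgmtCommand(r) {
			fmt.Println("Mgmt :", r) // would be sent with the client's Mgmt method
		} else {
			fmt.Println("Query:", r) // would be sent with the client's Query method
		}
	}
}
```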

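To confirm, we run the .show tables command to list the tables in the database:

    // FindTable lists the tables in the database.
    func FindTable(kc *kusto.Client, kustoDB string) []TableInfo {
    	var tables []TableInfo
    	ri, err := kc.Mgmt(context.Background(), kustoDB, kusto.NewStmt(testQuery))
    	if err != nil {
    		log.Fatalf("failed to execute query %s - %s", testQuery, err)
    	}
    	for {
    		row, err := ri.Next()
    		if err == io.EOF {
    			break // no more rows
    		}
    		if err != nil {
    			// Stop here: the row must not be used when Next returns an error.
    			log.Fatalf("failed to read row - %s", err)
    		}
    		// Use a fresh struct per row so values never leak between rows.
    		var t TableInfo
    		if err := row.ToStruct(&t); err != nil {
    			log.Fatalf("failed to convert row - %s", err)
    		}
    		tables = append(tables, t)
    	}
    	return tables
    }

    ...

    // TableInfo maps the TableName and DatabaseName columns of the result.
    type TableInfo struct {
    	Name string `kusto:"TableName"`
    	DB   string `kusto:"DatabaseName"`
    }

_You can check out the code in the GitHub repository linked above._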

After executing the query, [ToStruct](https://github.com/Azure/azure-kusto-go/blob/master/kusto/data/table/table.go#L120) is used to save each result row to an instance of the user-defined TableInfo struct. The kusto struct tags name the result columns that each field is populated from.

Once the table is created, we can configure the data mapping that is used during ingestion to map incoming data to columns in the Kusto table:

    // CreateMapping runs the management command that creates the ingestion mapping.
    func CreateMapping(kc *kusto.Client, kustoDB string) {
    	_, err := kc.Mgmt(context.Background(), kustoDB, kusto.NewStmt(createMappingCommand))
    	if err != nil {
    		log.Fatal("failed to create mapping: ", err)
    	}
    	log.Printf("mapping %s created\n", kustoMappingRefName)
    }

_You can check out the code in the GitHub repository linked above._
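The createMappingCommand value is not shown in this post. As a rough idea of what a CSV mapping command can look like, the sketch below generates one from an ordered column list, assigning each column the ordinal of its position in the CSV file. Several things here are assumptions: the column list is an illustrative subset, csvMappingCommand is a hypothetical helper, and the JSON property names (Name, datatype, Ordinal) follow an older mapping layout, so check the current ingestion mapping documentation before relying on them.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// csvColumn describes one entry of a CSV ingestion mapping.
// The JSON property names are an assumption of this sketch.
type csvColumn struct {
	Name     string `json:"Name"`
	DataType string `json:"datatype"`
	Ordinal  int    `json:"Ordinal"`
}

// column is a table column name paired with its Kusto data type.
type column struct{ name, dataType string }

// csvMappingCommand builds a `.create table ... ingestion csv mapping`
// command, taking each ordinal from the column's position in cols.
func csvMappingCommand(table, mappingName string, cols []column) (string, error) {
	entries := make([]csvColumn, len(cols))
	for i, c := range cols {
		entries[i] = csvColumn{Name: c.name, DataType: c.dataType, Ordinal: i}
	}
	body, err := json.Marshal(entries)
	if err != nil {
		return "", err
	}
	return fmt.Sprintf(".create table %s ingestion csv mapping '%s' '%s'", table, mappingName, body), nil
}

func main() {
	// Illustrative subset of columns, in the order they appear in the CSV.
	cols := []column{
		{"StartTime", "datetime"},
		{"EndTime", "datetime"},
		{"State", "string"},
	}
	cmd, err := csvMappingCommand("StormEvents", "StormEvents_CSV_Mapping", cols)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(cmd)
}
```

The sample passes a predefined command to kusto.NewStmt, so treat the output here as text to paste into such a definition rather than something wired directly into the client.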

Ingest data from Azure Blob storage

To ingest data, we use the [Ingestion](https://godoc.org/github.com/Azure/azure-kusto-go/kusto/ingest#Ingestion) client:

    // The last %s is the access token, appended directly after the file name.
    const blobStorePathFormat = "https://%s.blob.core.windows.net/%s/%s%s"

    // CSVFromBlob ingests a CSV file from Azure Blob storage into the given table.
    func CSVFromBlob(kc *kusto.Client, blobStoreAccountName, blobStoreContainer, blobStoreToken, blobStoreFileName, kustoMappingRefName, kustoDB, kustoTable string) {
    	kIngest, err := ingest.New(kc, kustoDB, kustoTable)
    	if err != nil {
    		log.Fatal("failed to create ingestion client: ", err)
    	}
    	blobStorePath := fmt.Sprintf(blobStorePathFormat, blobStoreAccountName, blobStoreContainer, blobStoreFileName, blobStoreToken)
    	// Ingest the blob as CSV, applying the mapping created earlier.
    	err = kIngest.FromFile(context.Background(), blobStorePath, ingest.FileFormat(ingest.CSV), ingest.IngestionMappingRef(kustoMappingRefName, ingest.CSV))
    	if err != nil {
    		log.Fatal("failed to ingest file: ", err)
    	}
    	log.Println("Ingested file from -", blobStorePath)
    }

_You can check out the code in the GitHub repository linked above._

We have the path to the file in Azure Blob storage, and we pass it to the [FromFile](https://godoc.org/github.com/Azure/azure-kusto-go/kusto/ingest#Ingestion.FromFile) function along with the file type (CSV in this case) and the data mapping we just created (StormEvents_CSV_Mapping).
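Because the path format appends the token directly after the file name, the final URL is only valid if the token carries its own leading question mark. The sketch below shows one defensive way to assemble the path. The blobURL helper is hypothetical, the account, container and token values are placeholders, and it is an assumption that the token is a SAS query string; the sample application may handle this differently.

```go
package main

import (
	"fmt"
	"strings"
)

// Same layout as the sample: account, container, file name, then the token.
const blobStorePathFormat = "https://%s.blob.core.windows.net/%s/%s%s"

// blobURL builds the blob path used for ingestion. The token is appended
// verbatim by the format string, so a leading "?" is added when it is missing.
func blobURL(account, container, fileName, sasToken string) (string, error) {
	if account == "" || container == "" || fileName == "" {
		return "", fmt.Errorf("account, container and file name are required")
	}
	if sasToken != "" && !strings.HasPrefix(sasToken, "?") {
		sasToken = "?" + sasToken
	}
	return fmt.Sprintf(blobStorePathFormat, account, container, fileName, sasToken), nil
}

func main() {
	// Placeholder account, container and token values.
	u, err := blobURL("mystorageaccount", "mycontainer", "StormEvents.csv", "sv=<version>&sig=<signature>")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(u)
}
```

Since the resulting URL embeds the token, it is worth avoiding writing the full path to logs in anything beyond a demo.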
