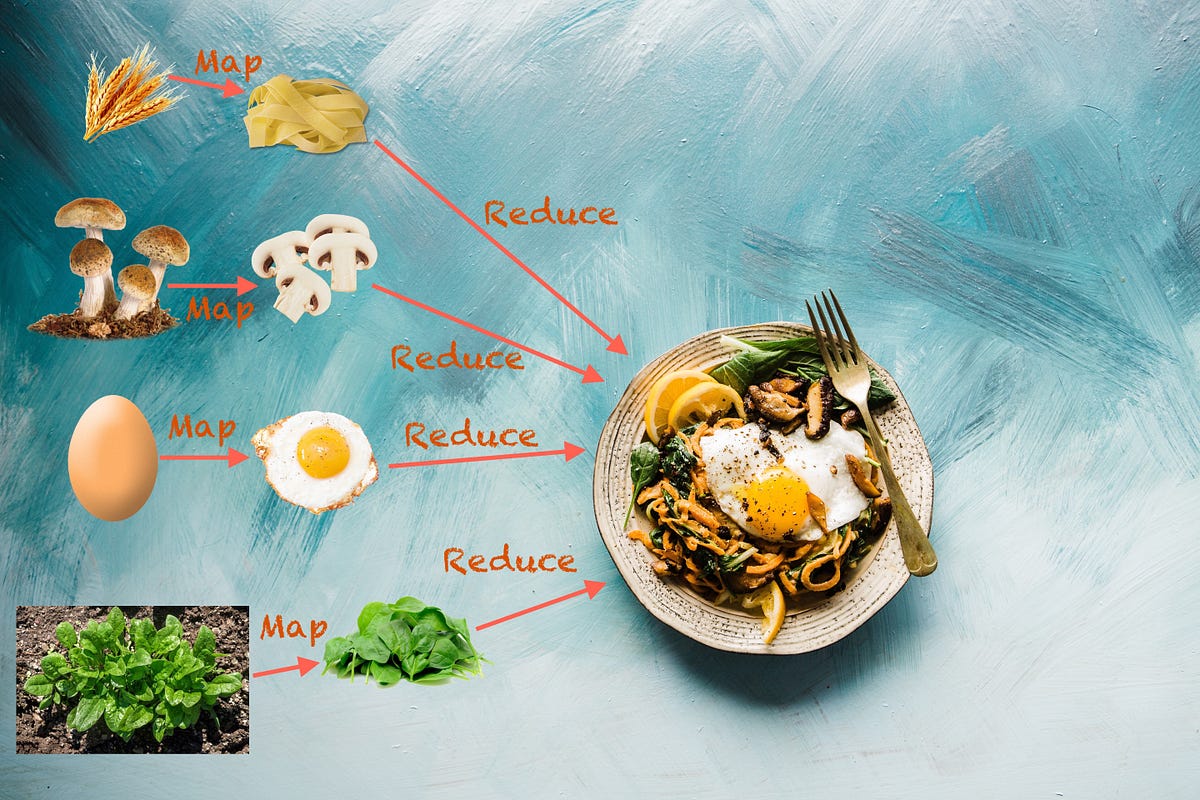

MapReduce is a programming technique for manipulating large data sets, whereas Hadoop MapReduce is a specific implementation of this programming technique.

Following is how the process looks in general:

Map(s) (for individual chunk of input) ->

- sorting individual map outputs ->

Combiner(s) (for each individual map output) ->

- shuffle and partition for distribution to reducers ->

- sorting individual reducer input ->

Reducer(s) (for sorted data of group of partitions)

Hadoop’s MapReduce In General

Hadoop MapReduce is a framework to write applications which process enormous amounts of data (multi-terabyte) in-parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

A typical MapReduce job:

- splits the input data-set into independent data sets

- each individual dataset is processed in parallel by the map tasks

- then the framework sorts the outputs of maps,

- this output is then used as input to the reduce tasks

In general, both the input and the output of the job are stored in a file-system.

The Hadoop MapReduce framework takes care of scheduling tasks, monitoring them and re-execution of the failed tasks.

Generally, the Hadoop’s MapReduce framework and Hadoop Distribution File System (HDFS) run on the same nodes, which means that each node is used for compute and storage both. The benefit of such a configuration is that tasks can be scheduled on the nodes where data resides, and thus results in high aggregated bandwidth across the cluster.

The MapReduce framework consists of:

- a single master

ResourceManager(Hadoop YARN), - one worker

NodeManagerper cluster-node, and MRAppMasterper application

The resource manager keeps track of compute resources, assigns them to specific tasks, and schedules jobs across the cluster.

In order to configure a MapReduce job, at minimum, an application specifies:

- input source and output destination

- map and reduce function

A job along with its configuration is then submitted by the Hadoop’s job client to YARN, which is then responsible for distributing it across the cluster, schedules tasks, monitors them, and provide their status back to the job client.

Although the Hadoop framework is implemented in Java, MapReduce applications need not be written in Java. We can use [**mrjobs**](https://github.com/Yelp/mrjob) Python package to write MapReduce jobs that can be run on Hadoop or AWS.

Inputs And Outputs Of MapReduce Jobs

For both input and output, the data is stored in key-value pairs. Each key and value class has to be serialisable by MapReduce framework, and thus should implement the [**Writable**](https://hadoop.apache.org/docs/stable/api/org/apache/hadoop/io/Writable.html) interface. Apart from this, the key class need to implement [**WritableComparable**](https://hadoop.apache.org/docs/stable/api/org/apache/hadoop/io/WritableComparable.html) interface as well which is required for sort mechanism.

The Mapper

A [**Mapper**](https://hadoop.apache.org/docs/stable/api/org/apache/hadoop/mapreduce/Mapper.html) map is a task which input key/value pairs to a set of output key/value pairs (which are then used by further steps). The output records do not need to be of the same type as that of input records, also an input pair may be mapped to zero or more output pairs.

All values associated with a given output key are subsequently grouped by the framework, and passed to the Reducer(s) to determine the final output. The Mapper outputs are sorted and then partitioned per Reducer. The total number of partitions is the same as the same as the number of reduce tasks for the job.

Shuffle & Sort Phases

The output of individual mapper output is sorted by the framework.

Before feeding data to reducers, the data from all mappers is partitioned by some grouping of keys. Each partition contains data from one or more keys. The data for each partition is sorted by keys. The partitions are then distributed to reducers. Each reducer input data is data from one or more partitions (generally 1:1 ratio).

The Reducer

A [**Reducer**](https://hadoop.apache.org/docs/stable/api/org/apache/hadoop/mapreduce/Reducer.html) reduces a set of intermediate values (output of shuffle and sort phase) which share a key to a smaller set of values.

In the reducer phase, the reduce method is called for each <key, (list of values)> pair in the grouped inputs. Note that the output of Reducer is not sorted.

The right number of reducers are generally between 0.95 and 1.75 multiplied by <no. of nodes> * <no. of maximum containers per node>.

The Combiner

We can optionally specify a Combiner (know as local-reducer) to perform local aggregation of the intermediate outputs, which helps to cut down the amount of data transferred from the the Mapper to Reducer. In many case, same reducer code can be used as combiner as well.

Let’s look at an simple example

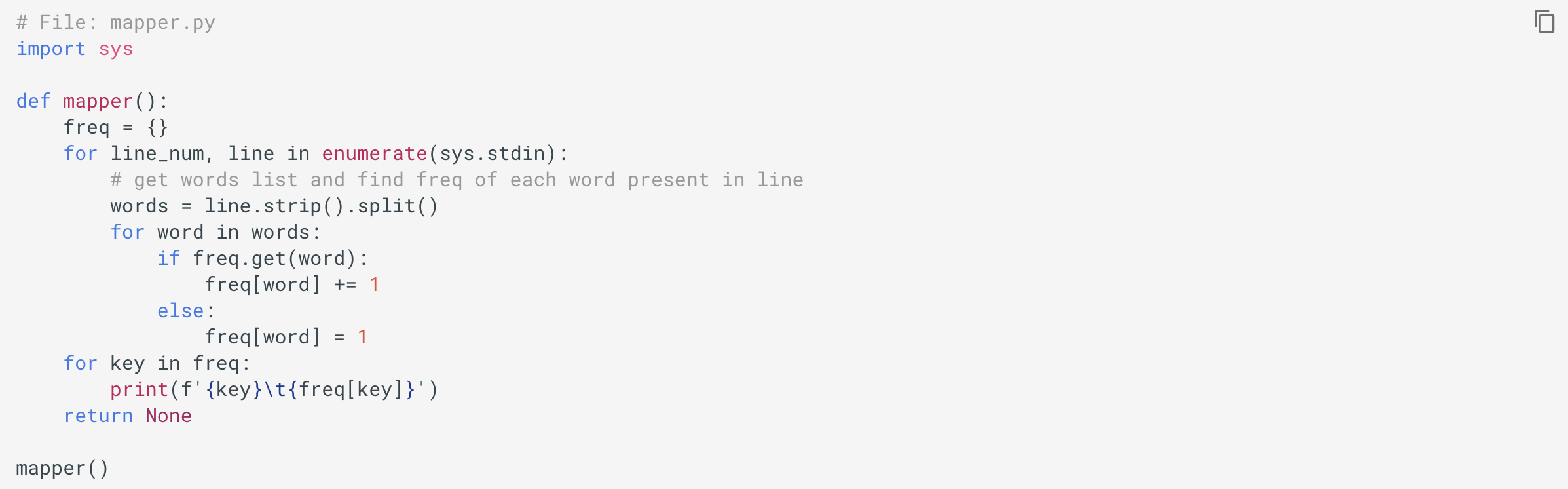

Let’s look at a simple example on counting word frequencies. Consider following mapper.py file:

#big-data #data-science #mapreduce #data-engineering #hadoop #data analysisa