In the previous blog, we introduced Apache Spark: why it is preferred, its features and advantages, its architecture and how it works, along with its industrial use cases. In this article, we'll get started with PySpark (Apache Spark using Python)! By the end of this article, you'll have a better understanding of what PySpark is, why we choose Python for Spark, and its features and advantages, followed by a quick installation guide to set up PySpark on your own computer. Finally, this article will shed some light on some of the important Spark concepts you'll need in order to proceed further.

What is PySpark?

Source: Databricks

As we have already discussed, Apache Spark supports Python alongside other languages, which makes it easier for developers who are more comfortable working in Python. Because Python is relatively easier to learn and use than Scala, Spark's native language, many developers prefer it for building Spark applications. Python is also the de facto language for many data analytics workloads: while Apache Spark is one of the most extensively used big data frameworks today, Python is one of the most widely used programming languages, especially for data science. So why not integrate them?

This is where PySpark, the Python API for Spark, comes in. Since many data scientists and analysts use Python for its rich libraries, integrating it with Spark gives them the best of both worlds. With strong support from the open source community, PySpark was built on the Py4J library, which lets Python programs access objects in the Java Virtual Machine (JVM) where Spark runs; this is how PySpark works with Spark's RDDs from Python. High-speed data processing, powerful caching, real-time and in-memory computation, and low latency are some of the features that make PySpark stand out among data processing frameworks.

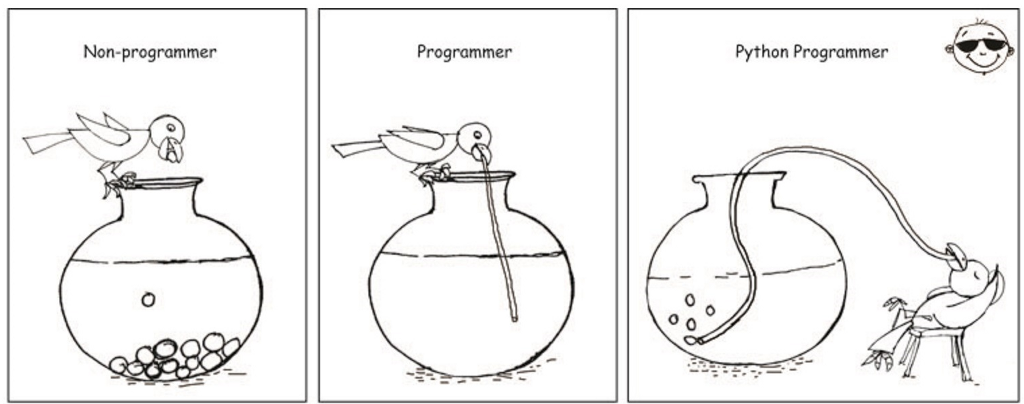

Why choose Python for Spark?

Source: becominghuman.ai

Python is easier to learn and use than many other programming languages, thanks to its simple syntax and standard libraries. Because Python is dynamically typed, RDDs in PySpark can hold objects of multiple types. Moreover, Python has an extensive and rich set of libraries for a wide range of tasks such as machine learning, natural language processing, visualization, local data transformations and many more.

While Python has many libraries for data analysis and manipulation, such as Pandas, NumPy and SciPy, these libraries keep their data in memory on a single machine. Hence, they are not ideal for working with very large datasets on the order of terabytes or petabytes. With Pandas, scalability is an issue. When data arrives in real time or near real time, and large amounts of it need to be brought into an integrated space for transformation, processing and analysis, Pandas would not be an optimal choice. Instead, we need a framework that does the work faster and more efficiently through distributed and pipelined processing. This is where PySpark comes into play.
