In the first part of this series, we learned some important terms and concepts in Reinforcement Learning (RL). In the second part, we saw how RL is applied to an autonomous race car.

In this article, we will learn about the taxonomy of Reinforcement Learning algorithms. Rather than a single taxonomy, we will look at several, each from a different point of view.

Once we are familiar with the taxonomy, we will learn more about each of its branches in future episodes. Without wasting any more time, let’s take a deep breath, make a cup of chocolate, and learn together about the bird’s-eye view of the RL algorithms taxonomy!


Model-Free vs Model-Based

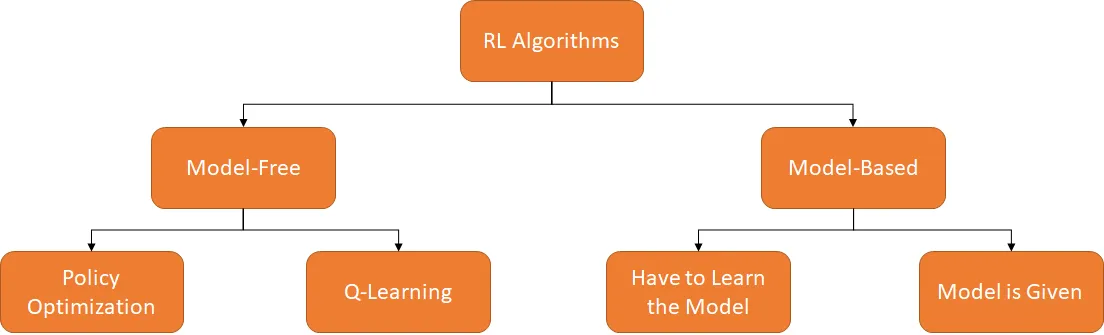

One way to classify RL algorithms is by asking whether the agent has access to a model of the environment. In other words, can the agent predict how the environment will respond to its actions, that is, which next state and reward will follow?

From this point of view, RL algorithms fall into two branches: model-free and model-based.

- Model-based algorithms try to choose the optimal policy using a learned model of the environment.

- Model-free algorithms choose the optimal policy based on the agent’s trial-and-error experience.
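
To make the difference concrete, here is a minimal sketch on a toy problem. Everything in it is an assumption made for illustration: the four-state chain environment, the `step` function, and the names `model_based_policy` and `model_free_policy` are hypothetical. The model-based side uses value iteration as one example of planning, and for brevity it is simply handed the true dynamics instead of learning a model first. The model-free side uses tabular Q-learning as one example of learning from trial and error.

```python
import random

# Hypothetical toy environment: a chain of states 0-1-2-3, where 3 is the goal.
N_STATES, GOAL = 4, 3
ACTIONS = (0, 1)  # 0 = move left, 1 = move right
GAMMA = 0.9       # discount factor


def step(state, action):
    """Environment dynamics: return (next_state, reward) for one move."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def backup(V, state, action):
    """One-step lookahead value of taking `action` in `state`."""
    next_state, reward = step(state, action)
    return reward + GAMMA * V[next_state]


def model_based_policy(sweeps=50):
    """Plan with a model: the agent may query step() for any (state, action).

    Assumption: the model is given here, not learned, to keep the sketch short.
    """
    V = [0.0] * N_STATES  # V[GOAL] stays 0 because the goal is terminal
    for _ in range(sweeps):
        for s in range(GOAL):
            V[s] = max(backup(V, s, a) for a in ACTIONS)
    return [max(ACTIONS, key=lambda a: backup(V, s, a)) for s in range(GOAL)]


def model_free_policy(episodes=500, alpha=0.5, max_steps=50, seed=0):
    """Learn from trial and error: the agent only sees sampled transitions."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            a = rng.choice(ACTIONS)  # explore with uniformly random actions
            s2, r = step(s, a)       # experience one real transition
            # Q-learning update toward the bootstrapped one-step target
            Q[s][a] += alpha * (r + GAMMA * max(Q[s2]) - Q[s][a])
            s = s2
            if s == GOAL:
                break
    return [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]


if __name__ == "__main__":
    print("model-based:", model_based_policy())
    print("model-free: ", model_free_policy())
```

On this toy chain both functions should end up choosing "right" in every non-goal state. What differs is how they get there: the planner sweeps over every state and action by asking the model, while the Q-learner has to act in the environment and update its estimates one sampled transition at a time.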

Both model-free and model-based algorithms have their own upsides and downsides as listed in the table below.
