Some background

My life in Site Reliability can be described as a perfect cocktail of building automation, reactive problem solving, and… posting “let me google that for you” links in Slack channels. One of the major themes is figuring out how we scale based on customer demand. Currently, at LogicMonitor, we run a hybrid environment: our core application and time series databases run on servers in our physical data centers, and these environments interact with microservices running in AWS. We call each of these hybrid production environments a “pod” (which, in hindsight, was a poor choice of name once we introduced Kubernetes). We use the Atlassian product Bamboo for CI/CD.

In the last year and a half, the team went through the grueling but satisfying task of converting all our applications that were housed on ECS and EC2 instances into pods in Kubernetes, with each production “pod” being considered a namespace.

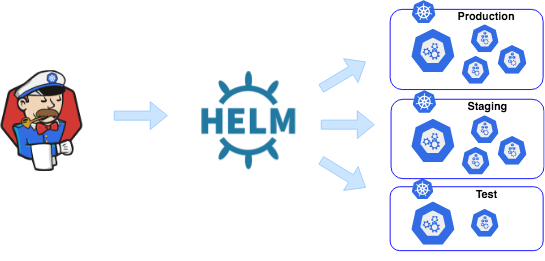

Deploying to k8s

Our deployment process was to use Helm from within Bamboo. Each application build would produce a Helm chart artifact and a Docker image. Upon deployment, the image is pushed to a private Docker repository and a helm install command is run with the corresponding chart. However, with each production environment being a Kubernetes namespace, we needed to deploy to multiple namespaces per cluster, which we handled by having an individual Bamboo deploy plan **per namespace, per application.** As of today we have 50 different prod environments and 8 microservices (for you math whizzes out there, that is 400 individual deploy plans). Sometimes, for just one application point release, it could take a developer an hour or two, sometimes longer, to deploy and verify all of production.

Building a new tool

So there's no way around this… if we want to scale our infrastructure effectively, we need to find a smarter way to deploy. Currently we use a variety of shell scripts that initiate the deployment process. A new tool needs to:

- Be able to query and list all the production namespaces

- Integrate helm/kubernetes libraries

- Deploy to multiple namespaces at once

- Centralize logs for deployment progress
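To make the last two requirements concrete, here is a minimal sketch (not the actual k8sdeploy code) of fanning a deployment out to several namespaces while funneling every log line through one place. It uses only the Go standard library. `DeployFunc`, `Result`, `DeployAll`, `demoDeploy`, and the namespace names are all hypothetical; in a real tool the deploy function would wrap the Helm client.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// DeployFunc is a hypothetical stand-in for whatever releases one application
// into one namespace (for example, a helm install or upgrade).
type DeployFunc func(namespace string, logf func(format string, args ...interface{})) error

// Result records the outcome for a single namespace.
type Result struct {
	Namespace string
	Err       error
}

// DeployAll calls deploy once per namespace, running at most parallel
// deployments at a time. Every log line is prefixed with its namespace and
// passed to sink one at a time, so all progress lands in a single stream.
// Results are returned in the same order as namespaces.
func DeployAll(namespaces []string, parallel int, deploy DeployFunc, sink func(line string)) []Result {
	if parallel < 1 {
		parallel = 1
	}
	results := make([]Result, len(namespaces))
	sem := make(chan struct{}, parallel) // counting semaphore
	var wg sync.WaitGroup
	var mu sync.Mutex // serializes calls to sink

	for i, ns := range namespaces {
		wg.Add(1)
		go func(i int, ns string) {
			defer wg.Done()
			sem <- struct{}{}
			defer func() { <-sem }()

			logf := func(format string, args ...interface{}) {
				mu.Lock()
				defer mu.Unlock()
				sink(fmt.Sprintf("[%s] %s", ns, fmt.Sprintf(format, args...)))
			}
			// Each goroutine writes only its own slot, so no lock is needed here.
			results[i] = Result{Namespace: ns, Err: deploy(ns, logf)}
		}(i, ns)
	}
	wg.Wait()
	return results
}

// demoDeploy pretends to deploy and fails on an empty namespace name.
func demoDeploy(namespace string, logf func(format string, args ...interface{})) error {
	if namespace == "" {
		return errors.New("namespace must not be empty")
	}
	logf("release deployed")
	return nil
}

func main() {
	namespaces := []string{"pod1", "pod2", "pod3"} // hypothetical namespace names
	results := DeployAll(namespaces, 2, demoDeploy, func(line string) { fmt.Println(line) })
	for _, r := range results {
		if r.Err != nil {
			fmt.Printf("%s failed: %v\n", r.Namespace, r.Err)
		}
	}
}
```

The log lines can arrive in any order across namespaces, which is why each one carries its namespace prefix. The `parallel` cap is a design choice for the sketch: it keeps a 50-namespace rollout from hitting the cluster all at once.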

Introducing k8sdeploy

k8sdeploy is a Go-based tool, written with the goal of creating a CLI that uses the Helm and Kubernetes client libraries to deploy to multiple namespaces at once.

Initialization:

This creates the Helm client and the Kubernetes client. The example below is for Helm v2. Helm 3 changes this drastically: it removes Tiller, so Helm communicates with the k8s API server directly via kubeconfig.

    import (
        "fmt"

        "k8s.io/client-go/kubernetes"
        "k8s.io/client-go/tools/clientcmd"
        "k8s.io/helm/pkg/helm"
        "k8s.io/helm/pkg/helm/portforwarder"
    )

    // GetKubeClient generates a k8s client based on kubeconfig
    func GetKubeClient(kubeconfig string) (*kubernetes.Clientset, error) {
        config, err := clientcmd.BuildConfigFromFlags("", kubeconfig)
        if err != nil {
            return nil, err // hand the error to the caller instead of panicking
        }
        return kubernetes.NewForConfig(config)
    }

    // GetHelmClientv2 creates a helm2 client based on kubeconfig
    func GetHelmClientv2(kubeconfig string) (*helm.Client, error) {
        config, err := clientcmd.BuildConfigFromFlags("", kubeconfig)
        if err != nil {
            return nil, err
        }
        client, err := kubernetes.NewForConfig(config)
        if err != nil {
            return nil, err
        }
        // port forward tiller (specific to helm2)
        tillerTunnel, err := portforwarder.New("kube-system", client, config)
        if err != nil {
            return nil, err
        }
        // new helm client
        host := fmt.Sprintf("127.0.0.1:%d", tillerTunnel.Local)
        return helm.NewClient(helm.Host(host)), nil
    }

Shared Informer:

After the tool creates the clients, it initializes a deployment watcher. This is a shared informer, which watches for changes in the current state of Kubernetes objects. In our case, upon deployment, we create a channel to start and stop a shared informer for the ReplicaSet resource. The goal here is not only to log the deployment status (“1 of 2 updated replicas are available”), but also to collate all the information into one stream, which is crucial when deploying to multiple namespaces at once.
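The collating part can be illustrated without a cluster. The sketch below is a standard-library stand-in, not client-go's informer API: it assumes each namespace's watcher pushes updates onto its own channel, and merges them into one stream of log lines that can be stopped via a stop channel. `ReplicaSetUpdate`, `StatusLine`, and `Collate` are hypothetical names; in a real tool the updates would presumably come from the informer's event handlers.

```go
package main

import (
	"fmt"
	"sync"
)

// ReplicaSetUpdate is a hypothetical, trimmed-down event holding the fields
// a watcher would read from a ReplicaSet's status.
type ReplicaSetUpdate struct {
	Namespace string
	Name      string
	Desired   int
	Available int
}

// StatusLine renders one update as a progress message.
func StatusLine(u ReplicaSetUpdate) string {
	return fmt.Sprintf("[%s] %s: %d of %d updated replicas are available",
		u.Namespace, u.Name, u.Available, u.Desired)
}

// Collate merges per-namespace update channels into a single stream of log
// lines. The returned channel is closed once every input is closed or stop
// is closed, whichever comes first.
func Collate(stop <-chan struct{}, inputs ...<-chan ReplicaSetUpdate) <-chan string {
	out := make(chan string)
	var wg sync.WaitGroup
	for _, in := range inputs {
		wg.Add(1)
		go func(in <-chan ReplicaSetUpdate) {
			defer wg.Done()
			for {
				select {
				case <-stop:
					return
				case u, ok := <-in:
					if !ok {
						return // this namespace's watcher is finished
					}
					select {
					case out <- StatusLine(u):
					case <-stop:
						return
					}
				}
			}
		}(in)
	}
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

func main() {
	// Hypothetical namespaces and ReplicaSet names.
	pod1 := make(chan ReplicaSetUpdate, 2)
	pod2 := make(chan ReplicaSetUpdate, 1)
	pod1 <- ReplicaSetUpdate{Namespace: "pod1", Name: "web", Desired: 2, Available: 1}
	pod1 <- ReplicaSetUpdate{Namespace: "pod1", Name: "web", Desired: 2, Available: 2}
	pod2 <- ReplicaSetUpdate{Namespace: "pod2", Name: "web", Desired: 2, Available: 1}
	close(pod1)
	close(pod2)

	stop := make(chan struct{})
	for line := range Collate(stop, pod1, pod2) {
		fmt.Println(line)
	}
}
```

Lines from different namespaces interleave in no particular order, but updates from a single namespace keep their order because one goroutine drains each input.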
