Introduction

This article is based on my previous article “Big Data Pipeline Recipe” where I tried to give a quick overview of all aspects of the Big Data world.

The goal of this article is to focus on the “T” of the a Big Data ETL pipeline reviewing the main frameworks to process large amount of data. The main focus will be the Hadoop ecosystem.

But first, let’s review what this phase is all about. Remember, at this point you have ingested raw data that is ready to be processed.

Big Data Processing Phase

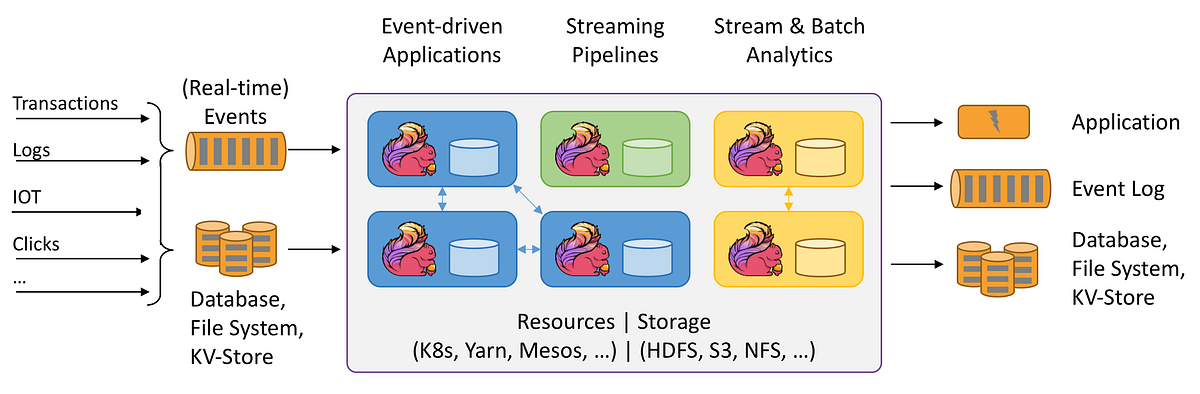

The goal of this phase is to** clean, normalize, process and save the data using a single schema. The end result is a trusted data set with a well defined schema. **Processing frameworks such Spark are used to process the data in parallel in a cluster of machines.

Generally, you would need to do some kind of processing such as:

- Validation: Validate data and quarantine bad data by storing it in a separate storage. Send alerts when a certain threshold is reached based on your data quality requirements.

- Wrangling and Cleansing: Clean your data and store it in another format to be further processed, for example replace inefficient JSON with Avro.

- Normalization and Standardization of values

- Rename fields

- …

#apache #scala #big-data #spark #etl