Learn how we use gRPC in combination with Kubernetes to improve the performance and usability of internal APIs.

Over the past year and a half, Cloudflare has been hard at work moving the back-end services that run in our non-edge locations from bare metal solutions and Mesos Marathon to a more unified approach using Kubernetes (K8s). We chose Kubernetes because it allowed us to split up our monolithic application into many different microservices with granular control of communication.

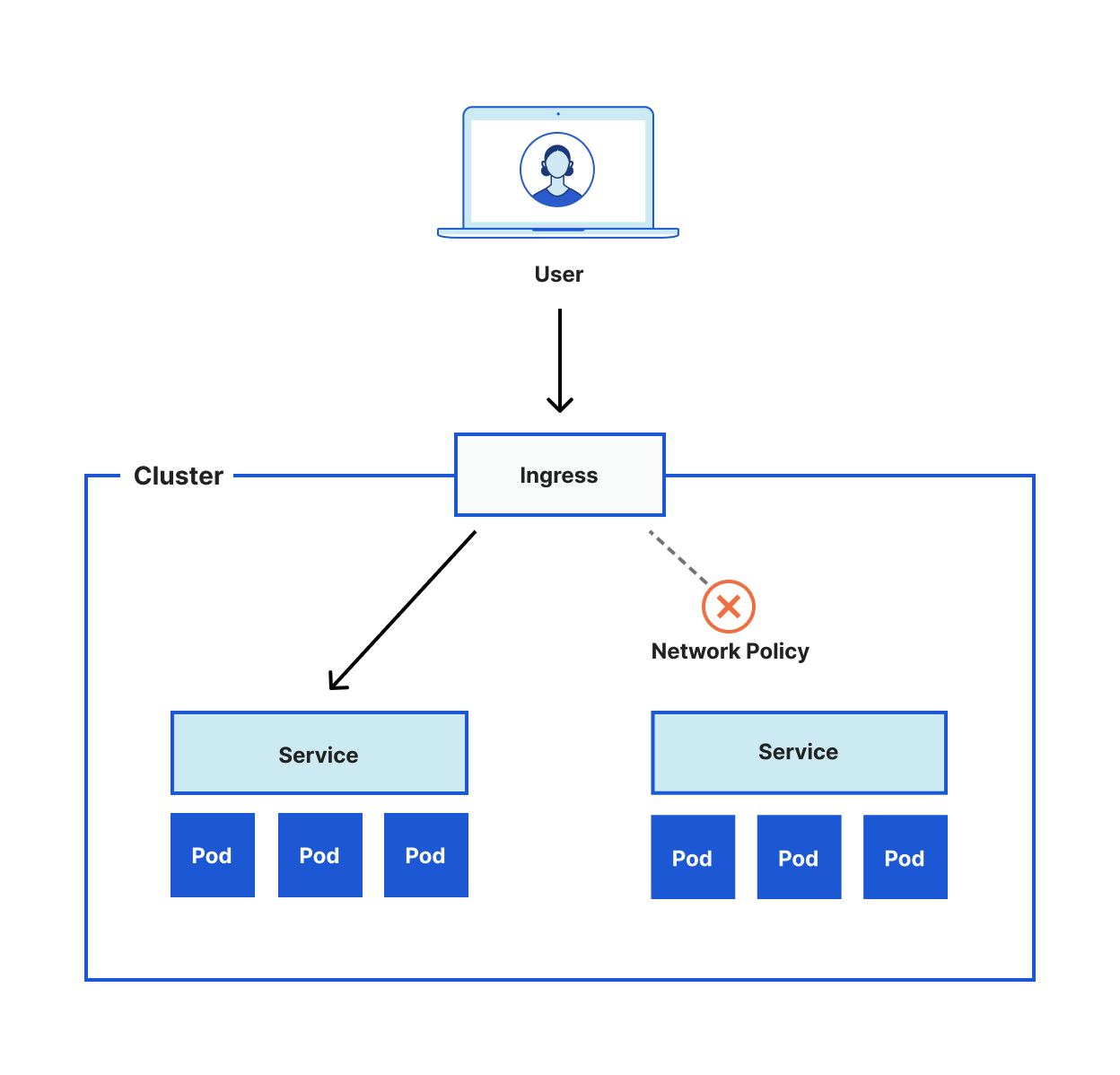

For example, a ReplicaSet in Kubernetes can provide high availability by ensuring that the correct number of pods is always available. A Pod in Kubernetes is similar to a container in Docker: it wraps one or more containers, and both are responsible for running the actual application. These pods can then be exposed through a Kubernetes Service, which abstracts away the number of replicas by providing a single endpoint that load balances to the pods behind it. The services can then be exposed to the Internet via an Ingress. Lastly, a network policy can protect against unwanted communication by ensuring the correct policies are applied to the application. These policies can include Layer 3 (IP) or Layer 4 (port and protocol) rules.
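As a rough sketch of how these four objects fit together, the Python snippet below builds a ReplicaSet, a Service, an Ingress, and a NetworkPolicy as plain dictionaries and prints them as a JSON list. It is illustrative only and not Cloudflare's actual configuration: the `example-api` name, the image, the host, the port, and the CIDR range are all hypothetical placeholders.

```python
import json

# All names and values below are hypothetical placeholders for illustration.
APP_LABELS = {"app": "example-api"}  # label that ties the objects together
PORT = 8080                          # port the application listens on


def replica_set(replicas: int) -> dict:
    """ReplicaSet: keeps `replicas` copies of the pod running."""
    return {
        "apiVersion": "apps/v1",
        "kind": "ReplicaSet",
        "metadata": {"name": "example-api"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": {"containers": [{
                    "name": "example-api",
                    "image": "registry.example.com/example-api:1.0",
                    "ports": [{"containerPort": PORT}],
                }]},
            },
        },
    }


def service() -> dict:
    """Service: one stable endpoint that load balances across matching pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "example-api"},
        "spec": {
            "selector": APP_LABELS,
            "ports": [{"port": 80, "targetPort": PORT}],
        },
    }


def ingress() -> dict:
    """Ingress: routes external HTTP traffic for a host to the Service."""
    backend = {"service": {"name": "example-api", "port": {"number": 80}}}
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "example-api"},
        "spec": {"rules": [{
            "host": "api.example.com",
            "http": {"paths": [
                {"path": "/", "pathType": "Prefix", "backend": backend},
            ]},
        }]},
    }


def network_policy() -> dict:
    """NetworkPolicy: allow ingress only from one CIDR (L3) on one TCP port (L4)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "example-api"},
        "spec": {
            "podSelector": {"matchLabels": APP_LABELS},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"ipBlock": {"cidr": "10.0.0.0/8"}}],
                "ports": [{"protocol": "TCP", "port": PORT}],
            }],
        },
    }


def manifests(replicas: int = 3) -> list:
    """Return the four objects in the order they are described above."""
    return [replica_set(replicas), service(), ingress(), network_policy()]


if __name__ == "__main__":
    bundle = {"apiVersion": "v1", "kind": "List", "items": manifests()}
    print(json.dumps(bundle, indent=2))
```

The point to notice is the shared `app: example-api` label: the ReplicaSet stamps it on every pod, and the Service and NetworkPolicy both select pods by it, which is how the number of replicas stays hidden from callers.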

The diagram above shows a simple example of this setup.

Though Kubernetes does an excellent job of providing the tools for communication and traffic management, it does not help the developer decide the best way to communicate between the applications running on the pods. In this post we will look at some of the decisions we made, and why we made them, as we discuss the pros and cons of two commonly used API architectures: REST and gRPC.

#grpc #kubernetes