As a data scientist, I get extremely happy when I find labeled data that requires very little cleaning, and not so happy when I have a hard time finding data at all. Of course, labeled data that requires little cleaning is extremely rare, and we can't change that. On the other hand, not finding any data is extremely rare too. How is this possible? Well, we live in a world in which the internet keeps growing every day, and the internet is basically a huge repository of data. Fortunately, we can use Python, requests, and Beautiful Soup to put this data to work.

BEFORE WE BEGIN

I wrote this heading in capital letters because it is extremely important. Scraping data from websites is generally not illegal, but it can be against a website's terms of use. It costs money to serve webpages to robots, so many websites try to avoid doing so. Please be respectful of the wishes of website owners.

Background

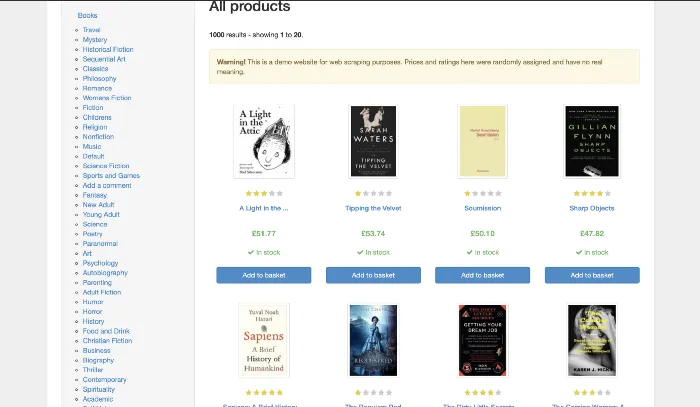

It's a Saturday morning, and we have finished reading all the books on our bookshelf. We feel like we need more books, so we hop online and find the following website: http://books.toscrape.com/index.html. Boy are we excited that we found it, because we have not read any of its books. We start writing down the names of the books by hand, but we think to ourselves, "There has to be an easier way." Well, there is! Let's go over how to do it.

Gathering Info

We are going to use requests to download the HTML pages and Beautiful Soup to parse them. First, we are going to access the website to get familiar with its layout.
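To make that first step concrete, here is a minimal sketch of how the two libraries might fit together: requests downloads the page, and Beautiful Soup turns the HTML into a tree we can explore. The helper names (`fetch_html`, `parse_page`, `summarize_layout`), the 10-second timeout, and the choice of the built-in `html.parser` are my own assumptions for illustration, not the only way to do it.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://books.toscrape.com/index.html"


def fetch_html(url, timeout=10):
    """Download a page and return its HTML as text (timeout is an assumed default)."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return response.text


def parse_page(html):
    """Parse raw HTML into a BeautifulSoup tree using Python's built-in parser."""
    return BeautifulSoup(html, "html.parser")


def summarize_layout(soup, limit=5):
    """Return the page title and the first few link targets to get a feel for the layout."""
    title = soup.title.get_text(strip=True) if soup.title else None
    links = [a["href"] for a in soup.find_all("a", href=True)][:limit]
    return title, links


if __name__ == "__main__":
    soup = parse_page(fetch_html(URL))
    title, links = summarize_layout(soup)
    print(title)
    print(links)
```

Printing the title and a handful of links is only a quick sanity check that the download and parsing worked. To really understand the layout, it still helps to open the page in a browser and inspect the HTML around the book names.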
