Welcome back, guys :) Today, I would like to revise what we have covered so far on deep image inpainting. I will also review one more image inpainting paper to consolidate our knowledge of the topic. Let’s learn and enjoy!

Recall

Let’s first briefly recall what we have learnt from the previous posts.

**Context Encoder (CE)** [1] is the first GAN-based inpainting algorithm in the literature. It emphasizes the importance of understanding the context of the entire image for the task of inpainting, and it uses a (channel-wise) fully-connected layer to achieve this. You can click here to link to the previous post for details.
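
To make the "channel-wise" part concrete, here is a minimal sketch in plain Python. It assumes a bottleneck of `m` feature maps of size `n` x `n`; the function names and the sizes used below are hypothetical and chosen only for illustration. In a channel-wise fully-connected layer, every channel gets its own dense layer over its spatial positions, so information spreads across the whole feature map but not across channels (in CE, a stride-1 convolution after this layer takes care of mixing the channels). Biases are ignored in the counts.

```python
def full_fc_params(m, n):
    """Weights of an ordinary fully-connected layer that maps
    m feature maps of size n x n to the same shape."""
    k = m * n * n
    return k * k  # m^2 * n^4


def channelwise_fc_params(m, n):
    """Weights when every channel has its own (n*n) x (n*n) dense layer."""
    k = n * n
    return m * k * k  # m * n^4


def channelwise_fc(features, weights):
    """Apply one dense layer per channel.

    features: list of m channels, each a flat list of n*n values.
    weights:  list of m square matrices, one per channel.
    """
    out = []
    for channel, matrix in zip(features, weights):
        # Every output position sees every input position of the SAME channel.
        out.append([sum(w * x for w, x in zip(row, channel)) for row in matrix])
    return out
```

For example, with the illustrative sizes `m = 256` and `n = 6`, `full_fc_params(256, 6)` gives 84,934,656 weights while `channelwise_fc_params(256, 6)` gives 331,776, i.e. a factor of `m` fewer.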

Multi-scale Neural Patch Synthesis (MNPS) [2] can be regarded as an improved version of CE. It consists of two networks, namely a content network and a texture network. The content network is a CE, and the texture network is a VGG-19 pre-trained for the object classification task. The idea of employing a texture network comes from the recent success of neural style transfer. Simply speaking, the neural responses in a network pre-trained for high-level vision tasks (e.g. object classification) contain information about image styles. By encouraging similar neural responses inside and outside the missing regions, we can further enhance the texture details of the generated pixels, and hence the completed images look more realistic. Interested readers are highly recommended to skim through the post here for details.
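
The idea of "encouraging similar neural responses inside and outside the missing regions" can be sketched as a simple nearest-neighbour penalty. This is only a simplified illustration and not the exact loss from the paper: the patches here are plain lists of numbers standing in for VGG-19 feature patches, the distance is a plain squared Euclidean distance, and the names `squared_distance` and `texture_term` are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two flattened feature patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def texture_term(hole_patches, context_patches):
    """Sum, over all patches inside the hole, of the distance to the
    most similar patch found outside the hole."""
    total = 0.0
    for patch in hole_patches:
        # Match each generated patch to its nearest neighbour in the known region.
        total += min(squared_distance(patch, c) for c in context_patches)
    return total


# Toy stand-ins for neural responses (NOT real VGG-19 features).
context = [[1.0, 0.0], [0.0, 1.0]]
hole = [[1.0, 0.0], [0.0, 2.0]]
print(texture_term(hole, context))  # 1.0
```

The term is zero when every patch inside the hole already has an identical counterpart outside it, and it grows as the generated textures drift away from the textures of the known region.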

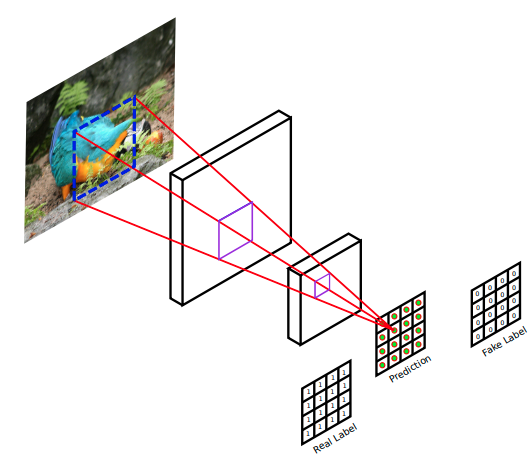

Globally and Locally Consistent Image Completion (GLCIC) [3] is a milestone in the task of deep image inpainting. The authors adopt a Fully Convolutional Network (FCN) with Dilated Convolution (DilatedConv) as the framework of their proposed model. The FCN allows various input sizes, and DilatedConv replaces the (channel-wise) fully-connected layer as the means of understanding the context of the entire image. In addition, two discriminators are used for distinguishing completed images from real images at two scales. A global discriminator looks at the entire image, while a local discriminator focuses on the local filled image patch. I highly recommend that readers take a look at the post here, especially for the dilated convolution in CNNs.
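
To see why dilated convolution can stand in for a fully-connected layer when it comes to capturing context, here is a small sketch in plain Python. It shows a 1-D dilated convolution and the receptive field of a stack of stride-1 layers. The function names and the dilation rates in the usage note are assumptions made for illustration, not the exact GLCIC configuration.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """'Valid' 1-D convolution (cross-correlation form, as in CNNs)
    whose kernel taps are spaced `dilation` samples apart."""
    span = (len(kernel) - 1) * dilation  # distance covered by one kernel
    return [
        sum(w * signal[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal) - span)
    ]


def receptive_field(kernel_size, dilations):
    """Receptive field (along one axis) of stacked stride-1 convolutions."""
    field = 1
    for d in dilations:
        # Each layer widens the view by (kernel_size - 1) * dilation.
        field += (kernel_size - 1) * d
    return field
```

With five 3x3 layers, `receptive_field(3, [1, 1, 1, 1, 1])` is 11, whereas `receptive_field(3, [1, 2, 4, 8, 16])` is 63, with the same number of weights. This is how a fully convolutional network can look at a much larger part of the image without a fully-connected layer.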

Today, we are going to review the paper Patch-Based Image Inpainting with Generative Adversarial Networks [4]. It can be regarded as a variant of GLCIC, so it is also a good chance to revise this typical network structure.
