Until a few months back, I never called containers just “containers”…I called them Docker containers. When I heard that OpenShift was moving to CRI-O, I thought, what’s the big deal? To understand the “big deal”, I had to understand the evolution of the k8s worker node.

Evolution

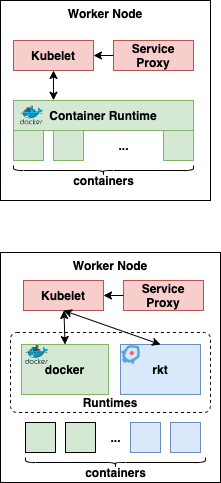

If you look at the evolution of the k8s architecture, there has been a significant change and optimization in the way worker nodes run containers…here are the significant stages of that evolution, as I attempted to capture them.

Stage 0: Docker is the captain

It started with a simple architecture: the kubelet is the agent on each worker node, and it receives commands from admins through the api-server on the master node. The kubelet used the Docker runtime to launch Docker containers (pulling the images from the registry). This was all good…until alternative container runtimes, with better performance and unique strengths, started appearing in the market. We realised it would be good if we could plug and play these runtimes…and the obvious design pattern to fix this is the “adapter/proxy” pattern, right? That led to the next stage.

Evolution is all about adapting to the changes in the ecosystem

Stage 1: CRI (Container Runtime Interface)

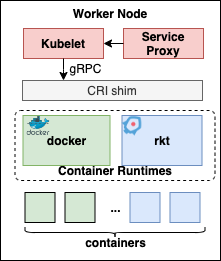

The Container Runtime Interface (CRI) spec was introduced in K8s 1.5. CRI consists of protocol buffers, a gRPC API and libraries. It brought in an abstraction layer that acts as an adapter: a gRPC client runs in the kubelet and a gRPC server runs in the CRI shim. This gave us a simpler way to run various container runtimes.
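To make the adapter idea concrete, here is a minimal sketch in Python. It is not the real CRI: there is no gRPC or protocol buffers here, and every name in it (`ContainerRuntime`, `DockerShim`, `OtherRuntimeShim`, `Kubelet`, `launch`) is made up for illustration. It only shows the shape of the pattern: the kubelet talks to one interface, and each shim translates that into whatever its runtime understands.

```python
from typing import List, Protocol


class ContainerRuntime(Protocol):
    """Hypothetical, heavily simplified stand-in for the CRI contract."""

    def pull_image(self, image: str) -> str: ...

    def run_container(self, image: str) -> str: ...


class DockerShim:
    """Hypothetical adapter: turns interface calls into Docker-style commands."""

    def pull_image(self, image: str) -> str:
        return f"docker pull {image}"

    def run_container(self, image: str) -> str:
        return f"docker run {image}"


class OtherRuntimeShim:
    """Hypothetical adapter for an imaginary alternative runtime."""

    def pull_image(self, image: str) -> str:
        return f"other-runtime fetch {image}"

    def run_container(self, image: str) -> str:
        return f"other-runtime start {image}"


class Kubelet:
    """Depends only on the interface, never on a concrete runtime."""

    def __init__(self, runtime: ContainerRuntime) -> None:
        self.runtime = runtime

    def launch(self, image: str) -> List[str]:
        # Same two calls regardless of which shim is plugged in.
        return [
            self.runtime.pull_image(image),
            self.runtime.run_container(image),
        ]


if __name__ == "__main__":
    for shim in (DockerShim(), OtherRuntimeShim()):
        print(Kubelet(shim).launch("nginx"))
```

Swapping the runtime means swapping the shim passed to `Kubelet`; the kubelet code itself does not change, which is the whole point of the adapter.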

Before we go any further…we need to understand what functionality is expected from a container runtime. The container runtime used to manage everything: downloading the images, unpacking them, running them, and also handling the networking and storage. It was fine…until we started realizing that this is like a monolith!!!

Let me layer these functionalities into 2 levels.

- **High level**: image management, transport, unpacking the images & API to send commands to run the container, network, storage (eg: rkt, docker, LXC, etc).

- **Low level**: run the containers.

It made more sense to split these functionalities into components that can be mixed and matched with various open-source options that provide more optimizations and efficiencies…and the obvious design/architecture pattern to fix this is the “layering” pattern, right? That led to the next stage.
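The two levels can be sketched roughly like this. Again, this is only an illustration under my own assumptions: `HighLevelRuntime`, `SimpleLowLevelRuntime` and the `/bundles/...` path are invented names, and a real runtime does far more. The high level keeps track of images and unpacking, and hands the actual "run" over to whichever low-level runtime it was given.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol


class LowLevelRuntime(Protocol):
    """Low level: its only job is to run a container from an unpacked bundle."""

    def run(self, bundle: str) -> str: ...


class SimpleLowLevelRuntime:
    """Hypothetical low-level runtime used only for this sketch."""

    def run(self, bundle: str) -> str:
        return f"running {bundle}"


@dataclass
class HighLevelRuntime:
    """High level: image management and unpacking, then delegate the run."""

    low_level: LowLevelRuntime
    images: Dict[str, str] = field(default_factory=dict)

    def pull(self, image: str) -> None:
        # Pretend to download and unpack; remember where the bundle lives.
        self.images[image] = f"/bundles/{image}"

    def run(self, image: str) -> str:
        if image not in self.images:
            self.pull(image)
        # The layer boundary: running is not this layer's responsibility.
        return self.low_level.run(self.images[image])


if __name__ == "__main__":
    runtime = HighLevelRuntime(low_level=SimpleLowLevelRuntime())
    print(runtime.run("nginx"))
```

Because the high level only depends on the `run` method, a different low-level runtime can be dropped in without touching the image handling.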
