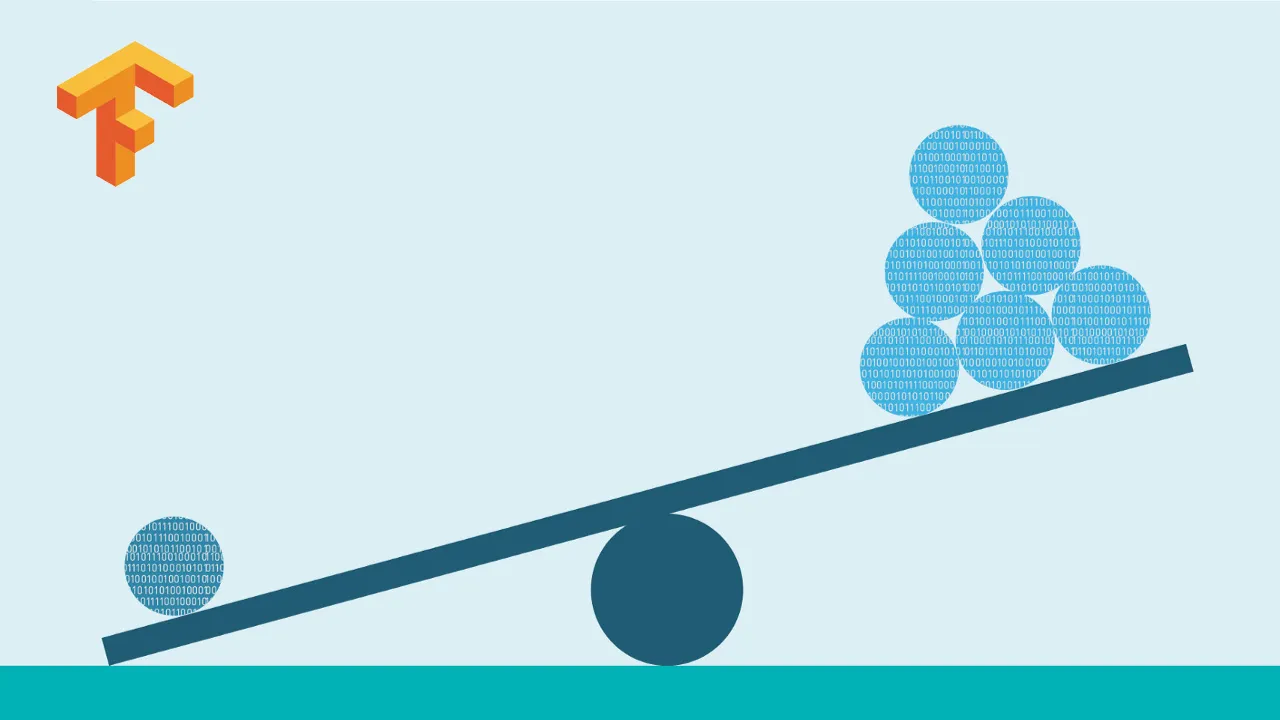

Class imbalance is a common challenge when training Machine Learning models. This article presents one possible solution: generating class weights and using them in single-output and multi-output models.

Class imbalance is frequently encountered when developing models for real-world applications. It occurs when there are substantially more instances of one class than of the others.

For example, in a Credit Risk Modeling project, when looking at the status of loans in historical data, most of the loans granted have probably been paid in full. If a model susceptible to class imbalance is used, defaulted loans will probably have little influence on the training process, because the overall loss keeps decreasing even when the model focuses only on the majority class.

To make the model pay more attention to examples where the loan was defaulted, class weights can be used so that the loss is larger when an instance of the underrepresented class is incorrectly classified.
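To make this concrete, here is a minimal sketch of a class-weighted binary cross-entropy in plain Python. It is an illustration only: the function name `weighted_binary_cross_entropy`, the toy labels and predictions, and the weight of 4.0 for the minority class are all assumptions made for this example, and averaging over the number of examples is just one possible normalization.

```python
import math


def weighted_binary_cross_entropy(y_true, y_pred, class_weight):
    """Mean binary cross-entropy, with each example's loss scaled by the weight of its true class."""
    eps = 1e-7  # keep probabilities away from 0 and 1 so log() stays finite
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += class_weight[y] * loss
    return total / len(y_true)


# Hypothetical toy data: 1 = defaulted loan (minority), 0 = paid in full (majority).
y_true = [0, 0, 0, 0, 1]
# A model that predicts a low default probability for every loan.
y_pred = [0.1, 0.1, 0.1, 0.1, 0.1]

# Equal weights: the single missed default contributes little to the mean loss.
unweighted = weighted_binary_cross_entropy(y_true, y_pred, {0: 1.0, 1: 1.0})
# Assumed weight of 4.0 for the minority class: the same mistake costs four times as much.
weighted = weighted_binary_cross_entropy(y_true, y_pred, {0: 1.0, 1: 4.0})

print(f"unweighted loss: {unweighted:.4f}")
print(f"weighted loss:   {weighted:.4f}")
```

With the higher weight on class 1, ignoring the defaulted loan is no longer a cheap way to lower the overall loss, which is the effect class weights are meant to achieve.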

Besides class weights, there are other approaches to address class imbalance, such as oversampling and undersampling, two-stage training, or using loss functions better suited to imbalanced data. However, this article focuses on how to calculate and use class weights when training your Machine Learning model, as it is a simple and effective way to address imbalance.

First, I will show a way to generate class weights from your dataset, and then how to use them in both single-output and multi-output models.

#imbalanced-data #tensorflow #data-analysis #keras #machine-learning #dealing with imbalanced data in tensorflow